Video Streaming of Events

The recent Institutional Web Management Workshop (IWMW 2006) [1] was a rare opportunity to try out a few new pieces of technology. With events that occur at a different location each year, this is often difficult: the infrastructure at the venue may not be suitable, and it is hard to liaise effectively with technical staff at the venue before the event in order to put all the necessary technology into place. It may also be difficult to test thoroughly before the event, meaning that it is unwise to publicise the expected availability of the service. Since IWMW 2006 took place at UKOLN's home at the University of Bath, an effort was made to look into possible technologies and take full advantage of the opportunity.

After due consideration we settled on audio and video streaming of selected speeches as a feasible (although challenging) aim.

The project was very much about establishing the answer to one question: is video streaming technology ready and waiting to be used, or does it remain (as it is often perceived) a specialist and expensive technology requiring purpose-built and dedicated hardware? We felt that the technology had probably matured to the point that it had become accessible to technical staff, and even to hobbyists, and set out to test our hypothesis.

There are several technical notions that are required in order to understand video streaming, and although we will not undertake a fully fledged lecture on video theory, we will give a fair amount of background information.

Real-time video is probably one of the most resource-intensive applications one can expect to deal with, due to the sheer amount of data and the speed at which this data needs to be handled. A lot has to be done in the background before being able to send a Web-friendly video stream, by which we mean one that: has a reasonable viewing size (typically 320×240); can be accessed over a standard Internet connection; and is still more than a random collection of moving squares. Firstly, sending uncompressed video via the Internet would be inconceivable; in fact there are few user-targeted applications with uncompressed video - DVD and even HD DVD and Blu-ray Disc (BD), while quite high-quality, all use compressed video.

An example of the amount of bandwidth required to receive raw video in VGA format (640×480), which is close to television quality, would be:

640×480 × 24 bits/pixel × 25 frames/s = 184,320,000 bits/second = 22 MiB/s

A fairly fast broadband connection (1 Mbit/s) still only represents somewhat less than 1% of the required bandwidth. Therefore one needs to use some sort of compression. However, the compression used is highly dependent on the target transport method. As with audio compression [2], one needs to start from the best video input possible.
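The arithmetic above can be checked with a short script. This is only a sketch of the calculation in the text; the function and variable names are our own, chosen for illustration.

```python
def raw_video_bitrate(width, height, bits_per_pixel, frames_per_second):
    """Return the bit rate, in bits per second, of uncompressed video."""
    return width * height * bits_per_pixel * frames_per_second


# VGA frame, 24-bit colour, 25 frames per second (the figures used in the text).
bits_per_second = raw_video_bitrate(640, 480, 24, 25)

# Convert bits/s to MiB/s: 8 bits per byte, 2**20 bytes per MiB.
mib_per_second = bits_per_second / 8 / 2**20

# Share of the raw stream that a 1 Mbit/s broadband line could carry.
broadband_bits_per_second = 1_000_000
share = broadband_bits_per_second / bits_per_second

print(f"{bits_per_second:,} bits/s")
print(f"{mib_per_second:.2f} MiB/s")
print(f"{share:.2%} of the required bandwidth")
```

The last line shows that the broadband connection covers roughly half of one percent of the raw stream, which is why compression is unavoidable.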

Encoding Video

A video can be considered as a collection of images being displayed one after the other, with the term image being defined as a rectangular shaped mosaic made of pixels representing a scene. And indeed this is the way most video cameras will capture them. However video is rarely stored that way as the storage requirements would be far too great, as shown above.

In order to bring the size down to a manageable level, most video devices will use some form of compression. The first compression method used is transformation of each frame of the video from the RGB colour space to YUV. RGB means that each pixel is composed of three values of identical size (usually 8 bits), one for Red, one for Green and one for Blue (hence the name). YUV stems from the principle that most of the detail in an image is contained in its grey scale version (the luminance, Y). Colour (the chrominance, U and V) does not need to be stored as precisely, so the overall size of the image can be reduced. For example, for every cluster of 2×2 pixels defined in the luminance layer, only one value will be defined in each of the two chrominance layers. At this point, digital imaging specialists are probably alarmed on reading about YUV being used in the digital video context when this term should only really be applied to analogue video. The correct colour space name for digital video is YCbCr, but the principle is the same [3][4].
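As a rough illustration of the saving, the sketch below converts a pixel from RGB to YCbCr and compares the storage needed for a full RGB frame with that of a frame whose chrominance is stored once per 2×2 cluster. The conversion weights are the full-range BT.601 values used by JPEG/JFIF, which is an assumption on our part (digital video commonly uses a reduced range); the function names are hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to full-range YCbCr (BT.601 weights)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return round(y), round(cb), round(cr)


def frame_bytes_rgb(width, height):
    """Three 8-bit values (R, G, B) for every pixel."""
    return width * height * 3


def frame_bytes_subsampled(width, height):
    """One 8-bit luminance value per pixel, plus one Cb and one Cr value
    for every 2x2 cluster of pixels."""
    luminance = width * height
    chrominance = 2 * (width // 2) * (height // 2)
    return luminance + chrominance


rgb_size = frame_bytes_rgb(640, 480)
subsampled_size = frame_bytes_subsampled(640, 480)
print(rgb_size, subsampled_size, subsampled_size / rgb_size)
```

For a VGA frame the subsampled version takes half the space of the RGB original, before any further compression is applied.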

Changing the colour space is not the only way to compress video, and on its own it is far from sufficient. One can look at video in two different ways. The first, from the historical standpoint, is the old-fashioned film method, where images are recorded one by one onto film. Each frame contains all the information needed to view that frame; in other words, the video is a continuous series of complete images displayed one by one at fairly high speed.

The other way of looking at the problem is from the animation point of view where one has to draw each image by hand. In this approach of course very little of the image changes from one frame to the next and one can therefore keep the background from the previous frame and just redraw the bits that have changed.

This is called temporal compression and is used in nearly all digital video compression algorithms. There are many different ways of achieving this effect and all have their advantages and drawbacks. The choice is always a compromise. For a predefined image quality, it usually comes down to either a large file produced by a fairly primitive algorithm that is very easy to decode, or a small file produced by an extremely complex algorithm that is consequently highly resource-intensive to decode. The same trade-off applies to any variation of the parameters: you cannot get a very small file with perfect image quality that is also very easy to encode and decode. Therefore, your choice of compression format is highly dependent on the final use of the file.
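The animation analogy can be made concrete with a toy example. The sketch below stores one complete frame and then, for the next frame, only the pixels that have changed. It is a deliberately naive illustration of the idea, not the method used by any real codec (which typically predict whole blocks of pixels and compensate for motion); frames are flat lists of pixel values and all names are hypothetical.

```python
def encode_delta(previous, current):
    """Return (index, new_value) pairs for pixels that differ from the previous frame."""
    return [
        (index, new)
        for index, (old, new) in enumerate(zip(previous, current))
        if old != new
    ]


def decode_delta(previous, delta):
    """Rebuild a frame by redrawing only the changed pixels over the previous frame."""
    frame = list(previous)
    for index, value in delta:
        frame[index] = value
    return frame


# A complete first frame, then a frame in which only two pixels change.
key_frame = [10, 10, 10, 10, 10, 10, 10, 10]
next_frame = [10, 10, 10, 99, 98, 10, 10, 10]

delta = encode_delta(key_frame, next_frame)
rebuilt = decode_delta(key_frame, delta)
print(delta)
print(rebuilt == next_frame)
```

Only two changed pixels need to be stored instead of eight, but the decoder must already hold the previous frame, which is one reason why decoding complexity grows as compression improves.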

Muxing Video

Muxing (short for multiplexing), a new item of jargon, refers to the combining of encoded audio and video into a single file: most of the time, a video file is an envelope (or container) for the audio and video content it holds. However, the industry has not made life easy, as it has failed to disambiguate its terms, and frequently refers to the file format by the same name as the compression algorithm.

Starting with audio only, the main use of a container is that it will have a series of flag positions and descriptions of how to read the encoded audio stream it contains. Most file formats will enable the user to choose to move to any part of the stream, forward or backward.

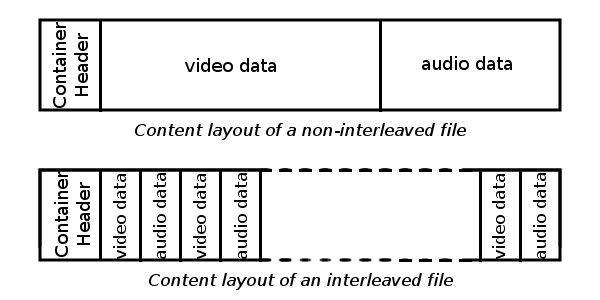

Video containers work in much the same way, with the added complexity that they keep detailed position information for two streams, the audio and the video. For our streaming purposes, we need to choose a container that enables a player to play the file even though it does not have all of it. There are basically two ways of putting audio and video data in a file. The easy way is to write one stream first and the other afterwards, and rely on the container data to tell the player how to synchronise the two. This is called a non-interleaved file. The alternative is an interleaved file, where bits of the audio and the corresponding bits of video are written in succession. The latter is the only way a file can be streamed.

Figure 1: File content of video containers
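The difference between the two layouts can be sketched in a few lines. The example below models a file as a list of labelled chunks and counts how many chunks a player must receive before it holds the start of both streams. The chunk model and function names are hypothetical simplifications; real containers also carry headers, timestamps and index data.

```python
def non_interleaved(video_chunks, audio_chunks):
    """Write the whole of one stream, then the whole of the other."""
    return [("video", c) for c in video_chunks] + [("audio", c) for c in audio_chunks]


def interleaved(video_chunks, audio_chunks):
    """Alternate each piece of video with the corresponding piece of audio."""
    layout = []
    for video, audio in zip(video_chunks, audio_chunks):
        layout.append(("video", video))
        layout.append(("audio", audio))
    return layout


def chunks_needed_to_start(layout):
    """Count the chunks received before the player has some video AND some audio."""
    seen = set()
    for count, (kind, _) in enumerate(layout, start=1):
        seen.add(kind)
        if seen == {"video", "audio"}:
            return count
    return None


video = ["v0", "v1", "v2", "v3"]
audio = ["a0", "a1", "a2", "a3"]

print(chunks_needed_to_start(non_interleaved(video, audio)))
print(chunks_needed_to_start(interleaved(video, audio)))
```

With the non-interleaved layout the player has to wait for the entire first stream before any of the second arrives, which for a live stream never happens; with the interleaved layout playback can begin almost immediately.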

The Technologies Used

In order to ensure that the process was technically feasible, we first put together a list of our requirements and the constraints placed upon us. Our use cases were fairly simple; we required a system for video streaming that:

- Was sufficiently stable for use in a conference environment, where very little time could be spared by technical staff for 'nursemaiding' the system

- Could be built from off-the-shelf parts, most of which were already available to us; we had a strictly limited budget for the purpose

- Could be reused in a variety of environments, i.e., which did not make use of dedicated hardware specific to the University, and was not dependent on University-specific software licensing or exemptions.

Requirements

Bandwidth

The University of Bath, being linked to JANET, the Joint Academic Network, has a great deal of bandwidth available to it - especially in summer, when most students are on vacation. However, as mentioned in the technical background section above, video streaming is potentially an extraordinarily expensive activity in terms of bandwidth. Whilst JANET bandwidth is extremely large, and the University's own network weighs in at 100 Mbit/s, the major cause of concern was the viewers' own Internet connections.

Moreover, we should recall that video streaming bandwidth is additive; one viewer = one unit of bandwidth, ten viewers = ten units of bandwidth. Scalable solutions require relatively little bandwidth to be used per connection. This is a major issue in determining the choice of codec (Coder-Decoder) to be used.
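Because the cost is additive, the server's outgoing bandwidth is simply the number of viewers multiplied by the stream bitrate. The sketch below illustrates this; the 300 kbit/s stream bitrate is a hypothetical figure chosen for the example, not a measurement from the event, and the function names are our own.

```python
def total_bandwidth_kbps(viewers, stream_kbps):
    """Outgoing bandwidth needed when every viewer receives their own copy of the stream."""
    return viewers * stream_kbps


def max_viewers(uplink_kbps, stream_kbps):
    """Largest whole number of viewers that a given uplink can serve."""
    return uplink_kbps // stream_kbps


STREAM_KBPS = 300        # assumed bitrate of one stream (hypothetical)
UPLINK_KBPS = 100_000    # a 100 Mbit/s network, expressed in kbit/s

for viewers in (1, 10, 16):
    print(viewers, "viewers need", total_bandwidth_kbps(viewers, STREAM_KBPS), "kbit/s")

print("Upper bound on viewers:", max_viewers(UPLINK_KBPS, STREAM_KBPS))
```

Halving the bitrate doubles the number of viewers that can be served, which is why low-bitrate codec performance matters so much in the choice of codec.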

Reasonable needs in terms of bandwidth translated, in practice, into finding a video codec that performs well at low bitrates. Measuring the performance of codecs is an ongoing research topic; it is situational, in that it depends on the content of the video stream and also on the user. For example, those who watched the World Cup features on the BBC Web site will have noticed that certain codecs have a tendency to fragment badly when faced with camera motion and several focuses of attention on the pitch, which tends to induce nausea on the part of the viewer. On the other hand, that same codec suffices entirely for watching a 'talking-head' announcer discussing the news of the day against a static background.

Fortunately for us, a wired network connection was available in the lecture theatre. Had this not been the case, we would have been forced to share a wireless connection with a large number of delegates' laptops, which would have limited bandwidth in unpredictable ways, and would have very likely reduced the performance and stability of the result.

Cost

As mentioned previously, this project was partly about ascertaining the cost of video streaming. Important criteria included:

- Free, multiplatform viewing software must be available

- A free server platform must be available

In this instance, 'free' could apply either to 'free as in speech', or 'free as in beer', although obviously 'free as in speech and beer' was a strong selling point. Whilst academic licences, exemptions or demonstration versions were available for certain platforms, it seemed disingenuous to demonstrate solutions to the enthusiast that come with a hefty price tag in normal use, or cannot be applied in practice due to licensing restrictions.

Sensors, DV and Hardware Encoding

Partly because of the cost issues, we preferred solutions that allowed us to use the popular combination of a DV camera and a FireWire (IEEE 1394) link. FireWire-enabled DV cameras are now fairly commonplace - we used Emma's throughout testing - and a solution based around one would require no specialised hardware other than a FireWire input port or PCI FireWire card (costing around £15, this was not an expensive upgrade). One alternative would have been the use of an MPEG encoder card taking an S-Video or composite video input, but a real-time MPEG encoder card is relatively expensive, and probably out of range for the intended audience.

It was also possible to use a webcam or similarly inexpensive video input device. From personal experience, we knew that webcams often suffered from video quality issues. Webcams come in two common flavours: CCD (Charge-Coupled Device) sensors, or, at the low end of the market, CMOS (Complementary Metal Oxide Semiconductor) sensors. CMOS webcams tend to have difficulty picking up fast changes in light intensity, are rather grainy, and blur on fast motion. CCDs operate better, but even at the upper end of the market, webcams have a limited range and quality, cannot be adjusted or focused to any degree of precision, and tend to shift inadvertently when filming. They are inherently better suited to close-up static shots. Since we hoped to provide an overview of the lecture theatre, and attempt some camera work when possible, the DV solution was more suited to our needs.

Patent Issues

Video and graphics are profitable areas for companies, which are inclined to seek legal protection for their discoveries and implementations wherever possible. To be a little more direct, video streaming is a patents minefield.

The 'Windows codecs', which is to say WMV (Windows Media Video) and ASF (Advanced Streaming Format), are patent-encumbered and action has previously been taken against software authors who implemented these standards in unwanted ways. For example, the popular GPL project VirtualDub, a video editing application, was requested by Microsoft to remove support for ASF from the application. Microsoft had patented the codec, and although the original author had reverse-engineered the file format, Microsoft felt that the code infringed upon their patent.

Similarly, various other file formats are not 'free'; indeed, very few are. The problem is not necessarily that a single patent exists to cover a given format; rather, a large number of patents apply. For example, MPEG-2 is covered by at least 30 patents, each of which must be licensed, although some consider it necessary to license up to 100 patents. To simplify this process, a patent pool exists, which is managed by MPEG LA. The MPEG-2 file format is now licensed under strict rules - royalties for each decoding application are payable, at a cost of around $2.50 per instance.

The Sorenson codec, used frequently in the encoding of QuickTime movies, was for many years a difficult subject. The specification was not made public, making it difficult to view these videos without using an official Apple decoder. It was eventually reverse-engineered, however, and is now viewable on all platforms.

However, it is clear that such issues have a significant impact on the long-term preservation of digital video, as well as on the immediate usability and cost of the result. We were keen to establish tentative best practice in the field; this issue was therefore a significant factor in our choice of technology.

Realistic Encoding Time

In keeping with the budget limitations, we did not have a particularly fast machine available for encoding. We had borrowed one of our desktop computers, which was at the time slightly over a year old. It was a 3 GHz university-standard Viglen desktop PC running Linux, with a non-standard addition of an extra 512 MiB of RAM, to bring it up to a total of 1 GiB. Fortunately, it was a dual-thread, or hyperthreaded, Intel with 2 MiB of cache in total; when putting together specifications for a good video encoding platform, cache size is an important variable. This was entirely fortuitous, however; it had not been bought with this use in mind.

Video encoding, as mentioned above, is not necessarily a fast process; it is a tradeoff between speed and accuracy. The codec chosen must be sufficiently fast to encode in real-time on the desktop PC pressed into service, whilst retaining sufficiently good quality of image to be watchable at a low bitrate.

Ease of Use

We were not very concerned about the fact that less 'polished' server-based solutions would be fiddly for the technical staff to operate, as long as the result was stable and 'took care of itself' under most circumstances. However, we were very concerned about the amount of work the end user would have to put in to set their computers up to view the video stream. This was a significant issue for us because we were unable to offer any form of technical support to remote users other than a Web page.

Shortlisting Codecs

As mentioned in the background section, it is important to recall the difference between container formats and codecs. Instead of going into the details, we present several likely combinations of container and codec.

Options considered included:

- ASF/WMV

- QuickTime (regrettably impractical, as we did not own a Mac) [5]

- MPEG-1, MPEG-2

- MPEG-4/H.264

- Ogg Theora

- RealVideo (typically uses a variant of H.264)

- Flash-based video streaming (generally uses a variant of H.263 (Sorenson) to encode video in FLV (Flash Video) format)

Of these, few are reliably supported natively on all viewing platforms. Flash is available on any machine with the Flash plugin installed. Many platforms have some form of MPEG-1/2 decoder. The ASF/WMV solution is handled natively on Windows, and QuickTime is handled natively on Macs. RealVideo generally requires a download. Ogg Theora is not native to any platform, and always requires a codec download. Support for specific versions of each of the above is patchy, so the details may vary depending on the implementation.

In terms of costs, the vast majority of these formats are patented and require a royalty. Theora is open and patent-free. FLV is a proprietary binary format.

Note that the following table contains highly subjective measures of low-bitrate performance, and cannot be generalised - the measurement of performance is very dependent on situation, light level, level of motion, etc.

| Codec | Encoder | Low-bitrate performance | Native? | IP |
| --- | --- | --- | --- | --- |
| ASF/WMV | Windows Media Services 9 (Server 2003) | OK | Windows | Patented, restrictions in place |
| QuickTime | QuickTime Streaming Server (part of OS X Server) | OK | Mac | Various formats, some open |
| MPEG-1/2 | VLC, FFmpeg | Low | Various | Closed, patented, royalty payable |
| MPEG-4 H.264 | VLC, FFmpeg, QuickTime Streaming Server | Good | No | Contains patented elements |
| RealVideo | RealVideo server | Adequate | No | Contains patented elements |
| Ogg Theora | ffmpeg2theora | Very Good | No | Open specs |
| FLV | Flash Media Server, FFmpeg | Good | Flash plugin | Some specs available (but uses patented MP3 code) |

Table 1: Some codec/wrapper combinations

As can be seen from Table 1, there was little likelihood of finding a one-size-fits-all solution. The Flash-based solution came the closest to overall interoperability without requiring any downloads. We then proceeded to take a closer look at a few 'free as in beer' software combinations.

Candidate Software Solutions

We then looked around for methods of generating and streaming video, and came up with a total of three candidate solutions, each of which was capable of encoding from a digital video camera over FireWire (also known as i.Link or IEEE 1394).

VideoLAN

VideoLAN (VLC) was capable of streaming MPEG-1, MPEG-2 and MPEG-4. In practice, though, encoding was slow and the resulting video stream quickly picked up lag.

FFmpeg + dvgrab, IEEE1394 or libiec61883, Outputting to ffserver.

ffserver is an encoding server capable of producing anything that the FFmpeg library can handle, including Flash-encapsulated formats. The uplink between the machine performing FFmpeg capture and the machine running ffserver must be rock-solid and extremely fast, since the intermediate video stream is essentially uncompressed!

The major advantage of this approach was that it can output one video input simultaneously in multiple formats, so that some users can watch Flash whilst others watch the same stream in MPEG-4, RealVideo, Windows Media Video or Ogg Theora. This would have been our method of choice, but local conditions and a shortage of testing time precluded it on this occasion.

Ogg Theora

This solution also used dvgrab, IEEE1394 or libiec61883, but encoded entirely on the machine performing the capture. The encoding itself is done by a piece of software called ffmpeg2theora, which produces an Ogg Theora-encoded stream. This is then forwarded (piped) directly to an Icecast server using oggfwd. Icecast is actually an 'internet radio' streaming media server, principally designed to stream audio data to listeners. It also has video streaming support via Ogg-encapsulated video codecs. (Ogg, like AVI, is an 'envelope' for data in various formats; by contrast, MP3 files contain only MP3-encoded data.) The commonest video format found in Ogg files is Theora.
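The chain of tools described above can be pictured as a three-stage pipe. The sketch below only assembles the command line as text; it does not run anything. The options shown are written from our recollection of each tool's documentation and should be checked against the installed versions before use. The host name, port, password and mount point are placeholders, and the 320×240 output size is an assumption taken from the 'typical' viewing size mentioned earlier.

```python
import shlex


def build_pipeline(host, port, password, mount, width=320, height=240):
    """Return the three stages of a capture -> encode -> forward pipeline as argument lists.

    All option names are assumptions to be verified against each tool's manual.
    """
    # Stage 1: read raw DV from the FireWire camera and write it to standard output.
    capture = ["dvgrab", "--format", "raw", "-"]
    # Stage 2: read DV from standard input and write an Ogg Theora stream to standard output.
    encode = [
        "ffmpeg2theora", "-f", "dv",
        "-x", str(width), "-y", str(height),
        "-o", "/dev/stdout", "-",
    ]
    # Stage 3: forward the Ogg stream to a mount point on the Icecast server.
    forward = ["oggfwd", host, str(port), password, mount]
    return [capture, encode, forward]


def as_shell_line(stages):
    """Join the stages with pipes, quoting each argument for the shell."""
    return " | ".join(shlex.join(stage) for stage in stages)


# Placeholder server details, for illustration only.
command_line = as_shell_line(
    build_pipeline("icecast.example.org", 8000, "hackme", "/iwmw.ogg")
)
print(command_line)
```

Because each stage only reads from the previous one, all of the encoding happens on the capture machine, and only the already compressed Ogg stream crosses the network to the streaming server.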

Had we been entirely confident of our infrastructure and resources, we would have used the FFmpeg solution. However, we felt that the data transfer might be briefly interrupted from time to time, and that a better result would be achieved using a less demanding approach. We therefore chose the Ogg Theora solution. A disadvantage of the approach was that almost all viewers needed to download the codec specifically for this event, since it is not a commonly distributed codec. On the other hand, the stream to the Icecast server was less demanding, the image looked good at low bitrates and the encoding used very few system resources (i.e. a much slower machine could have been used successfully for this format).

Conclusion

In general, the video streaming was a popular feature. At one point, sixteen users were watching simultaneously with no ill effects on the server. This gives us the confidence to consider publishing a timetable in advance in future years, ensuring that the service is promoted before the event. However, there was one major flaw: turning off the camera or interrupting the Ogg stream caused broken frames, which meant that remote users' connections to the Icecast server were broken. They were therefore forced to reconnect approximately once an hour.

We did not have a method of switching between DV inputs, either in software or in hardware. Hardware solutions such as DV-capable mixing desks do exist for this sort of purpose, and would be a low-effort solution for future years. However, given that such a piece of hardware costs something in the region of a couple of thousand pounds, this takes us outside our original statement of intent. Software-based solutions are very probably available, or certainly could be created at the cost of a couple of weeks of qualified time and the expenditure of a few thousand brain cells. As of now, though, we are not aware of an appropriate application for doing this with video data, although audio is another question.

The experiment nonetheless ably demonstrated the correctness of our hypothesis. Video streaming is not beyond the reach of today's hardware, requiring just a few kilobytes/second of upload bandwidth, a cheap FireWire digital video camera, a standard PC plus a FireWire port, a copy of Linux and something worth broadcasting! We hope to repeat the experiment at future IWMWs, using a dedicated laptop.

References

- [1] Institutional Web Management Workshop 2006 http://www.ukoln.ac.uk/web-focus/events/workshops/webmaster-2006/

- [2] "Recording Digital Sound", UKOLN QA Focus Briefing Paper 23, 2004 http://www.ukoln.ac.uk/qa-focus/documents/briefings/briefing-23/

- [3] "YUV Wikipedia Article" (viewed 2006-10-27) http://en.wikipedia.org/wiki/YUV

- [4] "YCbCr Wikipedia Article" (viewed 2006-10-27) http://en.wikipedia.org/wiki/YCbCr

- [5] QuickTime Streaming Server 2.0: Streaming Live Audio or Video http://docs.info.apple.com/article.html?artnum=75002

Further Reading

- FFmpeg Multimedia System http://ffmpeg.mplayerhq.hu/

- ffmpeg2theora http://www.v2v.cc/~j/ffmpeg2theora/

- Ogg Theora Video streaming with Icecast2 howto http://www.oddsock.org/guides/video.php

- Theora Free Video Compression http://www.theora.org/

- Theorur - for Ogg/Theora Streaming http://theorur.tvlivre.org/

- VideoLAN http://www.videolan.org/