Research Libraries and the Power of the Co-operative

RLG Programs became part of OCLC in the summer of 2006. In November of last year, RLG Programs announced the appointment of a European Director, John MacColl. This article explains the rationale behind the combination of RLG with the OCLC Office of Research, and describes the work programme of the new Programs and Research Group. It argues for co-operation as the necessary response to the challenges presented to research libraries as the Web changes the way researchers work, and it lays out a new programme dedicated to research outputs, which will have significant European Partner involvement.

What Game Are We In?

Ranganathan's Fourth Law of Library Science, 'Save the time of the user' [1], has never been more urgent a mandate upon research libraries and information services than it is at this point in the development of the Web. Research libraries have in many ways been left behind by the Web. It is a commonplace for research library directors to admit that researchers have deserted the physical premises of their libraries in droves, though they continue to make use of the e-journals which are licensed for them by libraries. But for much of their information seeking, discovery and use, they now make use of the tools of the Web – Google, Google Scholar, Amazon, Facebook, YouTube and Flickr – and of a host of services, such as CiteSeer for computer scientists, which they have built for themselves without library input. Libraries in research institutions have sought to re-establish their priority within the working lives of researchers by building new infrastructure – institutional repositories, federated search services, and virtual research environments – but the evidence of take-up is generally not convincing.

Two decades ago, academic libraries still saw themselves as set on a course towards UBC, Universal Bibliographic Control. One of the core programmes of IFLA (International Federation of Library Associations), the UBC programme office closed in 2003. The closure was not the result of UBC having been achieved. The Web had happened. And whereas the early days of automation in libraries had led to the belief that computer-assisted bibliographic services (witness MARC) would be the instrument which would finally make UBC a reality for a profession which had spent over a century refining and improving its controls over research information, in fact what happened with the Web was that automation evolved into something much more than an instrument of bureaucratic efficiency, and became a universal medium for human interchange and communication. The currency of research information was widened way beyond the digital analogues of the print paradigm, to include preprints of research papers, conference presentations, image banks, datasets, graphical representations produced from them, and a variety of community conversation venues which reflected the heterogeneity of research cultures. Libraries were sent reeling. The notion of Universal Bibliographic Control became difficult even to imagine in this widened environment of shared research inputs and outputs. Universal loss of control is what followed.

Libraries' responses to the Web revolution were nonetheless generally correct. The Web is changing scholarly publishing, and so the motivations behind institutional repository development are on target. And we do need to provide for discovery which allows for the resources in our care to be found by those who seek them in the new medium. The Web provided the information age with a vast, functional, networked environment which suited it very well – but in doing so it accelerated the information age paradigm of scarce attention and abundant resources [2], fully reversing the paradigm to which libraries had adapted well but which was already disappearing in the late 20th century, of abundant attention and scarce resources. And so, indeed, we had to find new and effective ways to save the time of the user. But in our efforts to do so, we have tended too quickly to set up and fix in place other new services, in ways which are too influenced by the paradigms of our pre-Web professional existence, and without due attention to the behaviour of our users (whose well-understood ways of working in the print world we thought we knew and so had stopped checking on). Researchers have deserted our services not because all of their information discovery, access, fulfilment and persistence needs are met by the Web's big services, and those built by their own communities, but because we cannot meet these needs either. We cannot provide them with high-quality, authoritative, reliable and comprehensive services across the research domains they inhabit. By contrast, services like Google Scholar and CiteSeer do indeed save the time of the user, and if the content they provide is compromised or limited in certain ways, then the answer perhaps ought to be to address the provision of the content, rather than seeking to create new tools.

Research Information at Web Scale

In the summer of 2006 the Research Libraries Group, a membership organisation representing a powerful sample of some of the world's top research libraries drawn from the academic and national library sectors, museum libraries, and archives, became part of OCLC, the world's largest library co-operative. Since its foundation in 1967, OCLC has been involved in building a co-operative database for the sharing of catalogue records – WorldCat – which is now the largest bibliographic database in the world, with around 100 million separate records, and over a billion holdings attached to them from member libraries across the globe. The 'marriage' of RLG and OCLC was a timely one. Despite their difficulties in meeting the challenges of the Web in recent years, research libraries are pioneers in the creation of new territory for information and memory organisations in this digital age, which is a much happier medium for the information age than was the multimedia analogue age which preceded it. The digital age has itself resolved into the world of the Web, which is a habitat so natural for people that we have seen it produce a set of hub services with high gravitational pull, now used daily by millions of people across the world, and which are setting the standards for the way services on the Web behave. When we use the Web to pursue information-based goals now, we expect interfaces which allow access, search, fulfilment and contribution within a paradigm which is becoming as clear as the printed codex did in its day for the more limited purposes which it could serve.

In short, the Web now allows the information age the freedom it needs, and for researchers of all kinds, that means that they require information services which play in the environments which have high gravitational pull (so that they can be found there and are convenient), which behave in expected ways within those environments, and which have 'Web-scale' comprehensiveness. To be 'Web scale' means to benefit from the power of aggregation of both supply and demand which is so well exemplified among the major hubs by amazon.com. In the world of information and memory organisations, OCLC's WorldCat has Web scale. It represents an aggregation of supply – through user-contributed content in the form of catalogue records (now extending to the article level, as well as books and journal titles) – and of demand, from users requiring copies of records for their own databases. Indeed, it took on a role of serving the 'long tail' of libraries and their users – for inter-lending as well as for copy cataloguing – long before the term was coined by Chris Anderson [3].

But that core model has ramified in various ways as the information age has become the age of the Web. The users who contribute content are now publishers as well as libraries. They are library consortia, contributing entire national union catalogues. On this sort of scale, WorldCat becomes more than just a massive set of records for copy cataloguing. It is a vast pool of authoritative, standardised data, and therefore capable of supporting data-mining to deliver some fascinating new Web-scale services. WorldCat Identities [4], for example, creates a page for every name in WorldCat, algorithmically drawing together variant forms of personal name, in multiple languages and scripts, together with cover art from the bibliographic objects, around a graphical bibliographic profile which shows publications by and publications about the subject in a timeline graphic. OCLC WorldMap [5] shows the bibliographic profiles of entire countries, as represented in WorldCat, and allows up to four at a time to be compared.

Much of the potential of WorldCat to go beyond the support of cataloguing is only now beginning to be explored. With the incorporation of RLG into OCLC, that exploration has been accelerated and focused, because the RLG team of Program Officers, experienced in the needs of the research information and memory organisation sector across universities, national libraries, museums and archives, has now joined forces with the former OCLC Office of Research, which consists of research scientists and software engineers who organise and mine WorldCat on behalf of OCLC members, and who prototype and build new tools which allow it to meet the needs of these member libraries. The bringing together of RLG and OCLC therefore gave Web-scale capability to research libraries, so that they can now build services of value to researchers who themselves operate at Web scale, and can assist institutions in exploiting Web-scale effects in order to make their service offerings system-wide more efficient and progressive. At the same time, it gave OCLC the opportunity to put the research information world's needs firmly at the forefront of its agenda. OCLC of course represents libraries of all kinds, but the research information sector arguably stands to gain more from global pooling of bibliographic data at the present time because its users – researchers, learners and visitors to cultural memory services on the Web – are living out much of their professional lives and their cultural engagement on the Web. These are the needs which require Web-scale services to be delivered, and OCLC has the Web-scale from which these new services can be specified, prototyped, tested, built, evaluated and released. That work is done within OCLC Programs and Research.

The Group divides into two teams, OCLC Research and RLG Programs, which work closely with each other. They plan and deliver their work via two synergistic agendas. The Research team is currently working on the areas of content management, collection and user analysis, interoperability, knowledge organisation, and systems and service architecture. Research scientists are addressing a swathe of issues facing the information profession through the lens of data analysis and experimentation. In the other half of the partnership, RLG Programs has a related Work Agenda which is likewise structured into themes, programmes and projects, but which grapples with issues through the environment of libraries, museums and archives 'on the ground', using its Partners as test populations and sources of user information. It surveys its Partner libraries, engages them in conversations, works with them to facilitate problem-solving, and seeks sector-wide consensus on sectoral problems wherever possible. The idea of 'Web-scale' services permeates the entire work agenda of RLG Programs, as it does that of OCLC Research. Web-scale solutions are sought to system-wide problems, and these solutions only arrive through collaborative discussion and analysis, from which fully scoped, many-dimensioned problems emerge and can be tackled.

Priority Areas

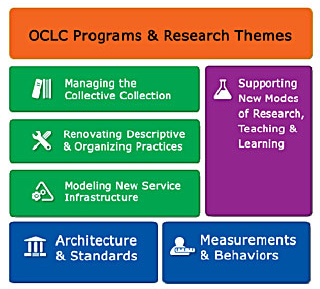

The RLG Programs Work Agenda consists of six themes, which are shown here:

Figure 1. The RLG Programs Work Agenda

Supporting New Modes of Research, Teaching, and Learning

This theme contains programmes devoted to 'scholarship in text aggregations', 'personal research collections' and (recently added) 'research outputs in reputation management', which is described more fully below.

Managing the Collective Collection

Activity within this theme covers 'shared print collections' and 'harmonisation of digitisation'. The feasibility of mass digitisation of special collections, the terms and conditions libraries should set when dealing with digitisation partners like Google and Microsoft, and the identification of unique holdings as priority targets for digitisation and preservation, are all topics which are currently featured under the 'collective collection' theme. WorldCat can also be mined for data on last copies and on collection overlaps in support of collection management across the digital corpus. This work is complemented by OCLC Research under its 'content management' theme, where data mining is being used to analyse the 'system-wide print book collection' to establish the size and characteristics of aggregate print book holdings, with an emphasis on implications for digitisation and preservation decision-making. A member of the Research team is also working on the economic justification of digital preservation. Economic issues associated with preserving digital resources over the long term are a critical research area for the digital preservation community. The work includes the development of forecasting strategies and sustainable business and economic models.

A separate project on the 'anatomy of aggregate collections' analyses the digitised corpus provided via the Google Book Search programme. Here, the Programs and Research synergy is very powerful, since 18 of the 29 libraries around the world which are currently working with Google on book digitisation from their collections are also RLG Programs Partners.

A 'last copy' project identifies rare or unique materials in individual library collections. Comparative collection assessment work within Research is looking at collection development, assessment, and resource sharing for print and e-book collections. A prototype publisher name server resolves ISBN prefixes to publisher names, resolves variant publisher names to a preferred form, and captures and makes available various publisher attributes (such as location, language, genre and dominant subject domain of the publisher's output).
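The behaviour described for the publisher name server can be illustrated with a minimal sketch. It is not the OCLC prototype: the lookup tables, prefixes, publisher names and attributes below are all invented for illustration, and the function names are hypothetical. It shows only the three steps named above: resolving an ISBN prefix to a publisher, mapping a variant name to a preferred form, and returning publisher attributes.

```python
from typing import Optional

# Hypothetical sample data; a real service would draw on a maintained registry.
PREFIX_TO_PUBLISHER = {
    "978012": "Example University Press",
    "9780123": "Example Monographs",
}
VARIANT_TO_PREFERRED = {
    "example university press": "Example University Press",
    "example univ press": "Example University Press",
    "eup": "Example University Press",
}
PUBLISHER_ATTRIBUTES = {
    "Example University Press": {"location": "Exampletown", "language": "eng"},
}


def normalise_isbn(isbn: str) -> str:
    """Keep only digits (and a possible X check character)."""
    return "".join(ch for ch in isbn if ch.isdigit() or ch in "xX").upper()


def publisher_for_isbn(isbn: str) -> Optional[str]:
    """Return the publisher for the longest known prefix of the ISBN."""
    digits = normalise_isbn(isbn)
    for length in range(len(digits), 0, -1):
        name = PREFIX_TO_PUBLISHER.get(digits[:length])
        if name is not None:
            return name
    return None


def preferred_name(variant: str) -> Optional[str]:
    """Map a variant publisher name to its preferred form, if known."""
    key = " ".join(variant.lower().replace(".", "").split())
    return VARIANT_TO_PREFERRED.get(key)


def publisher_profile(isbn: str) -> Optional[dict]:
    """Combine the prefix lookup with any recorded publisher attributes."""
    name = publisher_for_isbn(isbn)
    if name is None:
        return None
    return {"name": name, **PUBLISHER_ATTRIBUTES.get(name, {})}
```

Matching on the longest prefix first is a design assumption made here so that an imprint registered under a more specific prefix takes precedence over its parent publisher.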

Renovating Descriptive and Organising Practices

Under this theme, we examine ways in which collecting institutions can introduce efficiencies into their metadata creation operations, including cross-domain leverages, work on persistent identifiers, and the interoperability of vocabularies to assist discovery. In the archives field, RLG Programs continues the work developed by RLG on EAD (Encoded Archival Description) and on the EAC (Encoded Archival Context) standard.

Modelling New Service Infrastructures

This theme is concerned with ways to 'lift' library functionality to the network level. Much of the work done by RLG Programs seeks to achieve and promote new areas of consensus, and here we are exploring ways of developing integrated library system functionality within a shared context on the network. Registries have been described by Lorcan Dempsey as the 'intelligence in the network' [6], and are obvious levers of efficiency. RLG Programs staff are working with OCLC Research colleagues to develop, test and promulgate new registries, such as a Registry of Copyright Evidence, which will save the time of those seeking to digitise works in establishing their copyright status quickly and easily. And in work which crosses the boundary between new service infrastructures and renovating descriptive practices, we are developing tools for aggregating and syndicating third-party data and user-generated content to enhance metadata and help form the recommendation of and foundation for additional services.

Architecture & Standards; Measurements & Behaviours

Underlying all of this work are the ever-present efforts to measure and analyse user behaviours, and to advance standardisation work. RLG Programs engages Partners in hypothesis formulation and testing of their users' preferences for discovering, gathering, organising, and sharing information, and Programs and Research staff participate and often lead in both formal and informal standards efforts in representing library, archive, and museum interests.

Research Outputs in Reputation Management

We are conscious of a strong European interest in the area of research outputs publication and management. Open Access publication of research outputs in conjunction with their publication in peer-reviewed journals has become an issue not merely of efficiency in research lifecycle management, but also of political and economic import in a system in which research is financed largely from public monies. The accountability of public expenditure on research has become a high-profile matter, and public frameworks for the assessment of HE research have emerged. The latter rely, among other measures, on some evaluation of the quality of published output. Mainly due to the high cost of sustaining these frameworks on the basis of peer review of significant samples of research outputs produced by large numbers of institutions, there is discussion about moving parts of this evaluation from a peer-review process to one based on metrics, including, but not limited to, the well-tested approach of citation analysis. These frameworks for assessment necessitate new modes of interaction between university research administration and information management services offered by libraries. In the context of institutional management of reputation based on research performance profile and its associated financial reward, this is an area of library practice which has assumed a very high priority for research libraries. Good practice has not yet emerged, however, and the models for confronting these challenges are diverse due to the novelty of practice involved in libraries working on systems design with research administration units. Nor is there yet any common agreement on an optimal set of metrics for the assessment of research such that individual researchers as well as their institutions can be easily profiled nationally and internationally.

OCLC work to date in the Open Access area has been mainly through the DSpace harvesting project, in which OCLC Research periodically harvests OAI-compliant metadata from the institutional repositories of interested DSpace users. This metadata is harvested into a format suitable for re-harvesting by non-OAI services and popular search engines. With our new 'research outputs in reputation management' programme, Program Officers will also work with Partner libraries in this problem space, where some early state-of-the-art work could have useful impact, and should suggest further lines of work. Furthermore, it is an area where a comparative perspective would be interesting, given emerging national research assessment frameworks.
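As a rough illustration of the harvesting idea, the sketch below parses a small OAI-PMH ListRecords response carrying Dublin Core metadata and re-expresses it as a plain HTML list of links that a general-purpose crawler could follow. The sample response, the repository address and the choice of HTML as the output format are assumptions made for the example; the format actually produced by the DSpace harvesting project is not described here.

```python
import html
import xml.etree.ElementTree as ET

# Standard OAI-PMH and Dublin Core namespaces.
NS = {
    "oai": "http://www.openarchives.org/OAI/2.0/",
    "oai_dc": "http://www.openarchives.org/OAI/2.0/oai_dc/",
    "dc": "http://purl.org/dc/elements/1.1/",
}

# Invented sample response; a real harvest would fetch this from a repository.
SAMPLE_RESPONSE = """
<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <ListRecords>
    <record>
      <header><identifier>oai:repository.example.org:1234/5</identifier></header>
      <metadata>
        <oai_dc:dc xmlns:oai_dc="http://www.openarchives.org/OAI/2.0/oai_dc/"
                   xmlns:dc="http://purl.org/dc/elements/1.1/">
          <dc:title>An Example Preprint</dc:title>
          <dc:creator>Researcher, A.</dc:creator>
          <dc:identifier>http://repository.example.org/handle/1234/5</dc:identifier>
        </oai_dc:dc>
      </metadata>
    </record>
  </ListRecords>
</OAI-PMH>
"""


def extract_records(xml_text: str) -> list:
    """Pull title, creators and URL out of each Dublin Core record."""
    root = ET.fromstring(xml_text.strip())
    records = []
    for rec in root.findall(".//oai:record", NS):
        dc = rec.find("oai:metadata/oai_dc:dc", NS)
        if dc is None:  # e.g. a deleted record with no metadata
            continue
        records.append({
            "title": dc.findtext("dc:title", default="", namespaces=NS),
            "creators": [e.text for e in dc.findall("dc:creator", NS) if e.text],
            "url": dc.findtext("dc:identifier", default="", namespaces=NS),
        })
    return records


def to_html_list(records: list) -> str:
    """Render the records as a simple, crawlable HTML list."""
    items = []
    for r in records:
        link = '<a href="{}">{}</a>'.format(
            html.escape(r["url"], quote=True), html.escape(r["title"]))
        names = html.escape("; ".join(r["creators"]))
        items.append("<li>{} ({})</li>".format(link, names))
    return "<ul>\n" + "\n".join(items) + "\n</ul>"
```

Calling `to_html_list(extract_records(SAMPLE_RESPONSE))` yields a one-item list linking to the sample record.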

One project within the programme will consider expertise profiling. Many universities have expertise databases, which are available to the media or others. At the same time, some institutions are experimenting with academic staff 'home pages' in institutional repositories. University managements are interested in these services as ways of showcasing research profiles and strengths, and demonstrating knowledge transfer capability and practice. This information is useful to universities in their relationships with the media, funding agencies, their own governance bodies, and other organisations. What are the ways in which universities are disclosing academic staff expertise and productivity? Do they intersect with existing library services? In some institutions, some of these services are provided by third parties. What are the advantages and disadvantages of this approach?

Another project will look at workflow within research output administration. What types of workflow are emerging to support research administration? Typically, libraries running research repositories are providing data which is amalgamated with financial data (on research grant income) and headcount data (on numbers of research staff and students) to compose discipline-specific profiles at varying degrees of granularity. Are models for efficient data-merging yet in evidence? Are third-party system vendors responding to this need? Can best practice approaches be identified and promulgated? What models have emerged or are emerging in the relationship between institutional repositories and the managed publications lists that are required to drive assessment inputs? Is there any evidence of best practice?

With both expertise profiling and research output administration, we anticipate an initial survey phase which identifies the state of the art against a defined set of activities. A Working Group will be convened to set and direct the survey. It will include experts in research repository developments from several different territorial and disciplinary cultures, together with academic staff and university research administration representatives. This Group will meet at the conclusion of the survey phase to consider existing best practice, required standards, and recommended new functionality. Their conclusions will lead to a report which will be promoted widely within the research library, publication and assessment communities. This may then lead to more targeted pieces of work based on the intersection of Partner interests and our findings.

Finally, we will also focus upon 'search engine optimisation of research outputs'. In an environment of rankings, and where Web visibility can enhance reputation, individual academic staff, institutions, departments and research groups are interested in good practice. Some now have guidelines for how they should be named and credited in academic publications, so that they can be represented as fully and consistently as possible in citation analyses. Research outputs held by institutional repositories generally conform to well-supported bibliographic networked data standards (e.g. Dublin Core, OAI-PMH) but not to the degree of standardisation which is required in a non-distributed bibliographic system (e.g. on terminology use and authority files). What steps are institutions taking to promote the discoverability of their research outputs on the Web? In the context of providing content which is tuned to optimise discovery by third-party search engines, what is the minimum platform investment which research libraries need to make?

Models have been proposed to encourage quality thresholds for metadata in institutional repositories to be achieved via co-operative metadata approaches. What is the value of a more highly regulated co-operative approach as opposed to the lesser regulated approach of producing more variable metadata and trusting to third-party search engines such as Google to perform effectively? How closely related are enhanced metadata quality standards and search engine optimisation in the field of research outputs? This project is likely to take the form of an international conference of designers, developers and managers of bibliographic databases and digital repositories, together with information scientists. An agenda will be set to allow presentations of the state of the art in research assessment policy regimes, and in database and repository functionality, leading on to an attempt to record consensus and extrapolate common requirements for database and repository design.

Strategic Guidance for the Sector

Programs and Research is working for the research information system as a whole. The focus of its staff across both the Programs and Research teams is on system-wide efficiencies, delivered from a deep understanding of the Web environment which now provides the medium for the conduct of research, and the discovery, delivery and preservation of its outputs. We work primarily with our individual Partner libraries from many of the top universities, national institutions, museums and archives around the world – many of which are in the UK. In addition, we recognise the strong roles of national funding agencies and library and research consortia within many European countries, and across Europe as a whole. We are keen to work strategically with these groups in order to pursue common agendas which will improve the research library sector performance for the good of researchers and research information users throughout the world.

The research library community does not have the financial muscle of Google or Microsoft – not even if its financial resources are pooled. But it does have a Web-scale bibliographic resource in WorldCat. For libraries whose records are fully represented in it, there are opportunities to exploit that scale and benefit from network effects which will ensure that their resources are discovered and used not only by their own constituents, but by the vast numbers of hidden users across the world whose own research can benefit from access to resources which were previously unavailable to them. And as it grows ever larger, so its capacity to improve the quality of metadata-based research services in general is increased. The Programs and Research Team is acutely conscious of the need to make the data in this massive, co-operatively created Web-scale resource work ever harder for its builders and their users. Research libraries have indicated their commitment to co-operation for decades, and they have realised the benefits of it from interlending and shared cataloguing. But the challenges presented by the Web environment mean that co-operation which extends only to interlending and shared cataloguing is not enough. Being awake in a Web world requires a much deeper commitment to the power of co-operation.

References

1. Ranganathan, S.R. (1931) The five laws of library science, Madras Library Association

2. Haque, Umair (Tuesday November 5, 2005) 'The attention economy' Bubblegeneration blog http://www.bubblegeneration.com/2005/11/attention-economy-across-consumer.cfm

3. Anderson, Chris (2004) 'The long tail' Wired 12 (10) http://www.wired.com/wired/archive/12.10/tail.html

4. WorldCat Identities http://orlabs.oclc.org/Identities/

5. OCLC WorldMap http://www.oclc.org/research/projects/worldmap/default.htm

6. Dempsey, Lorcan (February 2006) 'The (Digital) Library Environment: Ten Years After' Ariadne, Issue 46 http://www.ariadne.ac.uk/issue46/dempsey/