The Potential of Learning Analytics and Big Data

Patricia Charlton, Manolis Mavrikis and Demetra Katsifli discuss how the emerging trend of learning analytics and big data can support and empower learning and teaching.

‘Not everything that can be counted counts, and not everything that counts can be counted.’ Attributed to Albert Einstein

In the last decade we have had access to data that opens up a new world of potential evidence ranging from indicating how children might learn their first word to the use of millions of mathematical models to predict outbreaks of flu. We explore the potential impact of learning analytics and big data for the future of learning and teaching. Essentially, it is in our hands to make constructive and effective use of the data around us to provide useful and significant insights into learning and teaching.

Learning Analytics and Big Data

The first international conference on Learning Analytics and Knowledge was held in 2011 in Banff, Alberta [1]. It defined Learning Analytics as “the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimising learning and the environments in which it occurs”.

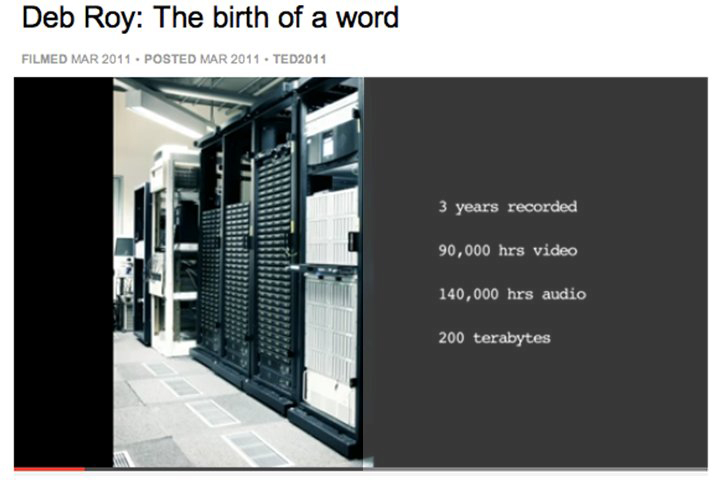

So what has changed in education? Hasn’t learning analytics been part of teaching since teaching began? The digital age has brought exponential growth in data and, with it, the potential to analyse data patterns to help identify factors that may improve learners’ success. Gaining access to data is no longer the problem; the challenge lies in determining which data are significant and why. Many data mining tools are available to help with the analysis, but in the past they were targeted at structured data. Today so much of our data come in unstructured or semi-structured form, yet they may still be valuable in understanding more about our learners. For example, Deb Roy [2] recorded his child’s early life to trace the development of the child’s first word. Figure 1 shows the amount of data captured and analysed, which provides a visual narrative of learning as it takes place.

Figure 1: The data captured to see the birth of a word in action

The opportunity to record data visually means that a narrative of how language learning takes place in early development can be analysed. But the scale of data needed to observe the development of a single word (a huge step for a baby or toddler and for the family) is immense. The world of technology has changed since the turn of the century; now [3]:

- 80 per cent of the world’s information is unstructured;

- Unstructured data are growing at 15 times the rate of structured information;

- Raw computational power is growing at such an enormous rate that we almost have a supercomputer in our hands; and

- Access to information is available to all.

In a BBC Radio 4 programme [4], Kenneth Cukier, discussing Big Data, stated: “Let the data speak for itself.” However, as Dr Tiffany Jenkins observed on the same programme, “more data does not mean more knowledge” [4].

Cukier describes how Google predicted flu outbreaks in the US. He explains that Google ran a correlation experiment over millions of search terms, testing them with a very large number of mathematical models:

‘…what they were able to find was they were able to correlate in a very short period of time where flu outbreaks were going to be in quasi real time’.

Cukier argues that, because of the very large set of variables used in the era of Big Data, we as humans must give up a degree of understanding of causation: we may not know how a prediction, or a new insight drawn from the data, was arrived at. Computers can relentlessly trawl through data, but the lens used to detect patterns in those data is a human invention. As Marcus du Sautoy noted during the same Radio 4 programme [4], mathematics is used to find patterns, and we use mathematics every day. However, to obtain information that is really useful to us, we need to know what we are looking for in the sea of data. He gave the example of the Large Hadron Collider, where huge amounts of data were generated as protons were smashed together in the search for the Higgs particle, whose existence confirms the mechanism by which fundamental particles such as electrons and quarks acquire mass [5]. However, the Higgs particle is too unstable to be observed directly, so researchers had to look for the evidence it might leave behind. Searching for evidence in a mass of data requires knowing what kind of evidence is needed, and that depends on knowledge of the domain and on understanding and interpreting the patterns we see. So how can we acquire an understanding of how to handle Big Data and of its possible value to learning analytics?

What Is Big Data?

‘Big Data applies to information that can’t be processed or analyzed using traditional processes or tools. Increasingly, organizations today are facing more and more Big Data challenges. They have access to a wealth of information, but they don’t know how to get value out of it because it is sitting in its most raw form or in a semistructured or unstructured format; and as a result, they don’t even know whether it’s worth keeping (or even able to keep it for that matter).’[3, p.3]

Three characteristics define Big Data: volume, variety and velocity. Big Data approaches are well suited to information challenges that do not fit naturally within a traditional relational database. According to a report by Emicien [6], the major shortcomings of existing tools today are:

- Search helps you discover insights you already know, yet it does not help you discover things of which you are completely unaware.

- Query-based tools are time-consuming because search-based approaches require a virtually infinite number of queries.

- Statistical methods are largely limited to numerical data; over 85% of data are unstructured.

Learning Analytics and Educational Data Mining

So How Might We Work with Big Data?

We need new models that do not treat data as purely numerical, that are time-efficient (because the relevance of the available data is time-dependent) and that can represent the data in ways that inform us of potential new discoveries. The idea that systems can correlate millions of terms and apply millions of mathematical models within a meaningful time span to provide potentially relevant predictions places us centre stage in a further dimension of Zuboff’s Smart Machine [7]: ‘Knowledge and Technology are changing so fast […] What will happen to us?’ [7, p.3]. Many of those involved in delivering teaching and learning today may sympathise with this statement. Many tools and applications are available, as are online data about students’ achievements, learning experiences and the types of teaching resources available. The analytic tools currently used to analyse these data from an educational perspective fall under two key research perspectives:

- educational data mining tools; and

- learning analytics.

Although related and sharing similar visions, they address different needs and provide different but complementary insights into working with digital data about the world of education.

The Educational Data-mining Lens

‘Educational Data Mining is an emerging discipline, concerned with developing methods for exploring the unique types of data that come from educational settings, and using those methods to better understand students, and the settings which they learn in.’ [8]

Working with any data requires an idea: a hypothesis and a view of what kinds of patterns might be of interest. For teaching and learning, this means understanding the critical factors that influence the teaching and learning experience. The tools that help us navigate the data are data mining tools. As indicated above, not all of the classic methods used previously will make sense for Big Data.

Some examples of the types of applications that can result from Educational Data Mining are [9]:

- Analysis and visualisation of data

- Providing feedback for supporting instructors

- Recommendations for students

- Predicting student performance

- Student modelling

- Detecting undesirable student behaviours

- Grouping students

- Social network analysis

- Developing concept maps

- Constructing courseware

- Planning and scheduling
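As an illustration of one item in this list, grouping students, the sketch below runs a minimal k-means clustering (a standard technique, chosen here for simplicity rather than taken from any cited system) over two hypothetical features: average assignment score and weekly hours spent on online materials. The student data are invented, the features are not rescaled, and the first k points serve as initial centroids; in practice a library implementation such as scikit-learn’s `KMeans`, applied to scaled features, would be the usual choice.

```python
import math


def kmeans(points: list[tuple[float, ...]], k: int, iterations: int = 20) -> list[int]:
    """Minimal k-means: return a cluster label for each point."""
    # Deterministic start for reproducibility: the first k points are the initial centroids
    centroids = [list(p) for p in points[:k]]
    labels: list[int] = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster with the nearest centroid
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break  # assignments are stable, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels


# Hypothetical students: (average assignment score %, hours per week on online materials)
students = {"A": (85, 6), "B": (88, 7), "C": (45, 1), "D": (50, 2), "E": (70, 4), "F": (42, 1.5)}
names = list(students)
labels = kmeans([students[n] for n in names], k=2)

groups: dict[int, list[str]] = {}
for name, label in zip(names, labels):
    groups.setdefault(label, []).append(name)
print(groups)
```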

Both the Learning Analytics and the Educational Data Mining communities aim to improve the quality of analysis of educational data, to support both basic research and practice in education.

However, as Siemens and Baker [10] point out, the approaches differ because their focus and roots lie in different places. Comparing them can help us better understand the potential role of learning analytics. A brief comparison of the two fields is given in Table 1.

| | Learning Analytics | Educational Data Mining |
|---|---|---|
| Discovery | Leveraging human judgment is key; automated discovery is a tool to accomplish this goal | Automated discovery is key; leveraging human judgment is a tool to accomplish this goal |
| Reduction & Holism | Stronger emphasis on understanding systems as wholes, in their full complexity | Stronger emphasis on reducing to components and analysing individual components and relationships between them |
| Origins | Has stronger origins in semantic web, “intelligent curriculum,” outcome prediction, and systemic interventions | Has strong origins in educational software and student modelling, with a significant community in predicting course outcomes |
| Adaptation & Personalisation | Greater focus on informing and empowering instructors and learners | Greater focus on automated adaptation (eg by the computer with no human in the loop) |
| Techniques & Methods | Social network analysis, sentiment analysis, influence analytics, discourse analysis, learner success prediction, concept analysis, sense-making models | Classification, clustering, Bayesian modelling, relationship mining, discovery with models, visualisation |

Table 1: A brief comparison of the two fields (courtesy of George Siemens and Ryan Baker [10])

Learning-analytics Lens and Big Data: From Theory to Practice

Below we consider some scenarios to explore the potential, approaches, impact and need for learning analytics in the education context.

Scenario 1: Assume All Students at Risk at All Times

If students are at risk of failing at all times, is it still useful to predict which students are at higher risk than others? Teachers’ insight into their past students’ experiences is important in identifying how to provide the right type of scaffolding at the right time. How, then, could we exploit the knowledge and experience that teachers have gained from teaching similar cohorts in the past?

We could automate the testing of certain thresholds or hypotheses that teachers deem to be important indicators of learning at key times during the year. One example is: ‘if a student has not achieved an average of 45% or above on three assessed assignments by mid-January, the student is likely to fail the year’; another is: ‘if, in addition to not achieving a minimum of 45%, the student has not made the due fee payment, he/she is at risk of dropping out’.

Such thresholds are identified from patterns that academics have observed when teaching similar student cohorts, albeit without using complex statistical methods. At the very least, these hypotheses should be tested at key control points, so that teachers’ relevant experience feeds into the evaluation of student performance.
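The two example hypotheses in Scenario 1 can be automated as simple rules evaluated at a control point. This is a minimal sketch: the `Student` record, the 15 January control date and the treatment of students with fewer than three marked assignments are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Student:
    name: str
    assignment_scores: list[float]  # percentages on assessed assignments, in submission order
    fee_paid: bool


def check_thresholds(student: Student, today: date, control_point: date,
                     min_average: float = 45.0) -> list[str]:
    """Apply the two teacher-defined hypotheses from Scenario 1 at a control point."""
    if today < control_point:
        return []  # the rules only apply once the control point has been reached
    first_three = student.assignment_scores[:3]
    # Assumption: fewer than three marked assignments counts as not reaching the threshold
    below_threshold = len(first_three) < 3 or sum(first_three) / 3 < min_average
    flags = []
    if below_threshold:
        flags.append("likely to fail the year")
        if not student.fee_paid:
            flags.append("at risk of dropping out")
    return flags


# Hypothetical control point and students
mid_january = date(2013, 1, 15)
cohort = [
    Student("Ana", [40, 50, 38], fee_paid=False),
    Student("Ben", [60, 55, 70], fee_paid=False),
    Student("Cy", [50, 60], fee_paid=True),
]
for s in cohort:
    print(s.name, check_thresholds(s, date(2013, 1, 16), mid_january))
```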

Scenario 2: Assume Students Present Different Levels of Risk at Different Times

More complex patterns of student behaviour where a multitude of factors come to bear are not easy to detect or to express as simple hypotheses. Teachers and academic support staff will have a good sense of what factors influence student performance, for example, past academic achievements, number of visits to online course materials, number of hours per week in a paid job, hours per day spent travelling, number or pattern of free periods in student timetables, demographics, financial support and so on. Guided by such clues, modelling experts will test the historic data looking for inter-relationships and the significance of each of the factors suspected to be influential. Manipulating data structures by categorising or grouping data or handling missing data items is all part of the statistical analysis, with the aim of creating a predictive model that can run automatically. Of course, it calls for expertise in multidimensional analysis and there are plenty of people working in and for education who are qualified to take this on.

Such analysis could yield seven to ten (or more) significant factors that have influenced student success for a given cohort in the past, and these factors will form the basis of a predictive risk assessment model for the current student cohort. The model will produce a predictive score for each student and display the results in easily interpreted categories of risk: for example, at high risk, at risk or safe; or even red, amber and green.

In principle, this approach is the most robust as it incorporates the widest possible set of considerations when evaluating student performance and the risk of failure. Moreover, once the model is created, it can be run daily to provide the most up-to-date outlook for each student.
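A predictive score of the kind described in Scenario 2 is often produced by a logistic model: a weighted sum of factors passed through the logistic function, then cut into bands. The sketch below assumes such a model; the four factors, their weights, the intercept and the 0.3/0.6 cut-offs are all hypothetical placeholders for values that would be estimated from historic cohort data.

```python
import math

# Hypothetical weights and intercept; a real model would estimate these from historic data
WEIGHTS = {
    "prior_attainment": -3.0,    # prior grades rescaled to 0..1; higher lowers risk
    "weekly_vle_visits": -0.15,  # visits per week to online course materials
    "paid_job_hours": 0.06,      # hours per week in a paid job
    "daily_travel_hours": 0.4,   # hours per day spent travelling
}
INTERCEPT = 1.0


def risk_probability(factors: dict[str, float]) -> float:
    """Logistic model: weighted sum of factors squashed into a 0..1 risk score."""
    z = INTERCEPT + sum(weight * factors[name] for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def risk_band(p: float) -> str:
    """Map a score to red/amber/green using illustrative cut-offs."""
    if p >= 0.6:
        return "red (high risk)"
    if p >= 0.3:
        return "amber (at risk)"
    return "green (safe)"


# A daily run over a hypothetical cohort
cohort = {
    "Ana": {"prior_attainment": 0.9, "weekly_vle_visits": 10, "paid_job_hours": 0, "daily_travel_hours": 0.5},
    "Ben": {"prior_attainment": 0.6, "weekly_vle_visits": 4, "paid_job_hours": 10, "daily_travel_hours": 1},
    "Cy": {"prior_attainment": 0.3, "weekly_vle_visits": 1, "paid_job_hours": 25, "daily_travel_hours": 2},
}
for name, factors in cohort.items():
    p = risk_probability(factors)
    print(f"{name}: {p:.2f} -> {risk_band(p)}")
```

Because the model is just a function of the current factor values, re-running it each night over refreshed data gives the daily outlook described above.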

Ideally, the predictive risk assessment model should also be able to execute discrete tests of different hypotheses at given times in the year (as described in Scenario 1 above), especially where there are gaps in the associated historic data.

Scenario 3: Troubleshooting via a Single Piece of Data vs Big Data

Assume that we have a robust predictive risk assessment model (with 85% to 95% accuracy). Is there any point in also considering other types of observed student behaviour? Take a scenario where the predictive model ran overnight and a student who was predicted to be ‘safe’ was seen later that day by the teacher in class with his head down on the desk for the second time that week. It can be argued that, ideally, the predictive model should run alongside an online incident management process that handles behavioural issues that have actually been observed (as opposed to predicted risk). The number and types of incidents logged over time should also be added to the pool of learning analytics and examined for their contribution to student learning.
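Running an incident log alongside the overnight prediction can be sketched as follows. The `IncidentLog` class, the seven-day window and the two-incident threshold are hypothetical; the point is only that observed behaviour can contradict a ‘safe’ prediction and prompt a review. Students already rated at risk are assumed to be followed up through the model’s own alerts, so the check only looks for ‘safe’ predictions that observation contradicts.

```python
from datetime import date, timedelta


class IncidentLog:
    """Minimal store of observed (not predicted) behavioural incidents per student."""

    def __init__(self) -> None:
        self._incidents: dict[str, list[tuple[date, str]]] = {}

    def record(self, student: str, when: date, description: str) -> None:
        self._incidents.setdefault(student, []).append((when, description))

    def count_recent(self, student: str, today: date, days: int = 7) -> int:
        """Count incidents in the `days`-day window ending today (inclusive)."""
        start = today - timedelta(days=days)
        return sum(1 for when, _ in self._incidents.get(student, []) if start < when <= today)


def needs_review(predicted_band: str, log: IncidentLog, student: str, today: date,
                 threshold: int = 2) -> bool:
    """Flag a student the model rated 'safe' who has repeated observed incidents this week."""
    return predicted_band == "safe" and log.count_recent(student, today) >= threshold


log = IncidentLog()
log.record("Sam", date(2013, 3, 4), "head down on desk in class")
log.record("Sam", date(2013, 3, 6), "head down on desk in class")
print(needs_review("safe", log, "Sam", date(2013, 3, 6)))
```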

Impact of Predictive Learning Analytics

Like all other models in all walks of life, the impact of predictive analytics will be negligible if the right people are not (a) examining the results of the model on a regular basis and (b) taking action to mitigate predicted risk. Science and technology have already come together to develop tools to exploit learning analytics and big data so as to help remove barriers to learning and to increase attainment. There are tools that automate all the methods outlined in the three scenarios above, namely:

- testing of specific hypotheses on student performance

- predictive risk assessment using multiple data points

- management of individual student observed behaviours

Educators who work with this level of precision will have the most power to influence student learning and to shape learning and teaching methods for future students. Policy makers may also be interested in this kind of information at a large scale. Such information is becoming more accessible, thanks both to connectivity and to the continuous assessment now possible, directly or indirectly, through the traces that students leave behind when interacting with educational software.

Systems Using Analytics and Data for Teaching and Learning

There are many tools and platforms available for teachers to use. In this report we analyse a selection of the tools and platforms that were presented and demonstrated at the London Knowledge Lab [11].

Jenzabar

Jenzabar’s Retention Management platform [12] provides analytic tools that build an all-inclusive student profile, showing instantly whether a student is safe, at risk, or at high risk of dropping out or failing. To develop the model, Jenzabar first analyses an institution’s existing information, going back as far as three years, to determine trends and significant factors affecting retention and graduation rates for a specific cohort of students. Retention factors cover all aspects of a student’s profile, past and present: academic, social and financial. Any number of ‘suspect’ factors can be tested for their impact on retention and graduation, but experience shows that only about 12 factors are significant for any given cohort. Once the significant factors have been identified mathematically, each is assigned a weight to create the Risk Assessment model.
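Jenzabar’s actual statistical method is not described here. As a deliberately simple stand-in for screening ‘suspect’ factors, the sketch below compares the retention rate of past students with and without each binary factor, keeps factors whose gap exceeds a threshold, and returns the gap as a crude weight. The factor names, the records and the 0.10 threshold are hypothetical; a real analysis would test significance properly (for example with logistic regression) and account for interactions between factors.

```python
def screen_factors(records: list[dict[str, bool]], candidates: list[str],
                   min_gap: float = 0.10) -> dict[str, float]:
    """Keep candidate factors whose presence shifts the retention rate by at least min_gap.

    Returns {factor: gap}; a positive gap means students with the factor
    were retained less often, so the factor raises risk.
    """
    selected = {}
    for factor in candidates:
        with_factor = [r["retained"] for r in records if r[factor]]
        without_factor = [r["retained"] for r in records if not r[factor]]
        if not with_factor or not without_factor:
            continue  # the factor is constant in this cohort, so there is nothing to compare
        gap = sum(without_factor) / len(without_factor) - sum(with_factor) / len(with_factor)
        if abs(gap) >= min_gap:
            selected[factor] = gap
    return selected


# Hypothetical historic records for one cohort
history = [
    {"long_commute": True, "works_20h_plus": True, "first_generation": False, "retained": False},
    {"long_commute": True, "works_20h_plus": False, "first_generation": True, "retained": False},
    {"long_commute": True, "works_20h_plus": True, "first_generation": False, "retained": True},
    {"long_commute": False, "works_20h_plus": True, "first_generation": True, "retained": True},
    {"long_commute": False, "works_20h_plus": False, "first_generation": False, "retained": True},
    {"long_commute": False, "works_20h_plus": False, "first_generation": True, "retained": True},
]
weights = screen_factors(history, ["long_commute", "works_20h_plus", "first_generation"])
print(weights)
```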

The analytic tools (called FinishLine and supplied by Jenzabar) are currently used at approximately 70 HE and FE institutions, mainly in the US. As well as predictive modelling, FinishLine provides easy management of alerts and interventions. Examples of cohorts managed via FinishLine include non-traditional students (with factors such as number of years away from study, hours per week in a job, number of small children and length of commute), transfer students, distance learning students, international students, first-years, second-years and final-year students.

Teaching and Learning Analytics and MOOCs

The MOOC (Massive Open Online Course) model is closest to IOE (Institute of Education) models for certain kinds of CPD course: expert input plus significant peer interaction, with no individual guidance or assessment. The general expectation is that the MOOC model could transform the nature of HE. The visual analysis provided by the Course Resource Appraisal Model (CRAM) [13] offers insight into how the teaching and learning experience is affected as we move from face-to-face to blended learning and to larger-scale delivery such as MOOCs. Understanding the impact of scaling up on both teaching and learning requires bringing together pedagogical understanding with students’ learning experiences. Visual representations of the impact of scaling up, including the other resources used in delivering teaching and learning, give teachers and learning technologists explicit insight into design changes.

Maths-Whizz

The Maths-Whizz [14] proprietary online tutoring system continuously assesses individual students’ levels in the different topics of mathematics and then identifies an overall “maths age” for each student. This is useful as a relative measure for comparing the profiles and abilities of students within a class in an easy and digestible manner, and it enables teachers to identify areas needing attention both for the class as a whole and for individual students. This capability is currently being expanded to give ministries of education the ability to compare different regions or schools in terms of both maths ability and progress rate, raising the potential to evaluate the impact of their various educational initiatives on the learning of mathematics. Over 100,000 students in multiple countries are currently being tutored virtually by Maths-Whizz, providing a significant amount of data for useful analysis and value-added conclusions.
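How Maths-Whizz computes its “maths age” is proprietary and not described here. Purely to illustrate how per-topic assessment can become a relative measure, the sketch below assumes each student has a level per topic expressed in years, takes the overall maths age as their mean, compares each student with the class median, and computes a progress rate between two assessments. All function names, data and formulas are hypothetical.

```python
from statistics import mean, median


def maths_age(topic_levels: dict[str, float]) -> float:
    """Hypothetical overall 'maths age': the mean of per-topic levels expressed in years."""
    return mean(topic_levels.values())


def relative_to_class(students: dict[str, dict[str, float]]) -> dict[str, float]:
    """Each student's maths age minus the class median (positive = ahead of peers)."""
    ages = {name: maths_age(levels) for name, levels in students.items()}
    class_median = median(ages.values())
    return {name: round(age - class_median, 2) for name, age in ages.items()}


def progress_rate(age_before: float, age_after: float, months_elapsed: float) -> float:
    """Years of maths age gained per calendar year (1.0 = one year's progress per year)."""
    return (age_after - age_before) / (months_elapsed / 12)


# Hypothetical class with per-topic levels in years
class_levels = {
    "Ana": {"number": 9.0, "geometry": 8.0},
    "Ben": {"number": 10.0, "geometry": 10.0},
    "Cy": {"number": 8.0, "geometry": 7.0},
}
print(relative_to_class(class_levels))
print(progress_rate(8.5, 9.0, 6))
```

The same two functions could be aggregated per school or region to compare ability and progress rate at a larger scale.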

BlikBook

BlikBook [15] uses analytics to understand class engagement and drive better learning outcomes. It is a course engagement platform for lecturers and students in higher education. It helps students find the academic content they need, whilst helping lecturers save time supporting students outside the lecture theatre. The platform was made publicly available in September 2012 and is now in use at over a third of UK Higher Education institutions. Students using the platform rate their lecturer 20-40% higher in answers to National Student Survey questions, and lecturers report increased academic performance, particularly among students who tend to perform below the average.

Teacher Assistance Tools in an Exploratory Learning Environment

The MiGen Project [16] has developed an intelligent exploratory learning environment, the eXpresser, that aims to support 11-14-year-old students’ development of algebraic ways of thinking. Given the open-ended nature of the tasks that students undertake in eXpresser, teachers can only be aware of what a small number of students are doing at any one time as they walk around the classroom. The computer screens of students who are not in their immediate vicinity are typically not visible to them, and these students may not be engaged in productive construction. It is hard for teachers to know which students are making progress, who is off-task, and who is in difficulty and needs additional support. Even for students whose screens are visible, it may be hard for the teacher to understand the process by which a student has arrived at the current state of their construction, or the recent feedback the student has received from eXpresser, and so to provide appropriate guidance. A suite of visualisation and notification tools, referred to as the Teacher Assistance tools, aims to help teachers focus their attention across the whole class as students work with eXpresser. The interventions supported by the tools help students reflect on their work. The teacher is also made aware of the interventions, provided as feedback and suggestions to students, and can use this knowledge when setting new goals and giving further feedback.
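The MiGen Teacher Assistance tools rely on rule-based analysis and problem-specific techniques (see Table 2) whose details are not given here. The sketch below only illustrates the general idea of turning activity logs into a class-wide overview; the event kinds, the 180-second idle limit and the three-failure rule are hypothetical choices, not eXpresser’s.

```python
from dataclasses import dataclass


@dataclass
class Event:
    time: float  # seconds since the start of the lesson
    kind: str    # hypothetical kinds: "edit", "check_failed", "check_passed"


def classify(events: list[Event], now: float, idle_limit: float = 180,
             failure_limit: int = 3) -> str:
    """Summarise one student's recent activity for the teacher. Assumes events are time-sorted."""
    if not events or now - events[-1].time > idle_limit:
        return "inactive: may be off-task"
    # The most recent task checks, up to failure_limit of them
    checks = [e.kind for e in events if e.kind.startswith("check_")][-failure_limit:]
    if len(checks) == failure_limit and all(k == "check_failed" for k in checks):
        return "in difficulty: repeated failed attempts"
    if "check_passed" in checks:
        return "making progress"
    return "working"


def class_overview(log: dict[str, list[Event]], now: float) -> dict[str, str]:
    return {student: classify(events, now) for student, events in log.items()}


lesson_log = {
    "Ana": [Event(10, "edit"), Event(20, "check_failed"), Event(40, "check_failed"), Event(60, "check_failed")],
    "Ben": [Event(10, "edit")],
    "Cy": [Event(100, "check_failed"), Event(150, "check_passed")],
    "Dee": [Event(200, "edit")],
}
for student, status in class_overview(lesson_log, now=200).items():
    print(student, "->", status)
```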

| Tools and Platforms | Learning Analytics Perspective | Educational Data Mining Perspective | Teaching Analytics Perspective |
|---|---|---|---|
| Jenzabar | Strong analytics overall and a systemic approach using large-scale data from across the organisation. | As well as learning analytics, statistical models are used to create predictive analysis. | The whole organisation is involved in understanding the behaviour view. Although no explicit pedagogical framework is included, teachers and lecturers can access and input into the student model. The tool provides visual representations to lecturers and support staff to show which students need support and why. |
| CRAM (Course Resource Appraisal Model) | Provides visual representation of teaching | Classification system and relationships to support activities across teaching and learning analysis | Automated visual models of the impact on teaching and learning. |
| Maths-Whizz | Domain-specific analysis that enables different types of insight into students’ learning and relative learning of maths | Uses clustering models of static relative learning points to determine maths age, and contextual data for relative improvement analysis | Uses a visual model to provide information for teachers to use as part of their teaching. |
| BlikBook | Social peer-group approach for exchange and group problem solving | Student modelling and profiles for community use | Student feedback to teachers and more peer-led student groups |
| MiGen | Social grouping and outcome predictions of students. | Rule-based analysis and various problem-specific computational techniques analyse task progression to provide feedback to the teacher and students. | Constant visual analytics of teaching and learning for teachers to instigate immediate intervention on task progression and collaborative activities. |

Table 2: Tools and their data

One critical success factor of all these tools has been the engagement with teachers. While they have all taken a view on learning needs and experience of the students, it is often the teacher that takes the lead role in determining the contextual factors that influence the students’ learning. This is possible when the teacher or lecturer participates in the design process of the tool and contributes to how the tool will be used. It is only in the ownership and interpretation of the ‘big data’ by those that must work with it that significant impact and value are realised.

Discussion

How might we make use of learning analytics and big data? Big data and learning analytics: friend or foe? How do big data and learning analytics influence education?

Decision Making for Teachers and Policy Makers

The proliferation of educational technology in classrooms is both a blessing and a curse for teachers. Among other factors, and related to the discussion here, teachers need to be able to monitor several students simultaneously and to have meaningful reports about their learning. Turning the vast quantity of traces that students leave behind when interacting with computers into meaningful and informative ‘indicators’ is therefore an effort shared among many research and development groups [17]. Examples that are informative and beneficial to learning range from simple analyses of common errors on questions, or of a lack of resource use, to more complex patterns of students’ interactions [18]. The analysis can include recommendations about which students to group together. It is also useful in helping teachers to see the potential impact of resources and of new ways of delivering teaching and learning [19][20]. When teachers, head teachers and policy makers are faced with many constraints, data can provide them with the information they need to make better decisions for the learners of today and the future. How might this be done? In what form do these data best serve decision makers? How might we interrogate the data to understand the key concepts that may underpin the teaching and learning experience? Or are we really in the realm that Cukier refers to as ‘letting the data speak for itself’?
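The simple end of that range, common errors on questions and suggested groupings, can be sketched in a few lines. The response format `(student, question, answer, is_correct)` and the rule of grouping students who gave the same wrong answer are assumptions for illustration, not the method of any of the groups cited.

```python
from collections import Counter, defaultdict


def common_errors(responses: list[tuple[str, str, str, bool]], top_n: int = 3) -> dict[str, list[tuple[str, int]]]:
    """Most frequent wrong answers for each question."""
    wrong = defaultdict(Counter)  # question -> Counter of wrong answers
    for _student, question, answer, correct in responses:
        if not correct:
            wrong[question][answer] += 1
    return {q: counts.most_common(top_n) for q, counts in wrong.items()}


def groups_by_shared_error(responses: list[tuple[str, str, str, bool]], question: str) -> dict[str, list[str]]:
    """Suggest grouping students who gave the same wrong answer to one question."""
    groups = defaultdict(list)  # wrong answer -> students who gave it
    for student, q, answer, correct in responses:
        if q == question and not correct:
            groups[answer].append(student)
    return dict(groups)


# Hypothetical multiple-choice responses
responses = [
    ("Ana", "Q1", "A", False),
    ("Ben", "Q1", "A", False),
    ("Cy", "Q1", "B", False),
    ("Dee", "Q1", "C", True),
    ("Ana", "Q2", "D", True),
    ("Ben", "Q2", "B", False),
]
print(common_errors(responses))
print(groups_by_shared_error(responses, "Q1"))
```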

Students, Their Own Data and Own Learning

While reflection on one’s learning is critical, how do we learn to reflect and make effective decisions about improving our learning? Personalised feedback is extremely useful, but for the teacher it is time-consuming and cannot always be provided in a timely manner. Personal analytics that identify a student’s common errors in understanding, or less-than-appropriate techniques, can give students a context in which to reflect on their own development and address improvements in a timely manner. How might we best present such feedback and information to students? Several systems and Web sites attempt this. The most recent hype is around the analytics provided in the Khan Academy, a Web site with open resources for learning mathematics and science through a series of online videos and interactive activities. Detailed bar graphs show students’ progress, histograms present the problems students encountered with particular activities, and a tree structure represents the knowledge. Can the use of various feedback cases and contextual knowledge help to support such feedback? How can teachers leverage such knowledge to provide appropriate and effective feedback efficiently? How can the data help students help each other develop further understanding and improve their learning experience?

Data, Research Questions and the Potential of Data

Researchers collect and analyse various types of data to understand developments and gain insights. The research itself is driven by questions that seek to understand the context or the critical factors that affect teaching and learning. Sometimes these questions are based on observations, or on a kind of innate knowledge we might class as a hunch: a hypothesis that draws on knowledge and experience of the current context of teaching and learning, or of impacts and changes in society. Process and product data from both human-computer and computer-mediated human-human interactions provide unique opportunities for knowledge discovery through advanced data analysis and visualisation techniques. For example, Porayska-Pomsta et al [21] analysed patterns in computer-mediated student-tutor dialogues to develop machine-learned rules about tutors’ inferences concerning learners’ affective states and the actions that tutors take as a consequence. We can harness the power of data to better understand and assess learning in both technology-enhanced and even conventional contexts. Big Data can be found even in small-scale approaches, as the data collected from even a small series of interactions can be enough to provide useful insights for further analysis. With access to data and the opportunity to share them across domains, together with the variety of areas of learning, we are in a position to reflect on large-scale data and on patterns that may provide further insights into the correlations between the factors that are important for learning to take place and the critical indicators. While intuitively we may be able to state what these factors are, providing the evidence is difficult. There are so many variables that may influence the learner, the learning, the teacher and the teaching, so how might increasingly ubiquitous and pervasive learning research data be made available to teachers?
How can a broader audience have access to the data and be provided with the right contextual lens to interpret the data? Is there a right context? What is the best way for researchers to share their findings with teachers and learners? How can teachers and learners be included in the process and influence the process from research questions to analysis?

Systems Can Adapt, Automate and Inform of Change

Systems and tools can adapt to the context and the data by providing automated responses: for example, quiz assessments, analysis of interactions, whether or not online resources are used, and summaries of who is interacting more frequently than others and with whom. This has not been new since browsers made the Internet easy to access, but the lens through which we view data requires trust in the underlying rules and algorithms that give us access to them. For example, the browser adapts to our searches based on what it already knows about our usage. Trend analysis uses information from the past to offer potential insights into the future. For example, Intelligent Tutoring Systems may provide individualised feedback to students, but we now know that this feedback is used both to ‘game the system’ [22] and as a means of reflection [23]. Automating systems to adapt based on learning trends and factors is possible; for example, such data currently form part of the use of, and growing interest in, MOOCs (Massive Open Online Courses) [24]. When scaling up to a large student cohort, what happens to both teaching and learning? How should systems be managed? How can we help teachers and learners get the best out of such systems? Can knowledge profiling of critical factors help in interpreting the data presented and the automations in place? These are all questions that concern the fields of Educational Data Mining and Learning Analytics.
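A summary of who is interacting more frequently than others, and with whom, is a basic social network measure. The sketch below assumes interactions arrive as hypothetical `(sender, recipient)` pairs, for instance forum replies; it treats each pair as undirected, ignores self-replies, and counts interactions per person (a weighted degree) and per pair.

```python
from collections import Counter


def interaction_summary(messages: list[tuple[str, str]]):
    """Return (people ranked by interaction count, pairs ranked by interaction count)."""
    # frozenset makes ("Ana", "Ben") and ("Ben", "Ana") the same undirected pair
    pair_counts = Counter(frozenset((a, b)) for a, b in messages if a != b)
    activity = Counter()
    for pair, n in pair_counts.items():
        for person in pair:
            activity[person] += n  # weighted degree: total interactions involving this person
    return activity.most_common(), pair_counts.most_common()


# Hypothetical forum replies as (sender, recipient)
forum_replies = [("Ana", "Ben"), ("Ben", "Ana"), ("Ana", "Cy"), ("Dee", "Ana"), ("Ben", "Cy")]
most_active, strongest_pairs = interaction_summary(forum_replies)
print(most_active)
print(strongest_pairs[0])
```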

Conclusion

Critical to considering the data is how we interpret them, and our instinctive perspective still holds crucial value even when it is hard to articulate. Without experience of the context, data analysis may bring little value. In a recent seminar by Professor Diana Laurillard on understanding the impact of scaling up to large online courses, which investigated the move towards MOOCs, she reported a discussion with a lecturer who had been asked to assess the impact of scaling up: ‘The lecturer intuitively felt that the change would have an impact but was struggling to articulate in what way’. After further investigation, working with a teaching design tool (the Course Resource Appraisal Model, CRAM [13]) that provides a visual representation of, and feedback about, learning and teaching, the lecturer could see that there would be an impact on personalised learning (eg getting one-to-one feedback): as the course scaled up, one-to-one teaching opportunities diminished. Although tools and techniques have evolved substantially in the last few years, very important limitations still need to be addressed. As technology is rapidly adopted in schools and homes around the world, the educational sector runs the risk of ‘picking the low-hanging fruit’ of learning analytics: meaningless descriptive information or simple statistics that value what we can easily measure rather than measure what we value.

References

- 1st International Conference on Learning Analytics and Knowledge, 27 February - 1 March 2011, Banff, Alberta, Canada https://tekri.athabascau.ca/analytics/

- Deb Roy, Learning Your First Word, TED talk http://www.ted.com/talks/deb_roy_the_birth_of_a_word.html

- Chris Eaton, Dirk Deroos, Tom Deutsch, George Lapis & Paul Zikopoulos, “Understanding Big Data: Analytics for Enterprise Class Hadoop and Streaming Data”, p.XV. McGraw-Hill, 2012.

- BBC Radio 4, Start the Week, Big Data and Analytics, first broadcast 11 February 2013 http://www.bbc.co.uk/programmes/b01qhqfv

- Why do particles have mass? http://www.lhc.ac.uk/17738.aspx

- The Big Data Paradigm Shift: Insight Through Automation http://www.emicien.com/

- Zuboff, S. (1988). In the Age of the Smart Machine. New York: Basic Books.

- The International Educational Data Mining Society http://www.educationaldatamining.org/

- Romero, C. (2010). Educational Data Mining: A Review of the State of the Art. IEEE Transactions on Systems, Man, and Cybernetics, Part C: Applications and Reviews, November 2010.

- Siemens, G. & Baker, R. (2012). Learning Analytics and Educational Data Mining: Towards Communication and Collaboration. Learning Analytics and Knowledge 2012. Available in PDF format at http://users.wpi.edu/~rsbaker/LAKs%20reformatting%20v2.pdf

- LKLi (London Knowledge Lab Innovations) https://www.lkldev.ioe.ac.uk/lklinnovation/big-data-and-learning-analytics/

- Jenzabar, 2013 http://www.jenzabar.com/higher-ed-solutions/retention

- Laurillard, D. (2013). CRAM, Course Resource Appraisal Model https://www.lkldev.ioe.ac.uk/lklinnovation/big-data-and-learning-analytics/

- Maths-Whiz http://www.whizz.com/

- Blikbook http://www.blikbook.com/

- MiGen http://www.lkl.ac.uk/projects/migen/

- Gutierrez-Santos, S., Geraniou, E., Pearce-Lazard, D. & Poulovassilis, A. (2012). Architectural Design of Teacher Assistance Tools in an Exploratory Learning Environment for Algebraic Generalisation. IEEE Transactions on Learning Technologies, 5(4), pp. 366-376, October 2012. DOI: 10.1109/TLT.2012.19.

- Mavrikis, M. (2010). Modelling Student Interactions in Intelligent Learning Environments: Constructing Bayesian Networks from Data. International Journal on Artificial Intelligence Tools (IJAIT), 19(6): 733-753.

- Charlton, P., Magoulas, G. & Laurillard, D. (2012). Enabling Creative Learning Design through Semantic Web Technologies. Journal of Technology, Pedagogy and Education, 21, 231-253.

- Laurillard, D. (2012). Teaching as a Design Science: Building Pedagogical Patterns for Learning and Technology. New York, NY: Routledge.

- Porayska-Pomsta, K., Mavrikis, M. & Pain, H. (2008). Diagnosing and acting on student affect: the tutor’s perspective. User Modeling and User-Adapted Interaction, 18(1): 125-173.

- Baker, R.S., Corbett, A.T., Koedinger, K.R. & Wagner, A.Z. (2004). Off-Task Behavior in the Cognitive Tutor Classroom: When Students “Game The System”. Proceedings of ACM CHI 2004: Computer-Human Interaction, 383-390.

- Shih, B., Koedinger, K. & Scheines, R. (2008). A Response Time Model for Bottom-Out Hints as Worked Examples. In: Educational Data Mining Conference 2008.

- See the recent ‘moocshop’ at the 2013 International Conference on Artificial Intelligence in Education https://sites.google.com/site/moocshop/

Author Details

Email: patricia.charlton@pobox.com

Web site: http://tinyurl.com/TrishCharlton

Patricia Charlton is a researcher on artificial intelligence, cognitive science and technology-enhanced learning. She has recently researched and designed knowledge co-construction systems using computational reflection and meta-level inferencing techniques.

Email: m.mavrikis@lkl.ac.uk

Web site: http://www.lkl.ac.uk/manolis

Manolis Mavrikis is a Senior Research Fellow on Technology-Enhanced Learning in the London Knowledge Lab. His research lies at the intersection of artificial intelligence, human-computer interaction and machine learning, and aims to design and evaluate transformative intelligent technologies for learning and teaching.

Email: Demetra.Katsifli@jenzabar.com

Web site: http://www.jenzabar.com/

Demetra Katsifli is responsible for leading the implementation of academic technologies for universities and colleges. Her professional career in Higher Education spans 33 years and has focused on leading the enterprise services and systems that underpin the core business of education. Her research interest is in educational technology in Higher Education.