Realising the Potential of Altmetrics within Institutions

Jean Liu and Euan Adie of Altmetric take a look at the growing presence of altmetrics in universities, and consider some of the potential applications.

The concept of alternative metrics as indicators of non-traditional forms of research impact – better known as ‘altmetrics’ – has been gaining significant attention and support from both the scholarly publishing and academic communities. After being adopted by many publishing platforms and institutional repositories within the past year, altmetrics have entered into the scholarly mainstream, emerging as a relevant topic for academic consideration amidst mounting opposition to misuse of the Journal Impact Factor.

The future of altmetrics has mostly been discussed in the context of highlighting research impact. Although the metrics themselves still require much refinement, qualitative highlights from these data are valuable and have already begun to appear in the digital CVs of researchers. It is likely that qualitative altmetrics data will be increasingly used to inform research assessment, such as in funding applications, as well as faculty promotion and tenure. However, the development of altmetrics is still in its early stages. Moreover, much of the data collected at the moment indicates the attention paid to, rather than the quality of, different scholarly works, and it is important to bear this in mind and to distinguish between the different kinds of impact that a piece of research can have [1]. Altmetrics are very good at finding evidence of some kinds of impact, but less good at finding evidence of others. They complement rather than replace existing methods.

The Altmetric Service

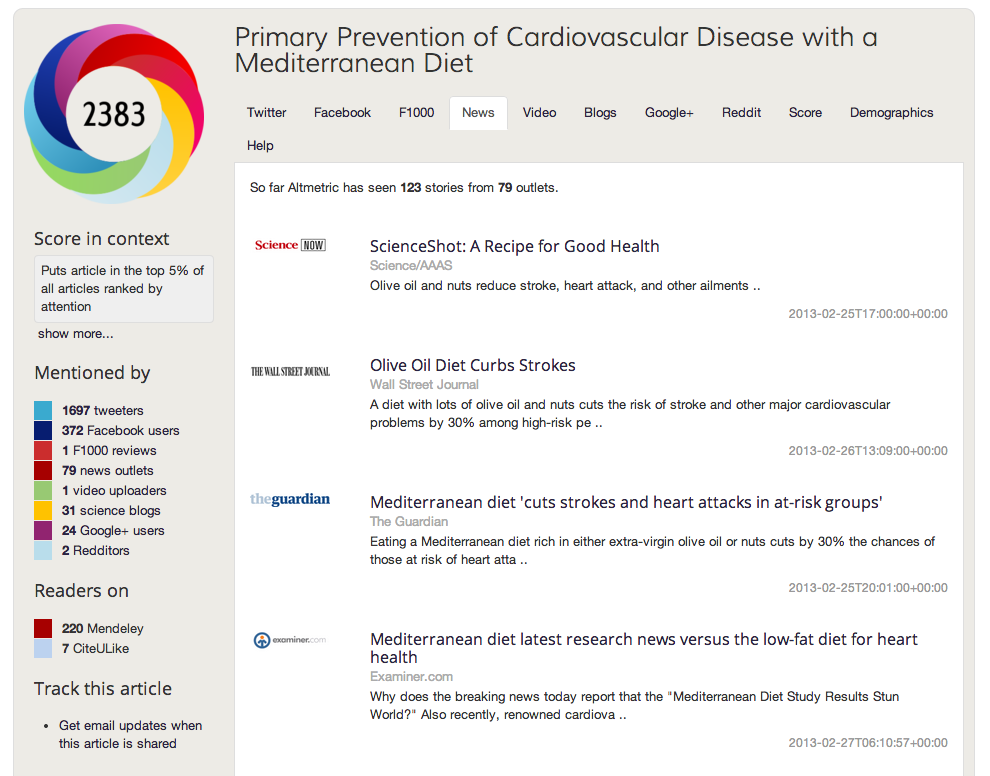

Our service, Altmetric [2], is an aggregator of online attention surrounding scholarly content, primarily journal articles and datasets [3]. Mentions of scholarly content on social media posts, news coverage, blog posts, and other sources are picked up as online attention, and are displayed in “article details pages”, which allow users to see who has mentioned specific articles, and what has actually been said (Figure 1). The article details pages were principally designed with publishers and their authors in mind, and include both quantitative measures (raw metrics, along with an aggregate estimate of attention known as the Altmetric score [4]) and qualitative content (the mentions themselves). In the context of research assessment, it is important to bear in mind that the Altmetric score merely estimates the amount of attention paid to the article, and does not in any way judge the quality of a scholarly work. The score’s primary use is for article discovery, as readers are able to filter quickly through an immense collection of articles by the amount of social attention, therefore potentially uncovering new and interesting articles to read.

Figure 1: Screenshot of an Altmetric article details page

Although Altmetric’s data and tools have thus far been geared towards scholarly publishers, we have recently begun to work with institutions. Our institutional experience formally began in February 2013, when we started providing services and tools free to academic librarians and institutional repositories [5]. The feedback that we have received from librarians and repository managers has been very positive, although the fact that Altmetric’s products are publisher-oriented means that there is still much to be done to achieve greater value for institutional use.

In this article, we will explore the potential for altmetrics to be used effectively within the institutional environment. We will also examine some of the possible applications of altmetrics from the perspectives of the different end-users and of the university as a research institution.

Enriching Altmetrics for Researchers

Many of the online discussions taking place about research are initiated by academics, who are increasingly using social media platforms [6], blogs [7], and Web-based peer review platforms for scholarly discourse. Qualitative altmetrics data are potentially useful to researchers on a personal level. Altmetrics tools aggregate online attention rapidly, and within days of an article’s publication, researchers are often able to see the online conversations about and mentions of their work that would otherwise have been difficult and time-consuming to find.

Many researchers are pleasantly surprised by the amount of press coverage and social media attention accorded their articles, and are also appreciative of how the metrics are calculated at the article (not journal) level. One neuroscientist who had published in a newer open-access journal wrote to us, saying that Altmetric ‘… allow[s] research articles like mine, that were largely ignored by the major journals, to have a chance at making an impact and contributing to my career.’ [8]

The immediacy of altmetrics is a great advantage to researchers, especially when describing the impacts of their work. Altmetrics are rapid proxies for attention and article uptake [9]. Moreover, qualitative altmetrics data can occasionally provide evidence that an article has made an impact within society, separate from an article’s academic impact (as indicated by the number of citations) [2]. For instance, healthcare practitioners may be less likely to publish and cite works in the medical literature, but might only have time to blog or tweet about new interventions that they have read about and applied in the clinic.

Scholarly Publishers’ Use of Altmetrics

Although researchers have much to gain from consuming altmetrics data, the available filtering applications and data analysis tools have so far been more focused on the needs of publishers. We have seen that publishers are motivated to adopt altmetrics into journal platforms for two main reasons:

- to help their editors understand the reader engagement with their articles [10]; and

- to provide valuable data that can potentially incentivise researchers to submit papers to the publication.

At Altmetric, we have collected attention data for approximately 1.7 million articles (as of 13 November 2013). For our publisher-oriented application, the Altmetric Explorer, we developed various filters to help users find articles of interest and navigate quickly through the huge amount of scholarly content. The filters include time frame, journal titles (and ISSN), article identifiers (e.g. DOI, PubMed ID, or handle), publisher, PubMed query, and others, but filtering by people or institutions is still in active development. We also provide contextual information to help users make sense of the attention scores, but the comparisons are at the journal, rather than discipline, level. As toolmakers who are also interested in exploring the potential of altmetrics in institutions, we think that it is important to address some key areas for improvement.

Clustering Altmetrics Data by People and by Institutions

We envision a smarter way to organise altmetrics data, which not only connects researchers more efficiently with the attention surrounding their work, but also provides a more logical way to link up the many articles that comprise distinct research projects. Institutions should be able to track the online impacts of their faculty members’ intellectual outputs, and individual researchers should be able to see where their own contributions lie.

Moreover, further granularity that reflects the organisation of universities should be implemented. Any attention that surrounds the output of whole research groups, comprised of labs in the same department or even inter-disciplinary, inter-departmental teams, should be more efficiently aggregated and presented by an institutional altmetrics tool. However, rather than simply creating ‘researcher-level metrics’ (as opposed to article-level and journal-level metrics), it will remain necessary to emphasise the qualitative attention data above the quantitative.

Discipline-specific Context for Metrics of Attention

Interestingly, our data show that 30-40% of recent biomedical papers will have attention tracked by Altmetric, but that this is only the case for less than 10% of articles in the social sciences. Overall, altmetrics data are typically available for science, technology, engineering, and mathematics (STEM) fields, whereas disciplines in the humanities are currently poorly represented.

What accounts for these discrepancies in online attention across disciplines? One explanation could lie in the inherent biases that are present in methods of data collection used by altmetrics tools. For items such as social media posts and blog posts, altmetrics tools can only pick up mentions and conversations if hyperlinks to scholarly content are included [11]. Amongst academics and communicators in STEM, there seems to be awareness of good ‘linking behaviour’, which makes it easier for mentions to be collected. (Furthermore, altmetrics tend to be publicised more for STEM fields, and therefore there could be a greater awareness of altmetrics in general.) Bloggers and social media users in STEM fields are diligent in including URLs to scholarly content, and sites such as Research Blogging [12] have gone further by developing more standardised ways for citing scholarly articles in blogs.
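The dependence on hyperlinks can be illustrated with a minimal sketch. It assumes that a mention is detected only when a post contains a link to a DOI resolver; the pattern `DOI_LINK` and the function `extract_dois` are hypothetical names chosen for illustration, and this is not a description of how Altmetric or any other tool actually collects mentions.

```python
import re

# Hypothetical pattern (an assumption for this sketch): matches DOI resolver
# links such as http://dx.doi.org/10.1371/journal.pone.0035869
DOI_LINK = re.compile(
    r"https?://(?:dx\.)?doi\.org/(10\.\d{4,9}/[^\s\"<>]+)", re.IGNORECASE
)


def extract_dois(post_text):
    """Return the DOIs that a post links to, in order, without duplicates."""
    found = []
    for match in DOI_LINK.finditer(post_text):
        doi = match.group(1).rstrip(".,;)")  # drop trailing punctuation
        if doi.lower() not in (d.lower() for d in found):
            found.append(doi)
    return found


# A post that links to the article can be matched to it...
linked = extract_dois(
    "Great read: http://dx.doi.org/10.1371/journal.pone.0035869."
)
# ...whereas a post that only names the article yields nothing.
unlinked = extract_dois("Great read: the new PLOS ONE paper on research blogs")
```

In this sketch the second post discusses the same article but is invisible to the collector, which is the kind of bias described above.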

Another explanation relates to the different formats of research outputs that are produced by the various fields. Books, for instance, are frequently cited scholarly works in the humanities, and yet are not tracked by most altmetrics tools. Academics in the humanities could be blogging more frequently about books, not papers, but this attention would therefore not be captured. A general observation is that bloggers in humanities fields tend to make reference to older works, and article URLs, which altmetrics tools require, are less frequently included.

Presently, the uptake and usage of social media tools are by no means consistent amongst academics across disciplines, and in certain fields there may even be a tendency to use particular services over others. Additionally, in the mainstream news, there is a marked bias in reporting towards health and life sciences topics. Finally, it is already known that older articles will tend to have higher metrics and attention scores simply because they have had more time to accumulate mentions.

Regardless of the reasons which divide STEM fields and the humanities, it is clear that without suitable discipline-specific contextual information for available attention data, users have no way to determine what amount of attention is typical for a particular field or sub-field. For instance, what is a ‘normal’ amount of attention that an article about Renaissance art would be expected to receive? How much attention would be expected for an article in high-energy particle physics? The need to establish discipline-specific context and benchmarks has grown out of a reliance on the metrics, although having some way to establish the ‘norm of the field’ for qualitative data would also be useful.

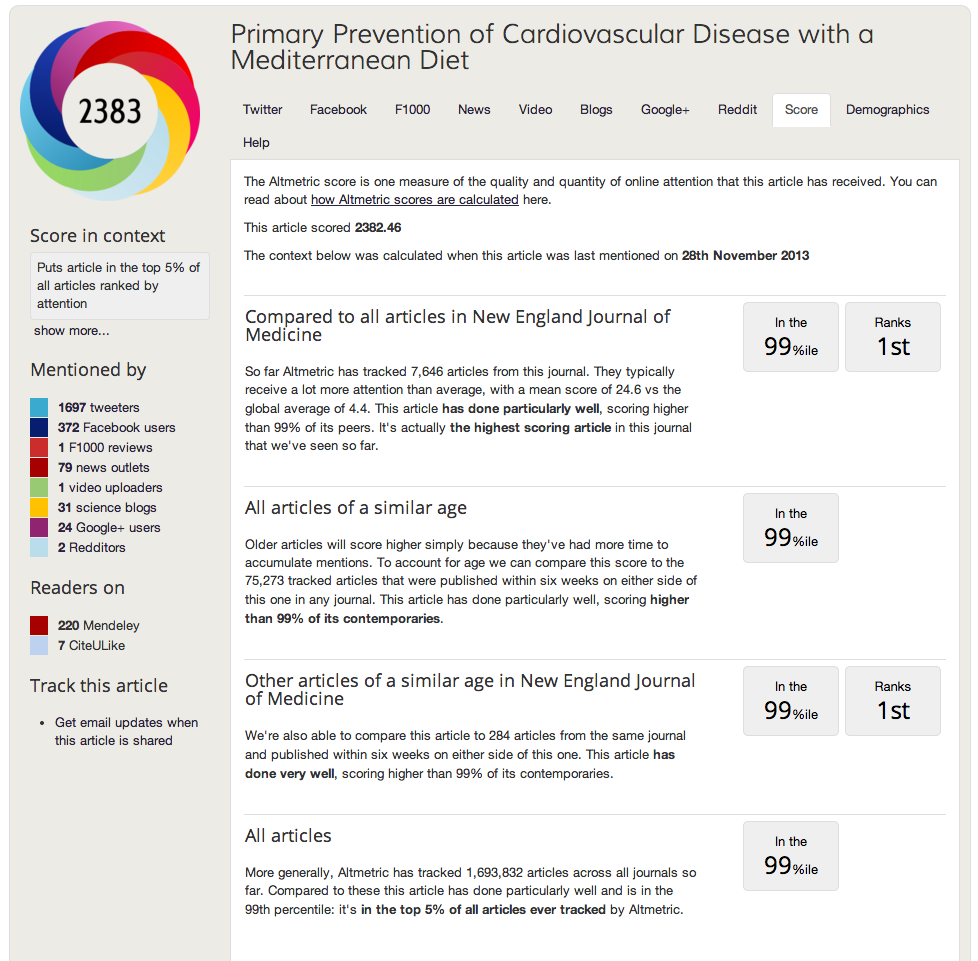

While Altmetric does emphasise the stories behind the numbers, we do provide raw metrics and a quantitative estimate score of attention (the Altmetric score). For the latter, we provide journal-specific contextual information. Although not as finely tuned as discipline-specific benchmarks would be, journal-specific benchmarks are still useful. Taken in isolation, the numeric value of the scale-less Altmetric score would give users little sense of how much attention this article received in comparison to others. On Altmetric article details pages, we provide a tab labelled ‘Score’ (Figure 2), which displays the results of various automatic calculations for context, including different kinds of comparisons with other articles by journal and by date of publication.

Figure 2: Screenshot of the Score tab in an Altmetric article details page
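One simple form of journal-level context is a percentile rank. The sketch below is illustrative only: the function name `percentile_in_context` and the scores are invented, and it does not claim to reproduce the calculations shown on the Score tab.

```python
def percentile_in_context(score, peer_scores):
    """Percentage of peer articles whose score is strictly lower than `score`."""
    if not peer_scores:
        raise ValueError("need at least one peer article for context")
    lower = sum(1 for s in peer_scores if s < score)
    return 100.0 * lower / len(peer_scores)


# Invented scores for articles from one journal, published in a similar period
journal_peers = [0, 1, 1, 2, 3, 5, 8, 13, 40, 120]

# A score of 13 means little alone; relative to its peers it is easier to read.
rank = percentile_in_context(13, journal_peers)
```

The same function could be applied to a discipline-level peer group instead, if a reliable way of assigning articles to disciplines were available.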

Being able to construct discipline-specific benchmarks would be useful, but unfortunately, establishing discipline-specific context in attention metrics is technically challenging. Journal article metadata do not typically include the discipline of the article; rather, the overarching disciplines that the journal itself covers tend to be used. However, this becomes particularly problematic for articles in multidisciplinary journals such as PLOS ONE, Nature, Science, and PNAS. For these types of journals, precise topics that pertain to individual articles will need to be analysed from the full text, perhaps through text-mining or manual input.

New Uses for Altmetrics

Altmetrics data are collected rapidly and in great quantities. On a daily basis, Altmetric picks up 15,000 mentions of scholarly content; over a week, Altmetric sees mentions of 22,000 unique articles (as of 13 November 2013). In order to interpret all these data, it is important to know what questions need to be answered; these questions can be different depending on the user.

While journal editors and researchers tend to be the main consumers of altmetrics data right now, other users can potentially benefit as well. In the following section, we speculate on some possible institutional users who might gain insights from altmetrics.

Informing University Communications

In order to attract talented students and faculty, bolster community relations, and promote a positive image of the university, institutions must be able to raise awareness of their achievements and intellectual outputs successfully. To reach the broader public, the achievements of the institution might be communicated in a variety of ways, including press releases, external reports, blogs, print newsletters, media campaigns, and more. Altmetrics data can potentially aid communications and press officers’ strategies in two important ways:

- the identification of actionable trends in attention; and

- the measurement of the success of media campaigns.

Since researchers do not always notify university communications teams about their new publications, media and press officers may not be aware of the institution’s newest research. Continuously monitoring altmetrics data associated with researchers or departments from the university may provide a solution. Spikes in attention for specific articles or topics can be useful early signals of public interest.

A recent article submitted to the pre-print server arXiv serves as an interesting example. In early September 2013, an article entitled “Automated Password Extraction Attack on Modern Password Managers” was submitted to arXiv by researchers at Carnegie Mellon University [13]. A day or two after the article first appeared on arXiv, 6 people tweeted about it [14]. There was a period of silence, until early November 2013, when there was a second wave of uptake, and the article was tweeted by a further 25 users. What was responsible for the second wave? Perhaps there was a topical reason; it is possible that the spike in interest was related to recent events, which heightened awareness of Web security issues amongst the general public. Such events include a security breach at Adobe in the autumn of 2013, during which millions of users’ passwords were leaked.

From a communications perspective, the second wave of Twitter attention and the connection with recent events could be a good signal of public interest. As such, the university’s press office could consider increasing awareness of these researchers’ article, perhaps by distributing a press release or posting a highlight piece on the institution’s blog. In this way, altmetrics could provide a way of gauging potential areas of public interest, allowing communications experts to focus their outreach activities to maximise exposure.
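A minimal sketch of how such spikes might be flagged automatically is given below. It assumes that daily mention counts per article are available; the function `find_spikes`, its thresholds, and the daily counts are all invented for illustration (only the totals of 6 and 25 tweets echo the example above), and it is not a description of an existing Altmetric feature.

```python
def find_spikes(daily_counts, window=7, factor=3.0, min_mentions=5):
    """Return indices of days whose count is well above the trailing average."""
    spikes = []
    for day, count in enumerate(daily_counts):
        history = daily_counts[max(0, day - window):day]
        baseline = sum(history) / len(history) if history else 0.0
        # Flag a day only if it has enough mentions to matter and clearly
        # exceeds the average of the preceding `window` days.
        if count >= min_mentions and count > factor * baseline:
            spikes.append(day)
    return spikes


# Invented daily tweet counts: a small first wave (6 tweets in total),
# a period of silence, then a larger second wave (25 tweets in total).
counts = [4, 2, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 15, 10]
spike_days = find_spikes(counts)
```

With these assumed thresholds the first wave is too small to be flagged, while both days of the second wave are. A press office would probably want to tune the thresholds to the typical level of attention in each field.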

Altmetrics may also be helpful in determining which online communication formats and styles are effective for conveying messages about the university’s research and achievements. For example, it might be noted from qualitative altmetrics data that the inclusion of research-related images leads to greater uptake of press releases; it might also be observed that a specific journalist’s blog coverage of the story has been particularly popular, and so the university could reach out to the journalist again in the future.

Qualitative altmetrics are portals into the online world of research communications. As such, we believe that altmetrics, aggregated at the institutional or departmental level, have the potential to help university communications teams to interact effectively with the press and to understand how best to utilise online self-publishing platforms.

Enriching Undergraduate Education and Postgraduate Training

Altmetrics tools are valuable for filtering and discovering new scholarly content. In terms of education, altmetrics tools have some applications in training for both postgraduate and undergraduate students. Researchers, including postgraduate students, can clearly benefit from altmetrics in the most direct way, as attention surrounding many different research outputs can be tracked. These outputs include journal articles, theses, dissertations, presentation slides, datasets, and other documents, and are commonly deposited into institutional repositories. Since early 2013, Altmetric has supported a number of open access institutional repositories in the UK and abroad, including IUScholarWorks (Indiana University, USA) [15], Opus (University of Bath) [16], LSE Research Online (London School of Economics) [17], and Enlighten (University of Glasgow) [18]. The monitoring of online attention surrounding a variety of research outputs may help postgraduate students to gauge both academic feedback and public interest in their work.

Undergraduate students are usually introduced to scholarly articles in the classroom, during the preparation of assignments, essays, and presentations. Altmetrics data, which can tell meaningful stories about societal uptake, and perhaps impact of recent research, could help to foster students’ interest in course topics. For instance, lecturers could provide students with ‘socially curated’ or ‘trending article’ reading lists, supplemented with qualitative altmetrics data to direct further exploration of a particular topic and its associated online communities. The Altmetric Explorer application is useful for compiling such reading lists, as it allows for custom filtering of articles by various parameters, including Altmetric score, publication date, and subject. Moreover, some publishers include trending articles lists on their Web sites, which may also be of interest to lecturers and their students.

Conclusion

No longer considered purely ‘alternative’, altmetrics are the necessary byproducts of the digitisation of scholarly content. In the near future, there will likely be increased uptake of altmetrics tools in the institutional environment, perhaps leading to greater pressure to use the data in research assessment. As altmetrics toolmakers, we should strive to build institution-oriented tools that suit the needs of different institutional users, and to incorporate features that help those users interpret the data appropriately.

References

- Liu J., Adie E., “New perspectives on article-level metrics: developing ways to assess research uptake and impact online”, Insights: the UKSG journal 26, 153-158, July 2013 http://dx.doi.org/10.1629/2048-7754.79

- Altmetric http://www.altmetric.com

- Adie, E., Roe, W., “Altmetric: enriching scholarly content with article-level discussion and metrics”, Learned Publishing, 26, 11-17, January 2013 http://dx.doi.org/10.1087/20130103

- Altmetric: How we measure attention http://www.altmetric.com/whatwedo.php#score

- Adie, E., “Altmetrics in libraries and institutional repositories”, Altmetric Blog, January 2013 http://www.altmetric.com/blog/altmetrics-in-academic-libraries-and-institutional-repositories

- Darling, E. S., Shiffman, D., Côté, I. M., Drew, J. A., “The role of Twitter in the life cycle of a scientific publication”, Ideas in Ecology and Evolution 6, 32-43, October 2013 http://dx.doi.org/10.4033/iee.2013.6.6.f

- Shema H., Bar-Ilan, J., Thelwall, M., “Research Blogs and the Discussion of Scholarly Information”, PLOS ONE 7, e35869, May 2012 http://dx.doi.org/10.1371/journal.pone.0035869

- Personal communication

- Thelwall, M., Haustein, S., Larivière, V., Sugimoto, C. R., “Do Altmetrics Work? Twitter and Ten Other Social Web Services”, PLOS ONE 8, e64841, May 2013 http://dx.doi.org/10.1371/journal.pone.0064841

- Gibson, D., Baier, A., Chamberlain, S., “Uptake of Journal of Ecology papers in 2012: A comparison of metrics (UPDATED)”, Journal of Ecology Blog, January 2013 http://jecologyblog.wordpress.com/2013/01/17/uptake-of-journal-of-ecology-papers-in-2012-a-comparison-of-metrics

- Liu, J., Adie E., “Five challenges in altmetrics: A toolmaker's perspective”, Bulletin of the American Society for Information Science and Technology 39, 31-34, April/May 2013 http://dx.doi.org/10.1002/bult.2013.1720390410

- Research Blogging http://researchblogging.com

- Gonzalez, R., Chen, E. Y., Jackson, C., “Automated Password Extraction Attack on Modern Password Managers”, arXiv:1309.1416, September 2013 http://arxiv.org/abs/1309.1416

- Altmetric article details page for “Automated Password Extraction Attack on Modern Password Managers” http://www.altmetric.com/details.php?citation_id=1734330

- IUScholarWorks – Indiana University https://scholarworks.iu.edu

- Opus: Online Publications Store – University of Bath http://opus.bath.ac.uk

- LSE Research Online – London School of Economics http://eprints.lse.ac.uk

- Enlighten – University of Glasgow http://eprints.gla.ac.uk

Author Details

Email: jean@altmetric.com

Web site: http://www.altmetric.com

Jean Liu is the product development manager and data curator for Altmetric.com. Previously, she was a researcher at Dalhousie University in Halifax, Nova Scotia, where she obtained an MSc in Pharmacology/Neuroscience.

Email: euan@altmetric.com

Web site: http://www.altmetric.com

Euan Adie is the founder of Altmetric.com, which tracks the conversations around scholarly articles online. He was previously a senior product manager at Nature Publishing Group where he worked on projects like Connotea and Nature.com Blogs.