Serving Services in Web 2.0

"I want my browser to recognise information in Web pages and offer me functionality to remix it with relevant information from other services. I want to control which services are offered to me and how they are offered."

In this article I discuss the ingredients that enable users to benefit from a Service-Oriented Architecture (SOA) by combining services according to their preferences. This concept can be summarised as a user-accessible, machine-readable knowledge base of service descriptions in combination with a user agent. These service descriptions contain, amongst other things, information on how the output of services can be used as input for other services. The user agent interprets the response of services and can use information in the knowledge base to generate relevant additional links in the response. This concept is an extrapolation of the use of OpenURL [1] and goes beyond linking to an appropriate copy. Publishing and formalising these service descriptions lowers the barrier for users wishing to build their own knowledge base, makes it fun to integrate services and will contribute to the standardisation of existing non-standard services.

I hope that publishing this concept at such an early stage of its development will encourage contributions from others.

Trend and Goals

The concept described in this article is inspired by the observation of a trend and the expectation that we can take advantage of this trend in reaching the following goals:

- Improve sharing of information and services

- Offer the user enriched context-sensitive information within the reach of a mouse click

- Lower the implementation barriers to create new functionality by combining existing services

- Enhance personalisation, thereby making it easier for the user to choose which services to integrate

This trend is partly based on Web 2.0 [2]: mixing the services from different providers and users in a user-controlled way. A user may find information in one place and the algorithms to use that information in another. The intelligent combination of both may offer the user extra functionality, more comfort or just more fun.

One of the components often associated with Web 2.0 is Ajax (Asynchronous JavaScript and XML). Ajax is a mechanism whereby XML responses from different targets can be requested from a single Web page and retrieved asynchronously, without the screen freezing while waiting for responses. It also allows such responses to be dynamically transformed and mixed into a new presentation.
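Ajax itself runs as JavaScript in the browser. The following Python sketch only mirrors the underlying idea: requests to several targets are started together and none of them blocks the others while waiting. The target names and delays are invented, and `asyncio.sleep` stands in for real network I/O.

```python
import asyncio

async def fetch(target: str, delay: float) -> str:
    """Stand-in for an HTTP request; the sleep simulates network latency."""
    await asyncio.sleep(delay)
    return f"<response from='{target}'/>"

async def fetch_all(targets: dict[str, float]) -> list[str]:
    # All requests are started at once; the slow one does not hold up the fast one.
    return await asyncio.gather(*(fetch(name, delay) for name, delay in targets.items()))

responses = asyncio.run(fetch_all({"catalogue": 0.2, "thesaurus": 0.1}))
print(responses)
```

`asyncio.gather` returns the results in the order the requests were given, so the responses can afterwards be mixed into one presentation.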

I consider Web 2.0 to be not so much a technical change as a change in mindset. Service and data providers realise that their products are part of a larger environment and can gain in value when they integrate optimally with that environment. The major difference in this modern mindset is making one's own data easily accessible to other services rather than protecting it.

It is likely that many users will want to control the integration of information in the same way as they control their bookmarks or favourites, rather than letting their institution or Internet provider control it. Personalisation will go beyond the preferences offered by each single service provider. By enabling users to mix the different options and services offered by several service and data providers, a more personal browser environment is created. Static links make room for dynamically generated links based on intelligent use of context and knowledge of the user's preferences. Part of this process may even happen in the background, invisible to the user, only becoming visible when the results are relevant to the user based on user-defined criteria. For example, a pop-up with the message "I found something!" may appear after a 'no hits' result, because a user agent has tried other searches without bothering the user.

The Philosophy

Enhancing the integration of services begins with making it easier to find services and to generate requests to them automatically. This requires:

- Standardisation of access to services

- Publication of standardised service descriptions

- Creation of service description registries to facilitate finding services

However, if we address the situation as it stands rather than waiting for it to become what we would prefer, we need to describe non-standard services as well. Describing non-standard services as they actually exist, in a machine-readable and standardised way, allows us to make use of what is currently available. It also facilitates the standardisation of those services and might help to combine (the best of) different native standards and evolve them into new ones.

A major component in the proposed service descriptions is the relation between services, metadata and the criteria that might invoke those services. This concept of context-sensitive linking is known from OpenURL, but the approach of this article takes it further. In the end it should resemble what the human brain does: a certain response generates possible activities not based on exact definitions but based on a user's experience and preference. A 'standard' for this purpose might therefore not be a conventional one, as it should be developed according to the world as experienced by a new generation of users rather than by a standardisation committee.

The Components

To explain the philosophy of the integration of services in practice let me first identify the components involved:

- The starting point each time is a response from a service, for example an XML metadata record or a Web page containing machine-readable metadata.

- The services. In this article any application invoked by a URL that returns data is regarded as a service. Examples of services are SRU services (Search and Retrieval via URL) [3] that access a library catalogue, resolution services, linking services, translation services and services that zoom in on a remote image. Even a collection of documents linked by static URLs could, in some cases, be considered a service. The URL that invokes a service identifies that service; part of this URL will be variable.

- A knowledge base containing information on services, how the service is accessed, which metadata should trigger them (or trigger the presentation of a link) and the user preferences with respect to handling such services.

- A user agent. This is the active component that interprets the response from a service and uses information from the knowledge base to generate new links to other services, possibly invoking those services automatically. The user agent can be one of the following:

- A browser extension (e.g. for Firefox), loaded as part of the browser and able to access and change the current Web page

- A portal running in the browser (Ajax), that generates a presentation based on the XML response. An example of such a portal is The European Library portal [4]

- A server side application that intercepts user requests and server responses

- A conventional OpenURL link resolver

It is proposed here that all these types of user agent could make use of the same knowledge bases.

Description of the Process

How does it work? Consider an information service as a Web application that is identified by a base-URL like "http://host/application" and that takes some URL parameters following the question mark, like "request=whatever&parameter=xyz", resulting in the URL:

http://host/application?request=whatever&parameter=xyz
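As a sketch of this model (Python, standard library only), a user agent can keep the fixed base-URL that identifies the service apart from the variable parameters and join them only when a request is needed. The function names are illustrative:

```python
from urllib.parse import parse_qs, urlencode, urlsplit

def build_service_url(base_url: str, params: dict[str, str]) -> str:
    """Join a service's base-URL with URL-encoded query parameters."""
    return f"{base_url}?{urlencode(params)}"

def split_service_url(url: str) -> tuple[str, dict[str, list[str]]]:
    """Recover the base-URL (which identifies the service) and its parameters."""
    parts = urlsplit(url)
    return f"{parts.scheme}://{parts.netloc}{parts.path}", parse_qs(parts.query)

url = build_service_url("http://host/application", {"request": "whatever", "parameter": "xyz"})
print(url)  # http://host/application?request=whatever&parameter=xyz
print(split_service_url(url))
# ('http://host/application', {'request': ['whatever'], 'parameter': ['xyz']})
```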

Now suppose there is a registry of services (the knowledge base) that contains, for each service, a description and data about how to access the service, such as the URL, the input parameters and the response. For each service the description contains the metadata fields that trigger the user agent to create a link to this service. For example, when the metadata contain an abstract, a link to a translation service can be presented to the user. A simplified overview of such a knowledge base can be seen in Figure 1. Figure 4 shows an example in which this information is used to create links.

Figure 1: Overview of simplified service descriptions as an example of a knowledge base.

The entries highlighted are used in Figure 4.
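A minimal, hypothetical in-memory representation of such service descriptions might look as follows. The field names (`base_url`, `input_param`, `triggers`, `fixed_params`) and the example entries are assumptions for illustration, not the schema of the prototype knowledge base:

```python
from dataclasses import dataclass, field

@dataclass
class ServiceDescription:
    name: str
    base_url: str       # identifies the service
    input_param: str    # URL parameter that receives the triggering value
    triggers: set[str] = field(default_factory=set)             # metadata fields that trigger a link
    fixed_params: dict[str, str] = field(default_factory=dict)  # parameters that never vary

# Hypothetical entries; the URLs are placeholders.
KNOWLEDGE_BASE = [
    ServiceDescription("Translate", "http://translate.example.org/", "text",
                       {"abstract"}, {"target": "en"}),
    ServiceDescription("Google images", "http://images.example.com/search", "q",
                       {"creator", "subject"}),
]

def services_triggered_by(field_name: str, kb: list[ServiceDescription]) -> list[str]:
    """Names of the services for which this metadata field is a trigger."""
    return [s.name for s in kb if field_name in s.triggers]

print(services_triggered_by("abstract", KNOWLEDGE_BASE))  # ['Translate']
```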

In conventional situations, when the user wants the abstract to be translated, he or she needs to cut and paste it into another Web site. However, if this knowledge is contained in a knowledge base similar to that described above and is accessible by a user agent, this agent may create a link to the translation service because the field 'abstract' was defined as a trigger. The user agent may modify the response by attaching a link to the abstract field. Clicking on this link will invoke the translation service, with the abstract automatically used as one of the input fields. Figure 2 illustrates the subsequent steps graphically.

Figure 2: Steps in the process of using the output from service A as input to service B based on information in the knowledge database
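The linking step described above, in which the user agent modifies the response by attaching a link to a triggering field, can be sketched in Python as follows. The trigger table, service URL and HTML layout are hypothetical:

```python
from html import escape
from urllib.parse import urlencode

# Hypothetical service description: the field 'abstract' triggers a translation service.
TRIGGERS = {"abstract": ("Translate", "http://translate.example.org/", "text")}

def render_field(name: str, value: str) -> str:
    """Render one metadata field as HTML, adding a service link if the field is a trigger."""
    html = f"<p><b>{escape(name)}:</b> {escape(value)}"
    if name in TRIGGERS:
        label, base_url, param = TRIGGERS[name]
        # The field's own value becomes the input of the linked service.
        url = f"{base_url}?{urlencode({param: value})}"
        html += f' <a href="{escape(url)}">[{escape(label)}]</a>'
    return html + "</p>"

print(render_field("abstract", "Ein kurzer Text"))
```

Fields that are not triggers are rendered unchanged, so the original response remains readable even when no service applies.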

Of course the above description is oversimplified. The trigger to create a link may be based on much more complex criteria than the mere presence of a metadata field, and how the service is invoked (e.g. automatically or on request) will depend on the user's preferences.

Describing the relation between input and output of services in a machine-interpretable way will allow intelligent applications to take information from a response and provide the user with a new mix of information or with suggestions for accessing new services. In this way information from mutually independent services can be integrated without the services having to be aware of each other (loosely coupled services). This becomes more valuable when it can be personalised in a simple way, with the user determining which relations and which services are relevant and how they are invoked. Services can be invoked automatically (e.g. requesting a thumbnail); they can be suggested to the user if relevant (e.g. doing a new search based on results in a thesaurus); or they can be selected on request from a context-sensitive menu.
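The three invocation modes mentioned above could be driven by a simple preference table. This Python sketch assumes a hypothetical mapping from service names to modes and, as an assumption of its own, offers any service the user has not configured only on request:

```python
from enum import Enum

class Mode(Enum):
    AUTOMATIC = "automatic"    # e.g. request a thumbnail without asking
    SUGGEST = "suggest"        # offer the service when it seems relevant
    ON_REQUEST = "on_request"  # list it in a context-sensitive menu
    OFF = "off"                # never offer it

# Hypothetical user preferences: service name -> invocation mode.
PREFERENCES = {
    "Thumbnail": Mode.AUTOMATIC,
    "Thesaurus search": Mode.SUGGEST,
    "Google images": Mode.ON_REQUEST,
}

def plan(triggered: list[str], prefs: dict[str, Mode]) -> dict[Mode, list[str]]:
    """Group the services triggered by a response according to the user's preferred mode."""
    groups: dict[Mode, list[str]] = {mode: [] for mode in Mode}
    for name in triggered:
        # Assumption: services without an explicit preference appear only on request.
        groups[prefs.get(name, Mode.ON_REQUEST)].append(name)
    return groups

print(plan(["Thumbnail", "Google images", "Translate"], PREFERENCES)[Mode.ON_REQUEST])
# ['Google images', 'Translate']
```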

An Example Implementation Using Ajax

To develop a standard schema for service descriptions in a knowledge database, a prototype implementation based on the Ajax concept has been made. Figure 3 shows the architecture. The basic components are the XML file containing the descriptions (name and address) of SRU-searchable targets and a stylesheet that transforms this XML into a JavaScript portal running in the browser. The portal sends requests to these targets and transforms their SRU responses into HTML.

To illustrate the integration of services, a knowledge base containing service descriptions in XML has been added, together with an XSL user agent. This user agent is nothing more than a stylesheet that transforms the XML knowledge base into HTML and JavaScript, in which dynamic links to services in the knowledge base are created for the metadata fields that are defined as a "trigger" for a service.

Figure 3: Architecture of an Ajax implementation of a portal with a user agent and a services knowledge database

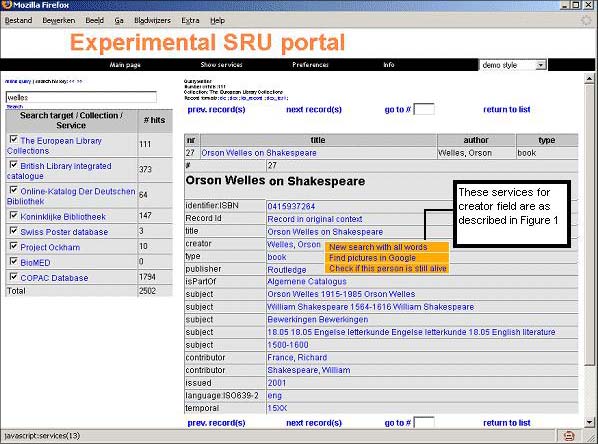

The implementation is at http://krait.kb.nl/coop/tel/SRUportal. In Figure 4 a screenshot is shown to illustrate what a response may look like.

Figure 4: Screenshot illustrating the linking driven by service descriptions. Note the services for the creator field as described in Figure 1.

The screenshot shows a record with Orson Welles as creator. In the knowledge base displayed in Figure 1 there is an entry for a service 'Google images', and this entry contains the field "creator" as one of the triggers for the service. The XSL user agent has therefore created a link, so that clicking on the creator name shows a menu of services in which 'Find pictures in Google' is an option. There is also an entry for the service named 'Dead or alive', resulting in an extra menu item 'Check if this person is still alive'. Clicking on a menu item follows a 'deep link' to the requested information. In this implementation users may specify their own knowledge base (service description file) under 'preferences' and so let the user agent automatically generate links to all kinds of services, such as translation services and searches in other databases, based on the triggers in the service descriptions in their knowledge base.
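The prototype does this in XSLT. The same logic is sketched below in Python for readability: read a Dublin Core record and build a menu of deep links for every field that triggers a service. The record, menu labels and service URLs are hypothetical placeholders, not the endpoints used by the prototype:

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode

DC_NS = "{http://purl.org/dc/elements/1.1/}"

# Hypothetical knowledge base entries: (menu label, base URL, query parameter, trigger fields).
SERVICES = [
    ("Find pictures in Google", "http://images.example.com/search", "q", {"creator"}),
    ("Check if this person is still alive", "http://deadoralive.example.org/", "name", {"creator"}),
    ("Translate", "http://translate.example.org/", "text", {"description"}),
]

def build_menus(record_xml: str) -> dict[str, list[tuple[str, str]]]:
    """Map each triggering Dublin Core field to a menu of (label, deep link) pairs."""
    menus: dict[str, list[tuple[str, str]]] = {}
    for elem in ET.fromstring(record_xml):
        if not elem.tag.startswith(DC_NS):
            continue
        name, value = elem.tag[len(DC_NS):], (elem.text or "").strip()
        for label, base_url, param, triggers in SERVICES:
            if name in triggers:
                menus.setdefault(name, []).append((label, f"{base_url}?{urlencode({param: value})}"))
    return menus

record = """<record xmlns:dc="http://purl.org/dc/elements/1.1/">
  <dc:title>Example title</dc:title>
  <dc:creator>Welles, Orson</dc:creator>
</record>"""
for field_name, items in build_menus(record).items():
    print(field_name, [label for label, _ in items])
```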

Semantic Tagging

Ideally the response of a service is, as in the above example, in XML. However not all services provide the response in XML. Branding is often a reason for using HTML instead of XML: the data provider wants their Web pages to be 'visible' and sending only raw data in XML makes them invisible to the user. This makes it difficult for the user agent to identify the relevant fields. To understand HTML pages and provide additional services based on the interpretation of those pages, we need semantic tagging.

For example, in a library catalogue, metadata are presented to the user in HTML. Some fields may be clickable to search for other records with the same contents. Now consider a catalogue record containing an ISBN. The user may want this field presented as clickable in order to find the book on Amazon. The user agent (e.g. a browser extension) has to recognise the ISBN to make it clickable in the user's preferred way. The simplest way, one would think, is to put something like <isbn>12345678</isbn> in the Web page. Browsers will only display 12345678, but the user agent may recognise the <isbn> tag and turn the presentation of that tag into a link. This probably does not work in all browsers, but in Firefox such a tagged field can be processed quite easily.

A new-generation standard for this purpose, usable in most browsers, is COinS [5] (ContextObjects in Spans). With COinS, context information (metadata) is stored in span tags in an HTML page. These spans are recognised by their class attribute "Z3988". An example of a COinS span is:

The author of this book is <span class="Z3988" title="ctx_ver=Z39.88-2004&amp;rft_val_fmt=info:ofi/fmt:kev:mtx:book&amp;rft.isbn=12345678&amp;rft.au=Shakespeare">Shakespeare</span>

The HTML just shows: "The author of this book is Shakespeare". A user agent may use the context information to offer a service with the contents of the span as input. In this example a user agent may change the text in the span to a clickable link to, for instance, Amazon to request a book with ISBN=12345678 or to Google to search for images of Shakespeare.
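A user agent first has to find such spans and decode their `title` attribute, which holds the metadata as key/encoded-value pairs. The following is a minimal Python sketch using only `html.parser` and `urllib.parse`. The sample page is invented and uses `rft.au`, the author key of the Z39.88 book format:

```python
from html.parser import HTMLParser
from urllib.parse import parse_qs

class CoinsParser(HTMLParser):
    """Collect ContextObjects from <span class="Z3988" title="..."> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.context_objects: list[dict[str, list[str]]] = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if tag == "span" and "Z3988" in classes and attrs.get("title"):
            # HTMLParser has already turned &amp; back into &; parse_qs splits the KEV pairs.
            self.context_objects.append(parse_qs(attrs["title"]))

page = ('The author of this book is <span class="Z3988" title="ctx_ver=Z39.88-2004'
        '&amp;rft_val_fmt=info:ofi/fmt:kev:mtx:book&amp;rft.isbn=12345678'
        '&amp;rft.au=Shakespeare">Shakespeare</span>')
parser = CoinsParser()
parser.feed(page)
co = parser.context_objects[0]
print(co["rft.isbn"], co["rft.au"])  # ['12345678'] ['Shakespeare']
```

From `rft.isbn` the user agent could then build a link to a bookshop, or from `rft.au` an image search, according to the user's knowledge base.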

Currently COinS is mostly used to link to an OpenURL link server to find articles that are identified by Context Objects. COinS identifies an object with all its accompanying metadata and can be sent to a link resolver as a single entity. For tagging individual metadata I prefer a more user-friendly form like:

<dc:creator>Shakespeare</dc:creator> and

<dc:identifier>ISBN:12345678</dc:identifier>.

Very recently Dan Chudnov introduced the concept of unAPI [6], which corresponds to the above idea and is also based on the use of spans. Without going into details, this can be seen as a "microformat" for semantic tagging, but with more generic usage than OpenURL alone.

When the response is not XML and the data provider does not make the data machine-readable by semantic tagging, the user agent has to resort to screen scraping. Examples are the various user agents, available as browser extensions or bookmarklets, that interpret the responses of Google Scholar to provide OpenURL links for articles and books [7]. Bookmarklets are pieces of JavaScript that are accessible as a link in the browser's link bar. However, the value and usability of human-readable Web pages will be enhanced when they are also made machine-readable with standardised semantic tags. Providers might be afraid that only the machine-readable part of their data will be used, thereby losing the possibility of branding, but I suspect the opposite will be true. Their Web pages will still be found via Google and will gain added value from the enhanced usability of the presented data, without the original Web page being suppressed.

Extrapolation of the OpenURL Concept

The philosophy presented here can be considered as an extrapolation of the OpenURL concept. OpenURL found its origin in the need to be able to link from a description of an object to the appropriate copy for a specific user. This was realised by using a standard URL request format containing the identification of the object. The host part of the URL is set to the location of the link resolver of the user's institution. This link resolver interprets the metadata in the URL to generate a page with context-sensitive links, for example, a link to the full text of an article or a link to search the title in Google etc.
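To make the request format concrete, here is a sketch of how OpenURL 1.0 key/encoded-value (KEV) parameters for a book could be assembled in Python. The resolver address is a placeholder for the user's institutional link resolver, and only a few metadata keys are shown:

```python
from urllib.parse import urlencode

def build_openurl(resolver_base: str, metadata: dict[str, str]) -> str:
    """Encode book metadata as an OpenURL 1.0 key/encoded-value (KEV) query."""
    params = {
        "url_ver": "Z39.88-2004",
        "ctx_ver": "Z39.88-2004",
        "rft_val_fmt": "info:ofi/fmt:kev:mtx:book",
    }
    # Referent (rft) metadata: the object being described.
    params.update({f"rft.{key}": value for key, value in metadata.items()})
    return f"{resolver_base}?{urlencode(params)}"

# Placeholder resolver: the host part would point to the user's institution.
url = build_openurl("http://resolver.example.edu/openurl",
                    {"isbn": "12345678", "au": "Shakespeare"})
print(url)
```

Only the host part depends on the institution; the rest of the URL describes the object, which is why the same metadata can be sent to any resolver.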

The characteristics of most OpenURL implementations are:

- a Web page, controlled by the user's institution with links to the link resolver

- a link resolver that is controlled by the user's institution

- a vendor-specific knowledge base with information about services to which links might be created depending on the metadata in the OpenURL

The differences between the concept described in this article and the OpenURL concept are:

- Service providers describe their services in a standardised format instead of a vendor-specific format

- Users select their preferred services and store their descriptions in a personal knowledge base rather than using the vendor's knowledge base

- Users control the criteria for invoking the service instead of the user's institution controlling them

- Linking to the service is direct, automatic or on user request, based on user preferences, thereby skipping the OpenURL resolver

Most OpenURL implementations consist of two parts:

- Web pages containing bibliographic metadata from which an OpenURL can be generated

- The link resolver that takes the metadata from this OpenURL to create new context-sensitive links.

In the case of a user agent, the interpretation of the bibliographic record and the generation of the context-sensitive links are combined, so the user agent replaces the OpenURL link resolver. This has some major advantages, such as more direct links. There is little added value in adding a link to an OpenURL resolver when the metadata are machine-readable and the appropriate links can be generated directly in the user's display.

The different types of user agent have their pros and cons. Bookmarklets and browser extensions are bound to a workstation. Ajax implementations suffer from the cross-domain security issue: browsers do not always allow pages taken from one domain to be manipulated by scripts coming from another domain. The disadvantage of user agents that run on a server is that such an application is often not under the user's control. Which method is used depends on the situation, the user's Internet device and the user's preferences.

Of course, not all users will have their own user agent or even know anything about OpenURL. However, Google Scholar offers the user the option to enter a library name in the Google Scholar preferences. This results in a link to that library's OpenURL resolver being added to the responses from Google Scholar. This is a first step towards users becoming aware that they can control context-sensitive linking. In fact, Google is doing here what I would prefer user agents to do.

The Knowledge Database

Our aim is to standardise the format and semantics of the knowledge base so that different types of user agent can use the same knowledge base, regardless of whether the user agent is a browser extension, the XSLT file of an Ajax portal, a bookmarklet or even a conventional link resolver. It should also facilitate the exchange of service descriptions stored in different knowledge bases. It should be easy to write user agents or browser extensions that use the service descriptions to create dynamic links to services using URL-GET, URL-POST or SOAP (Simple Object Access Protocol) [8]. The knowledge base shown in Figure 1 is only a first step in work that is still in progress. Collecting many examples of existing non-standard services and describing them in a machine-readable way might become the basis for a generic service description model.

To enable users to feed their personal knowledge base with service descriptions, it would be useful if service and data providers published their services in exactly the same format as the knowledge base, so that specific entries can be extracted and copied into the user's own knowledge base. This publishing can take the form of registries that hold many service descriptions, but it would also be useful if services explained themselves in a machine-readable way, like the 'explain' record in the SRU protocol. Requesting the explain record is, in fact, requesting the service description. Additionally, a simple indication that a Web page can be accessed with parameters whose syntax is given in a service description would enable user agents to gather relevant services and store their descriptions in a knowledge base.
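If providers publish descriptions in the same format as the knowledge base, copying selected entries becomes a simple tree operation. The sketch below assumes a hypothetical XML format (`<services>`, `<service name=...>`, `<baseUrl>`, `<trigger>`). It also shows the SRU 1.1 explain request, the self-description mechanism mentioned above:

```python
import copy
import xml.etree.ElementTree as ET

# A provider's published descriptions, in a hypothetical format shared with the knowledge base.
PUBLISHED = """<services>
  <service name="Translate"><baseUrl>http://translate.example.org/</baseUrl><trigger>abstract</trigger></service>
  <service name="Google images"><baseUrl>http://images.example.com/search</baseUrl><trigger>creator</trigger></service>
</services>"""

def copy_services(published_xml: str, personal: ET.Element, wanted: set[str]) -> None:
    """Copy selected service entries into the user's personal knowledge base, skipping duplicates."""
    existing = {s.get("name") for s in personal.findall("service")}
    for service in ET.fromstring(published_xml).findall("service"):
        name = service.get("name")
        if name in wanted and name not in existing:
            personal.append(copy.deepcopy(service))
            existing.add(name)

def sru_explain_url(base_url: str) -> str:
    """An SRU service describes itself in response to the explain operation."""
    return f"{base_url}?operation=explain&version=1.1"

personal_kb = ET.Element("services")
copy_services(PUBLISHED, personal_kb, {"Translate"})
print([s.get("name") for s in personal_kb])  # ['Translate']
```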

The use of service descriptions as described above does not remove the need for service-specific registries with service-specific descriptions. Service registries are needed to help user agents find services with specific properties, e.g. SRU services. There are several ways to describe services in a structured and more or less formal way, such as ZeeRex [9], WSDL (Web Services Description Language) [10], OWL-S [11] and the OAI-PMH Identify response. These descriptions are mainly available for services that are already standardised and can be used in conjunction with the knowledge database.

People who are familiar with Web Services will probably ask "why not use UDDI (Universal Description, Discovery and Integration) [12] as the mechanism for sharing service descriptions?". A combination of SOAP, WSDL and UDDI might be considered to be the appropriate technical mechanism for implementing the concept described here. However, this combination does not have a low implementation barrier, and certainly not for creating and using personal knowledge bases.

Metadata

Metadata can be seen as the glue between services: services are integrated through metadata, so it is desirable that these metadata be standardised as much as possible for both input and output. Whether we provide semantic tagging, data in XML or COinS, we must be able to use this data as input, e.g. as URL parameters, and therefore we need standard metadata models and standard names. Because metadata are encoded in different ways, user agents have to map the information in the knowledge base to the appropriate input and output syntax and semantics of different services. For example, a person's name in a Web page might be used in an author or subject search, even though that person need not be the author or the subject. Similarly, the user agent has to map 'dc.title' in an HTML page, 'dc:title' in an XML metadata record and 'atitle' or 'jtitle' in an OpenURL all to the same concept of 'title'. The knowledge base is supposed to be generic enough to cover this.
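A mapping like the one just described can be sketched as a lookup table from encoding-specific field names to generic concepts, plus a second step that fills a target service's own parameter names. The table and the parameter name `q` are hypothetical examples:

```python
# Hypothetical mapping from encoding-specific field names to generic concepts.
FIELD_MAP = {
    "dc.title": "title",      # e.g. a Dublin Core name in an HTML page
    "dc:title": "title",      # an XML metadata record
    "atitle": "title",        # OpenURL article title
    "jtitle": "title",        # OpenURL journal title
    "dc.creator": "creator",
    "dc:creator": "creator",
    "au": "creator",          # OpenURL author
}

def normalise(fields: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group values under generic concepts; unknown field names are ignored."""
    generic: dict[str, list[str]] = {}
    for name, value in fields:
        concept = FIELD_MAP.get(name.lower())
        if concept is not None:
            generic.setdefault(concept, []).append(value)
    return generic

def to_service_params(generic: dict[str, list[str]], param_for: dict[str, str]) -> dict[str, str]:
    """Fill a target service's parameters with the first value of each concept it accepts."""
    return {param: generic[concept][0] for concept, param in param_for.items() if concept in generic}

generic = normalise([("DC.title", "Hamlet"), ("au", "Shakespeare"), ("format", "text")])
print(generic)                                     # {'title': ['Hamlet'], 'creator': ['Shakespeare']}
print(to_service_params(generic, {"title": "q"}))  # {'q': 'Hamlet'}
```

A table like this cannot decide whether a name is an author or a subject; that judgement stays with the service description and the user's preferences.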

Standardisation

By describing different services from different providers that actually do the same thing, it is possible to find common input parameters and common output fields. By specifying these common variables and parameterising the potential differences between implementations, we are almost defining a standard. For example, every search service needs a query and the position of the first record to be presented (the start record). When describing different native search services, we soon find that each of them needs these parameters. A description of that type of service will therefore state which parameter is used for the query and which for the start record. Generic names will be defined for parameters with the same meaning, as well as for parameters that classify the differences between implementations. The description can then be used to define a universal interface to different services, for example a universal interface to SRU and Opensearch [13]. Such a description is in fact almost the specification of a possible standard for a service. Thus describing non-standard services may help the standardisation process.
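As an illustration of such a universal interface, the Python sketch below takes the generic inputs (query, start record) and turns them into a native request for two differently shaped services. The descriptions are hypothetical. The SRU side uses SRU 1.1 parameter names (`operation`, `version`, `query`, `startRecord`); the OpenSearch side uses a URL template with the `{searchTerms}` and `{startIndex}` placeholders:

```python
from urllib.parse import quote, urlencode

# Hypothetical service descriptions mapping generic inputs onto native request formats.
SRU_DESC = {
    "style": "params",
    "base_url": "http://sru.example.org/catalogue",
    "fixed": {"operation": "searchRetrieve", "version": "1.1"},
    "query_param": "query",        # SRU's name for the query
    "start_param": "startRecord",  # SRU's name for the first record to return
}
OPENSEARCH_DESC = {
    "style": "template",
    "template": "http://search.example.com/find?q={searchTerms}&first={startIndex}",
}

def build_search_url(desc: dict, query: str, start: int = 1) -> str:
    """One generic interface (query, start) for differently shaped search services."""
    if desc["style"] == "template":
        return (desc["template"]
                .replace("{searchTerms}", quote(query, safe=""))
                .replace("{startIndex}", str(start)))
    params = dict(desc["fixed"])
    params[desc["query_param"]] = query
    params[desc["start_param"]] = str(start)
    return f"{desc['base_url']}?{urlencode(params)}"

print(build_search_url(SRU_DESC, "hamlet", 11))
# http://sru.example.org/catalogue?operation=searchRetrieve&version=1.1&query=hamlet&startRecord=11
print(build_search_url(OPENSEARCH_DESC, "hamlet ghost", 11))
# http://search.example.com/find?q=hamlet%20ghost&first=11
```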

A question remains over what will happen with overlapping standards like SRU, Opensearch, OpenURL and OAI-PMH [14], or what would have happened if these standards had been developed at the same time, with people contributing to all four. Personally I think that these four standards could easily have been merged into a single standard without losing functionality or adding complexity. When new services are implemented it might be useful to keep the existing standards in mind. Moreover, implementers of a new service should take into account that the service will need to be described and that requests to it might be generated automatically.

The Role of Google

It seems unavoidable that Google will often be used as a starting point for searching information and will compete with metasearch via SRU. The advantage of metasearch via SRU is that search results are available in XML and are therefore easily usable as input for other services. It is questionable whether search engines like Google are willing to provide search results in XML on a large scale, thereby losing every opportunity to present branding information or advertisements. For example, Google inserts the OpenURL link in Google Scholar based on the user's IP address, instead of enabling users to insert their own OpenURL in Google's Web pages. It is to be hoped that Google will support initiatives like COinS or semantic tagging to get the best of both worlds: the presentation of a Web page remains as it is, but at certain points a tag is provided containing information that can be recognised by a browser extension to generate user-controlled context-sensitive links. When COinS is available in Web pages, Google might copy existing COinS to the related item in the results page or, for metadata describing objects, Google might create a COinS itself.

Google could also play a role in finding and identifying service descriptions or other XML files. If Google made its entries for XML files machine-readable, a user agent could pick out the relevant entries automatically and offer to store the base-URLs of these services, or their complete service descriptions, for later use, without the user needing to visit these services directly.

Cross-domain Security

Ideally a user would load a personal browser environment containing a user agent from a central place, so that it is not bound to a specific location. However, this introduces the cross-domain security issue, which is the main showstopper for Ajax-like applications: a user agent loaded from one server is not allowed to manipulate the response from another server. Yet the Ajax concept is needed to allow integration of services by a user agent running on the user's workstation. Browser extensions do not suffer from this problem because they are installed on the user's workstation; for the same reason, however, they are not location-independent.

Running an Ajax-enabled portal should not require changes to the local settings of the workstation. I expect that allowing access to data sources across domains is the only relevant security setting that would currently need to be changed during the browser session to allow a user agent located on any server to access data retrieved from any source. Browser vendors could therefore contribute to the user-controlled integration of services by solving the cross-domain security issue.

Nor is the security issue solved by routing all requests and responses through a central server: that server then acts as a proxy to user-specified services, which may not always be desirable. A possible alternative is to make users responsible for their own security and to help them restrict access to trusted parties.
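Restricting access to trusted parties could, for instance, take the form of a user-maintained allowlist checked before any cross-domain request is made or proxied. A minimal Python sketch follows; the host names are hypothetical:

```python
from urllib.parse import urlsplit

# Hypothetical list of hosts the user has chosen to trust.
TRUSTED_HOSTS = {"sru.example.org", "translate.example.org"}

def may_proxy(target_url: str, trusted: set[str]) -> bool:
    """Allow a request only for HTTP(S) URLs whose host the user has marked as trusted."""
    parts = urlsplit(target_url)
    # urlsplit lower-cases the host name and strips any port number.
    return parts.scheme in ("http", "https") and parts.hostname in trusted

print(may_proxy("http://sru.example.org/catalogue?query=hamlet", TRUSTED_HOSTS))  # True
print(may_proxy("http://unknown.example.net/", TRUSTED_HOSTS))                   # False
```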

Work to Be Done

What is shown and discussed here is only a beginning and reflects work in progress. It needs a little bit of imagination to see how this may develop in the near future:

- Services might be triggered by more complex criteria than the presence of a single metadata field in a structured metadata record

- Services can be activated in the background and only appear when there is something to show rather than bothering the user in advance

- Services can trigger other services, for example converting a location into co-ordinates to display on a map

- Web pages can be analysed (semi-automatically) to discover potential services and generate new service descriptions. Users may add the descriptions to their personal knowledge base (like their favourites)

- By formalisation or standardisation of service descriptions users or user agents may exchange 'working' service descriptions
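The idea of services triggering other services (location to co-ordinates to map) can be sketched as a chain in which each hypothetical service declares the concept it accepts and the concept it produces. The geocoder below is an offline stub with invented data; a real agent would call a remote service:

```python
from typing import Any, Callable

def geocode(place: str) -> tuple[float, float]:
    # Stub standing in for a remote geocoding service; the gazetteer entry is invented.
    gazetteer = {"Exampleville": (52.0, 4.3)}
    return gazetteer[place]

def map_link(coords: tuple[float, float]) -> str:
    lat, lon = coords
    return f"http://maps.example.com/?lat={lat}&lon={lon}"

# Each hypothetical service: (concept it accepts, concept it produces, implementation).
CHAIN: list[tuple[str, str, Callable[[Any], Any]]] = [
    ("location", "coordinates", geocode),
    ("coordinates", "map", map_link),
]

def run_chain(concept: str, value: Any, target: str, services) -> Any:
    """Feed each service's output to the next service until the target concept is produced."""
    for _ in range(len(services) + 1):  # bound the number of steps so a cycle cannot loop forever
        if concept == target:
            return value
        step = next((s for s in services if s[0] == concept), None)
        if step is None:
            break
        _, concept, service = step
        value = service(value)
    raise LookupError(f"no chain of services leads to {target!r}")

print(run_chain("location", "Exampleville", "map", CHAIN))
# http://maps.example.com/?lat=52.0&lon=4.3
```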

Data and service providers are encouraged to:

- Provide their metadata in XML or use semantic tagging of HTML pages with COinS or unAPI

- Provide service descriptions for any service that is usable in this context at a standard location, e.g. http://your.host/services.xml. This is comparable to the way a Web site's icon is provided by placing it as 'favicon.ico' in the Web server's root directory.

- Create registries with service descriptions

- Use user-friendly URLs for access to their services. This will make it easier to describe access to the services and will lower the barrier for implementing user agents that generate these URLs and describe them in a knowledge base.

- Keep in mind that a provided service may be useful to other services; in other words, avoid output that requires complex interpretation and input that is complex to generate

- Use existing standards wherever possible
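The fixed location for service descriptions suggested in the list above means that a user agent can derive it from any page on a site. Here is a small Python sketch. Note that `/services.xml` is this article's proposal, not an established convention:

```python
from urllib.parse import urljoin

# The location proposed in the text; a suggestion, not an existing convention.
SERVICES_PATH = "/services.xml"

def services_description_url(page_url: str) -> str:
    """Where a user agent would look for the service descriptions of the site serving page_url."""
    # An absolute path replaces the page's path and query but keeps scheme and host.
    return urljoin(page_url, SERVICES_PATH)

print(services_description_url("http://your.host/catalogue/record?id=42"))
# http://your.host/services.xml
```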

Conclusion

This article has shown that integration of services can be easy and simple. Most of the ideas presented in this article have been technically possible for a long time. However, there is a visible trend towards access to services being based on HTTP. This allows services to be accessed via an HTTP request from the user's display. In other words, integration can take place in a Web page on the user's desktop rather than via an API in the back office of services. Users may take advantage of this trend and change the presentation of a response to create their own links, bringing enriched information 'mouse clicks closer'. The major change proposed in this article is to standardise the description of non-standard services, to lower the barrier for this type of integration, and to add the relation between services and the criteria that trigger them. When more providers contribute to this approach by providing this type of service description, I think that users will take advantage of this concept by selecting their preferred services and storing them in a personal knowledge base.

I hope this idea moves us towards a more intelligent Web and offers what the semantic web promised but, as I perceive it, has not yet delivered.

References

- The OpenURL Framework for Context-Sensitive Services, http://www.niso.org/committees/committee_ax.html

- Tim O'Reilly, What is Web 2.0, http://www.oreillynet.com/pub/a/oreilly/tim/news/2005/09/30/what-is-web-20.html

- SRU: Search and Retrieval via URL, http://www.loc.gov/standards/sru/

- The European Library portal, http://www.theeuropeanlibrary.org/

- OpenURL COinS: A Convention to Embed Bibliographic Metadata in HTML, http://ocoins.info/

- Dan Chudnov, unAPI version 0, http://www.code4lib.org/specs/unapi/version-0

- Peter Binkley, Google Scholar OpenURLs - Firefox Extension, http://www.ualberta.ca/~pbinkley/gso/

- Simple Object Access Protocol (SOAP) 1.1, http://www.w3.org/TR/2000/NOTE-SOAP-20000508/

- ZeeRex: The Explainable "Explain" Service, http://explain.z3950.org/

- Web Services Description Language (WSDL) 1.1, http://www.w3.org/TR/wsdl

- OWL-S 1.0 Release, http://www.daml.org/services/owl-s/1.0/

- UDDI, http://www.uddi.org/

- Opensearch, http://opensearch.a9.com/

- The Open Archives Initiative Protocol for Metadata Harvesting, http://www.openarchives.org/OAI/openarchivesprotocol.html