Spinning a Semantic Web for Metadata: Developments in the IEMSR

The IEMSR, a metadata schema registry, exists to support the development and use of metadata standards; in practice, what does this entail?

Metadata is not a recent invention. It dates from at least the time of the Library of Alexandria, at which hundreds of thousands of scrolls were described using a series of indexes retaining various characteristics such as line count, subject classification, author name and biography. However, specific metadata standards, schemas and vocabularies are created on a regular basis, falling into and out of favour as time passes and needs change.

Metadata may be described as ‘structured data which describes the characteristics of a resource’, although it is often more concisely offered as ‘data about data’. There is a great deal of specialist vocabulary used in discussing it: standards, schemas, application profiles and vocabularies. Taking the simpler of these terms first: a schema is a description of a metadata record or class of records, which specifies the number of elements that can be included in the record, the name of each element and the meaning of each element. In other words, just as with a database schema, it describes the structure of the record. The Dublin Core (DC) standard defines the schema, but does not define the encoding method that must be used (for example, HTML, XML, DC-Text, DC-XML and so forth). A metadata vocabulary is simply a list of metadata elements (names and semantics).

The Application Profile

The hardest of these concepts to understand is the application profile (AP), and to understand where the AP sprang from, it is useful to look at the development of the Dublin Core metadata standard. DC originated at a 1995 invitational workshop held in Dublin, Ohio, with the aim of developing a simple core set of semantics for categorising Web-based resources. These core elements, 15 descriptors in total, resulted from an ‘effort in interdisciplinary and international consensus building’ [1], and the core goals that motivated the effort were listed as:

- Simplicity of creation and maintenance

- Commonly understood semantics

- Conformance to existing and emerging standards

- International scope and applicability

- Extensibility

- Interoperability among collections and indexing systems

DC required standardisation, and received it, as ISO Standard 15836 [2] and NISO standard Z39.85-2007 [3]. This successfully achieved, later workplans [4] began to reflect the question of satisfying the interests of diverse stakeholders; the problem of refining DC elements; relationship to other metadata models; and the knotty question of DC’s relationship with one technology that has enjoyed sustained hype since the late 90s - RDF. Weibel [4] notes that ‘few applications have found that the 15 elements satisfy all their needs’, adding that this ‘is unsurprising: the Dublin Core is intended to be just as its name implies – a core element set, augmented, on one hand, for local purposes by extension with added elements of local importance, and, on the other hand, by refinement through the use of qualifiers[…] refining the elements to meet such needs.’

The Dublin Core Metadata Initiative (DCMI) added further metadata terms [5]; by 2008, over 50 additional properties were mentioned on the DCMI Metadata Terms Web site. Other terms were undoubtedly in use in the wild - users were invited to create their own ‘elements of local importance’ - and it is likely that many did so, as well as choosing to refine existing elements. To refine an element is to restrict its scope in order to convey more accurately a given semantic; a subtitle represents a refinement of the general title element, and can be treated as a special type of title. Exactly how many metadata terms have been created to extend DC, and how many qualified forms of terms have been created, is a difficult question. There is no master list. Metadata vocabularies are often created for essentially local purposes, and do not always outlive the context of their creation. The incentive to publish is limited, unless in doing so the intention is to submit created terms as candidates for inclusion in DCMI standard vocabularies.
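The statement that a refined element "can be treated as a special type" of its parent can be illustrated with a minimal Python sketch. The `REFINES` table and the `simplify` function are hypothetical names introduced here for illustration, and the sample record is invented; this is not code or data from the IEMSR.

```python
# Hypothetical table: each refined element points at the broader element it refines.
REFINES = {
    "subtitle": "title",  # the example given in the text above
    "created": "date",    # illustrative assumption
}


def simplify(record):
    """Map every refined element back to its broader parent element.

    A consumer that only understands the broader elements can still use the
    values, at the cost of losing the more specific meaning.
    """
    simple = {}
    for element, values in record.items():
        parent = REFINES.get(element, element)
        simple.setdefault(parent, []).extend(values)
    return simple


# Invented sample record using two refined elements.
record = {
    "title": ["Kestrel"],
    "subtitle": ["Perching on a road sign"],
    "created": ["2008-10-01"],
}
simplified = simplify(record)
print(simplified)
```

The subtitle survives as an ordinary title value, which is the sense in which a refinement only narrows meaning rather than introducing something unrelated.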

The application profile essentially represents a plural form of the instruction to refine or extend an element for a given purpose, and is designed to customise a set of terms for a given context of use. As the Dublin Core Application Profile Guidelines explain it [6], a DC Application Profile (DCAP) is ‘a declaration specifying which metadata terms an organization, information provider, or user community uses in its metadata […] A DCAP may provide additional documentation on how the terms are constrained, encoded or interpreted for application-specific purposes.’ Rather than creating a new set of terms, existing terms are customised via whichever mechanism is most appropriate; the overall set of documentation, instructions and metadata terms is collectively referred to as an AP.
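One way to picture an application profile as a declaration of terms plus constraints is the following Python sketch. It is a simplified illustration under stated assumptions: the class names `TermUsage` and `ApplicationProfile`, the particular constraints (mandatory, repeatable, allowed values) and the sample profile are all hypothetical, and do not reflect the DCAP guidelines in full or the IEMSR data model.

```python
from dataclasses import dataclass, field


@dataclass
class TermUsage:
    """How one existing metadata term is used within a profile (hypothetical)."""
    term: str
    mandatory: bool = False
    repeatable: bool = True
    allowed_values: frozenset = frozenset()  # empty means unconstrained


@dataclass
class ApplicationProfile:
    """A named set of term usages; no new terms are defined here."""
    name: str
    usages: dict = field(default_factory=dict)

    def add(self, usage):
        self.usages[usage.term] = usage

    def validate(self, record):
        """Return a list of human-readable problems found in a record."""
        problems = []
        for term, usage in self.usages.items():
            values = record.get(term, [])
            if usage.mandatory and not values:
                problems.append(f"{term}: mandatory term is missing")
            if not usage.repeatable and len(values) > 1:
                problems.append(f"{term}: term may not be repeated")
            for value in values:
                if usage.allowed_values and value not in usage.allowed_values:
                    problems.append(f"{term}: value {value!r} is not permitted")
        for term in record:
            if term not in self.usages:
                problems.append(f"{term}: term is not part of the profile")
        return problems


# Invented profile and record, for illustration only.
profile = ApplicationProfile("Image collection (hypothetical)")
profile.add(TermUsage("dc:title", mandatory=True, repeatable=False))
profile.add(TermUsage("dc:type", allowed_values=frozenset({"Image"})))
profile.add(TermUsage("dc:creator"))

record = {"dc:title": ["Kestrel"], "dc:type": ["Photo"], "dc:format": ["image/jpeg"]}
problems = profile.validate(record)
print(problems)
```

The profile reuses existing terms and only records how they are constrained, which mirrors the point that an AP customises terms rather than creating new ones.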

One advantage of all this is that the number of new elements created is potentially controlled by the extensibility of existing elements. A disadvantage is the weight of documentation and analysis required to encode the transformations formally. Again, there is limited incentive to publish findings.

An additional purpose of the application profile that has recently gained in prominence is the imposition of a complex entity-relationship model embedded within the data model, an approach that is not without its critics; this will be examined later in this article. Those interested in the recent evolution of the structures underlying Dublin Core from a simple set of shared term definitions to its present state should start with the DCMI recommendation on the subject of interoperability levels in Dublin Core metadata [7].

The IEMSR: A Metadata Registry on the Semantic Web

The IEMSR began as an early adopter of various Semantic Web technologies, and was placed firmly on the bleeding edge. Its development, however, was motivated by some very pragmatic concerns: the need to provide an easy place to find information about metadata schemas, vocabularies and application profiles; to promote use of existing metadata schema solutions; to promote interoperability between schemas as a result of reuse of component terms across applications (the ‘Holy Grail’ underlying the application profile); to reduce the amount of duplication of effort amongst metadata developers and implementers and, preferably, to provide a means to manage evolution of schemas.

Storing and curating existing knowledge was and remains a priority; that is, information about APs and metadata vocabularies, their creators, the processes (if applicable) by which they were created, and information about their development and use over time. Application profiles are in most cases joint efforts, and are time-consuming and expensive to build and test. The schema registry can store the output in a machine-readable form and make it available via a standard query interface.

The Role of Registries

The software engineering process that underlies application profile development is a complex one and has not received a lot of attention in the literature. A schema registry is able to become a centrepoint for this process. Registries are potentially able to support the development of an AP. Registries may also support APs in a deployment-time process. A natural first step in creation of a data repository is to consider the data formats and metadata vocabularies and schemas to be used. A registry offers a central point of call at which many APs may be found in a shared and easy-to-query format.

With this comes the general aim of developing and supporting good engineering methods for developing and testing metadata schemas, elements and application profiles. There is significant investment by the JISC in application profiles today: the IEMSR Project sees an opportunity to improve the quality of the resulting artefacts by applying well-known engineering processes such as iterative development models and encouraging frequent engagement with users at each stage. For this to be possible we need to develop and test appropriate means of evaluation and prototyping.

The IEMSR Project

The IEMSR Project began in January 2004 and completed its second phase in September 2006. Phase 3 began in October 2006 and is scheduled to end in the summer of 2009. The Registry consists of three functional components:

- Registry Data Server: an RDF application providing a persistent data store and APIs for uploading data (application profiles) to the data store and for querying its content

- Data Creation Tool: supports the creation of RDF Data Sources (application-specific profiles) for use by the Registry Data Server

- User Web Site Server: allows a human user to browse and query the data (terms and application profiles) that are made available by the IEMSR Registry Data Server

The Registry is designed for the UK education community where both Dublin Core (DC) and IEEE Learning Object Metadata (LOM) standards are used. IEMSR focuses on both DC and IEEE LOM application profiles, although the data creation tool in particular presently focuses on supporting Dublin Core; to build targeted support for LOM requires us to find an appropriate user group with which to work. Phase 3 of the project concentrated on consolidation, evaluation and improvement: the present level of usability, accessibility and stability of project outputs, components and APIs was evaluated, updated requirements were developed, and the results were fed back into further development through several iterations. The wider registry community was contacted via the Dublin Core Metadata Initiative (DCMI); the latter stages of the phase concentrated on dissemination and encouraging closer links with stakeholders and other schema registry developers. Partly as a result of this, the project grew to concentrate more on direct interaction with intermediate developers (that is, developers primarily engaged in non-registry related tasks) via provision of relevant APIs and the creation of documentation, training sessions and exemplars.

A Registry Endpoint for the Semantic Web

The IEMSR rapidly became one of a new breed of projects: a Semantic Web project. The basic technology underlying the Semantic Web is the Resource Description Framework (RDF), which enables the encoding of arbitrary graphs (i.e. any sort of graph or network data structure involving nodes and relationships between nodes). It is by no means the only way of expressing graph structures, but it is the means preferred by the World Wide Web Consortium (W3C). The set of technologies and design principles collected together under the name ‘Semantic Web’ is intended to provide a means of encoding information as machine-processable ‘facts’. Hence, one might expect that encoding information about metadata standards into RDF would permit them to be available for use by both humans and software agents.
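The idea of a graph encoded as machine-processable ‘facts’ can be sketched without any RDF library: each fact is a (subject, predicate, object) triple, and a query is a pattern with wildcards. The `ex:` prefix, the sample triples and the `match` function below are illustrative assumptions rather than IEMSR data or a real RDF toolkit API.

```python
# A tiny graph held as a set of (subject, predicate, object) triples.
# The identifiers are invented for illustration.
triples = {
    ("ex:kestrel.jpg", "dc:title", "Kestrel"),
    ("ex:kestrel.jpg", "dc:creator", "E Tonkin"),
    ("dc:title", "rdfs:label", "Title"),
}


def match(graph, s=None, p=None, o=None):
    """Return the triples that fit a pattern; None acts as a wildcard."""
    return sorted(
        t for t in graph
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    )


# Everything known about one resource.
about_image = match(triples, s="ex:kestrel.jpg")
# Every human-readable label in the graph.
labels = match(triples, p="rdfs:label")
print(about_image)
print(labels)
```

Note that statements about a resource and statements about the vocabulary term `dc:title` itself live in the same graph, which is what makes a registry of terms a natural fit for this representation.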

The IEMSR was conceived at approximately the same time as the idea of RDF data stores gave rise to a sudden proliferation of proposed and prototype RDF query languages: DQL, N3QL, R-DEVICE, RDFQ, RDQ, RDQL, RQL/RVL, SeRQL and Versa, to name but a few, as well as SPARQL, which has since emerged as a W3C Recommendation. IEMSR’s choice of query language was related mostly to strong links between the Institute for Learning and Research Technology (ILRT), technical lead on IEMSR in the earlier phases, and the SPARQL development effort. Several years later, a core activity in Phase 3 of IEMSR has been to collect evidence regarding the appropriateness of this choice, and its implications in respect of issues such as practical long-term sustainability and portability of the IEMSR codebase.

Advantages

SPARQL was one of several possible query languages; however, the use of RDF itself was essentially a given. The files thus created were entirely standard, and so the risk of the data created during the project itself becoming obsolete was low. The modular design resulting from use of an RDF data store and associated query language was very flexible, and offered an excellent potential for reusability of the data and of service functionality in other contexts.

The Semantic Web has been widely described as the future for data publication and reuse on the Web, so this approach also meant that the project was in line with current and expected future developments on the Web. It additionally meant that the project would benefit from compatibility with other Semantic Web datastores that may be of relevance (for example, searching could be augmented via information gleaned from thesauri).

Disadvantages

SPARQL was a very new technology, and adopting it so early on meant living on the bleeding edge. As such, a lot of things could and did go wrong: software that failed to compile on new platforms, bugs, changes in protocol and a collection of other problems.

In terms of accessibility for developers, familiarity with RDF itself is far from widespread, whilst developers with prior experience of using SPARQL are exceedingly rare. Crafting an appropriate SPARQL query can be difficult, for several reasons. Firstly, a very good knowledge of an existing data model is required to do the job well; its flexibility can be seen as a blessing and a curse, because from the point of view of the developer, crafting a sensible question about a given dataset requires (beyond a knowledge of SPARQL syntax) a good understanding of what represents a sensible query in a given context. Of course, the same is true of databases, but the relatively restrictive model offered by a table – or series of joined tables – tends to offer pointers to the developer about how best it should be accessed.

There is no obvious limit to the complexity of a SPARQL query – other than the time it takes to execute – and this can represent a real difficulty for those running a public SPARQL endpoint. A badly written query can render a system unresponsive for some time, depending on system settings, even on a system that contains only a few tens of thousands of statements. Denial of service attacks on publicly available SPARQL endpoints are easy to produce, accidentally or intentionally. For this reason, as with conventional relational database services, there is a potential for direct access to the endpoint to be made available only via a set of login credentials, and for simple Representational State Transfer (REST) endpoints (analogous to stored queries in standard relational databases) to be made available for simple and everyday types of queries which could be added on request following testing.
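The ‘stored query’ approach described above might look something like the following Python sketch, in which callers can only choose a vetted query by name and supply a search string. Everything here is an assumption made for illustration: the `STORED_QUERIES` catalogue, the query text, the `MAX_LIMIT` cap and the function names are hypothetical, and the `CONTAINS` function assumes an endpoint that supports SPARQL 1.1.

```python
from string import Template

# Hypothetical catalogue of vetted queries; callers never send raw SPARQL.
STORED_QUERIES = {
    "label-search": Template(
        "PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>\n"
        "SELECT ?term ?label WHERE {\n"
        "  ?term rdfs:label ?label .\n"
        '  FILTER CONTAINS(LCASE(STR(?label)), LCASE("$text"))\n'
        "}\n"
        "LIMIT $limit"
    ),
}

MAX_LIMIT = 100  # assumed cap on result size, to bound the cost of a request


def escape_literal(text):
    """Escape characters that would otherwise end a SPARQL string literal."""
    return text.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def build_query(name, text, limit=20):
    """Fill in a stored query; unknown names are rejected outright."""
    if name not in STORED_QUERIES:
        raise KeyError(f"unknown stored query: {name}")
    limit = max(1, min(int(limit), MAX_LIMIT))
    return STORED_QUERIES[name].substitute(text=escape_literal(text), limit=limit)


query = build_query("label-search", 'ti"tle', limit=5000)
print(query)
```

Because the shape of each query is fixed in advance, its cost can be tested before it is published, which is the main advantage over accepting arbitrary queries from anonymous users.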

Defining a Data Model

Which data model to use is perhaps the most difficult question facing the DC Registry community in general, as the data model underlying DC is itself a question that has remained current for several years. In a sense, the search to ‘do it right’ may be missing the point; rather, doing it consistently and adequately would suffice. The present-day data model in use in the IEMSR dates back several years, and is as such out of date. Today, this remains an issue. Inter-registry sharing of information in particular requires availability of a standard with which both registries are compatible. Consequently, a Dublin Core Registry Task Group is presently looking into interoperability concerns and potential solutions.

Building a Desktop Client

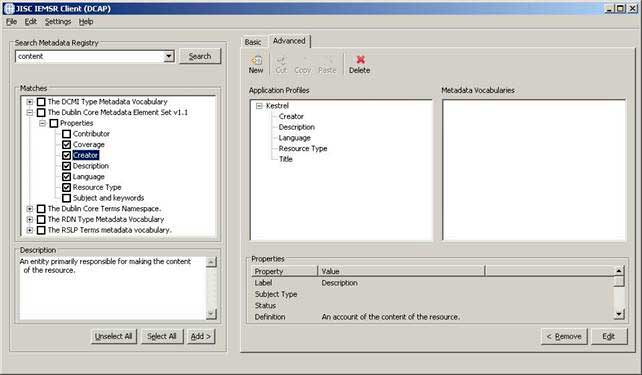

The desktop client is designed in order to simplify the creation of new metadata vocabularies and application profiles. The example screenshot shown in Figure 1 shows the use of the IEMSR desktop client to create a simple application profile for contain the metadata shown in Figure 2.

Figure 1: Building an application profile

The client is written in Java and uses the Jena Semantic Web framework and the SWT library, a GUI framework developed by the Eclipse Foundation. SWT is an alternative to the standard Java GUI library, Swing, and the original choice may have related to cross-platform compatibility. The client makes use of a standard set of Java libraries for functionality such as logging and XML parsing.

The core functionality of the desktop client is the creation of a valid RDF document, describing the application profile or metadata vocabulary that has been created. For RDF-related activities, such as the creation of a valid RDF model, the IEMSR GUI client makes use of the Jena library, which is a well-known open source Semantic Web toolkit developed by Hewlett Packard (HP) [8].

Recently, a static code verification tool, PMD [9], has been added to the desktop tool. This type of tool is designed to discover potential errors in code without executing it, simply by searching for error-prone patterns. A further potential benefit is that its output can inform improvements in code style.

User Testing

Initial user testing showed that the classical approach to distribution of Java software (essentially, a Jar archive to be executed by running a script) causes difficulties for users, especially on Windows, where .exe or installable software files are usually expected. As a result, an executable file is now generated for the Windows platform.

Development across all major OS platforms without automated tests is quite problematic, as the generation of an installable Windows executable file illustrates. As the generation tool was only available for Windows, references to it on other platforms naturally caused the build process to stall. Lack of automated tests also makes any refactoring quite a headache, because it is impossible to check whether changes have broken code somewhere else.

There were a few comments from the testers that the client application design is not user-friendly: in some cases the current state of the application is not clearly visible, operational buttons are either hidden or obscure, and the progress that users have made through their task so far is not very well indicated. It is necessary to take into account that the majority of IEMSR users are not very experienced in using software applications, so the need for more visual help and guidelines in the application has been recognised.

Early user testing was discouraging, in that it showed certain limitations of the original software design process; in particular, software stability was an issue, a great deal of the vocabulary and imagery used was based on the assumption that the user was familiar with DC terminology, and screens that in practice required users to adhere to a specific workflow did not indicate this. These issues and others were identified through task-based user testing and heuristic evaluation [10].

User reaction to later iterations of the software was in general very positive, showing that the software had improved significantly in both usability and functionality. Subjects succeeded in completing tasks. Composing application profiles from a series of existing elements proved easier to understand, and represented notably less work than creating everything from scratch. On the other hand, we discovered that even in later iterations of the software, search results were not easy to understand and use. Creation of novel elements is not at present supported unless defining a novel vocabulary (i.e. this task cannot be completed concurrently with the creation of a new AP). Tests of metadata element edit functionality showed that usability issues also remained in that area.

Design of a complex application should include guidance for the user; at each step of interaction, the system should indicate or hint at the type of answer or value that it would ‘like’ to receive. Providing an initial value in interface elements such as search fields (for example, the word ‘Search’ may appear in a search box) ensures that the user recognises that the field is related to the ‘Search’ button, which may not otherwise be clear to a novice user. Another related feature of user-friendly applications would be an introductory ‘wizard’ mode, where information about how to make use of the system is explained in a step-by-step manner. The downside of having a great deal of visible help information is the possibility that it may become excessive; users, after some experience of the system – once they have learnt about the system and its behaviour and established a routine in their use of the software – may become annoyed by the wizard mode, so a means should be provided to reduce the verbosity of the system, and switch off all help hints. This introduces the idea of multiple application operating modes:

- User is learning to use the software

- User is competent with system functionality

The initial design did not observe all of Nielsen’s heuristics [10]; for example, a great deal of recent development work concentrated on improved error-handling, relating to the heuristic, ‘Help users recognize, diagnose, and recover from errors: Error messages should be expressed in plain language (no codes), precisely indicate the problem, and constructively suggest a solution.’

At present, the GUI client produces ‘flat’ APs, that is, application profiles without any structure. This is not a problem as such, but means that it is appropriate for some use cases and not for others, such as application profiles like SWAP that are currently under development, and which use complex entity-relationship models. A prototype that gives an idea of what a ‘structural’ application profile creation kit might look like is discussed later in this article.

Building a Web Site to Support Browsing of IEMSR Data

The choice was made to use Java + Struts + Tiles for this work. JSP has many advantages, as does Java itself; a plethora of libraries that can be used for dealing with almost anything in Java (JSTL is now used on the Web site), and easy XML parsing, to name two. The Web application architecture provides an effective build and packaging system.

On the downside, the resulting code is quite difficult to use as an ‘exemplar’ - that is, the use of complex libraries and toolkits tends to make the code more difficult to read and to reuse, and requires significant background knowledge about the component elements and frameworks. It does not lead to code that is understandable at a glance. Due to the nature of SPARQL, it is not always easy to debug errors, especially intermittent bugs.

The most common issues that came up during user testing were:

- Difficulty understanding the actual data model represented in the IEMSR.

- Difficulty using the search function: it is not surprising that this problem exists, as the number of terms defined in the IEMSR is very small by comparison with a user’s vocabulary. Therefore, the user is not very likely to hit on appropriate terms without help.

- Missing or incomplete data.

- URLs that are not persistent; sessioning IDs and session timeouts.

- Errors (such as searches that provide no results) do not always produce informative messages, or suggest other possible options.

Several significant issues have been identified during user testing:

- Searching for keywords can be quite difficult, and the ranking is not very obvious. One proposed remedy suggests that a thesaurus, or search suggestion, might improve search efficiency.

- The system does not handle internationalisation; although many translations of core terms are stored, they are not visible to the user other than via the SPARQL interface.

- Persistent URLs are absolutely indispensable if anything is to be built on the schema registry as a platform.

- Data should be editable (or a mechanism provided for recommending improvements) at the Web site.
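The thesaurus and search-suggestion remedy proposed in the list above can be sketched with Python's standard `difflib` module. The term list is a small subset of DC element names, while the `THESAURUS` mapping, the similarity cutoff and the `suggest` function are hypothetical choices made for this example; they do not describe how the IEMSR Web site ranks results.

```python
import difflib

# A small subset of registered term names, for illustration.
REGISTERED_TERMS = [
    "title", "creator", "subject", "description", "publisher", "date", "rights",
]

# Hypothetical thesaurus mapping everyday words to registered terms.
THESAURUS = {"author": "creator", "name": "title", "copyright": "rights"}


def suggest(query, terms=REGISTERED_TERMS, thesaurus=THESAURUS):
    """Return registered terms the user may have meant, most likely first."""
    query = query.strip().lower()
    if query in terms:
        return [query]
    suggestions = []
    if query in thesaurus:
        suggestions.append(thesaurus[query])
    # Fall back to spelling similarity for near misses such as typos.
    for candidate in difflib.get_close_matches(query, terms, n=3, cutoff=0.6):
        if candidate not in suggestions:
            suggestions.append(candidate)
    return suggestions


print(suggest("author"))
print(suggest("creater"))
```

A search that would otherwise return nothing can then offer "Did you mean ...?" options, which also addresses the complaint that empty result sets give the user no way forward.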

The Web site could also provide a ‘search query construction’ interface for the creation of custom SPARQL searches. Users unfamiliar with SPARQL frequently find that it represents a high barrier to entry, and a custom query construction functionality could represent a useful learning tool to overcome this barrier.

Building Demonstrators for the IEMSR

As part of the wider remit of user engagement, in both a human facing sense, and in its capacity as a machine-accessible service, IEMSR has a clear opportunity to become a provider of a novel series of tools; some of which have been prototyped at UKOLN and which exploit the link between schemas or elements and evidence of their actual, real-world usage in exemplar records. For example, automated methods are able to measure some aspects of the consistency and variance of metadata record content and therefore identify elements that are error-prone or frequently confused. They provide useful feedback to the repository manager and to the application developer.
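As a rough illustration of what measuring the consistency and variance of record content could involve, the Python sketch below computes, for each element, how often it is filled in and how many of its values share the most common ‘shape’. The shape heuristic, the function names and the sample records are assumptions made for this example, not a description of the UKOLN prototypes.

```python
import re
from collections import Counter


def shape(value):
    """Reduce a value to a coarse pattern: each digit becomes 9, each word becomes a."""
    return re.sub(r"[A-Za-z]+", "a", re.sub(r"\d", "9", value))


def element_report(records):
    """For each element, report its fill rate and the share of values
    that follow the most common shape."""
    total = len(records)
    values = {}
    for record in records:
        for element, value in record.items():
            values.setdefault(element, []).append(value)
    report = {}
    for element, vals in values.items():
        dominant = Counter(shape(v) for v in vals).most_common(1)[0][1]
        report[element] = {
            "fill_rate": len(vals) / total,
            "consistency": dominant / len(vals),
        }
    return report


# Invented sample records: one date uses a different format, one is missing.
records = [
    {"title": "Kestrel", "date": "2008-10-01"},
    {"title": "Heron", "date": "01/10/2008"},
    {"title": "Swift", "date": "2008-10-03"},
    {"title": "Wren"},
]
report = element_report(records)
print(report)
```

In this invented sample the date element stands out on both measures, which is the kind of signal that could be passed back to a repository manager or application developer.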

There are many opportunities to learn from exemplar records, and also from the processes by which they are created and used. Linking the IEMSR with the deposit process of metadata enables contextually relevant information stored within the IEMSR to be provided to the user. In return, retrieving data of any sort (such as indicators of user activity) gathered via the user interface enables feedback to be returned to the server, and hence eventually to be reported in an aggregated form to the repository manager. Of course, it is not possible to analyse an application profile consistently via what amounts to evaluation of a single interface or implementation; much of what is measured will be dependent on the interface by which it is accessed. Clearly, the provision of services such as these is strongly dependent on excellent integration with other services, such as aggregators and repository instances.

Of course, a very significant problem for many services - and the IEMSR is no exception to this - is that of encouraging enough initial interest to secure the data necessary to render the service useful. Interest should be actively solicited through the provision of useful services, appropriate marketing and a consistent focus on relevance and practicality. Interest can also be generated by lowering the barrier to entry for end-users. This might be achieved through actions such as user-centred review of the service and its documentation, attention to the accessibility and relevance of the service, and related toolsets and dissemination to the wider community. There is reason to explore the potential of methods for partial automation of the application profile, element, and encoding development process. Such work may also represent a valuable form of development.

Whilst we will not cover all of the demonstrators currently in place in this article, we showcase a couple here to give a quick idea of the sorts of activities that the IEMSR can support. A detailed description of the underlying development and structure falls outside the scope of this article.

Constructing Complex Application Profiles

As we discussed in the introduction to this article, the best-known application area of DC as a standard is the idea of a fairly small vocabulary of element names, such as ‘Title’, ‘Creator’ and so on, applied to objects in an extremely straightforward manner. An image, for example, may have a title, a creator, a description, a date, and a set of rights associated with it.

| Element | Value |
| --- | --- |
| Title | Kestrel |
| Creator | E Tonkin |
| Description | A kestrel perching on a road sign |
| Date | 2008-10-01 |
| Rights | Copyright E Tonkin, 2008 |
| Identifier | http://… |

Figure 2: One resource, one set of metadata associated

In practice, this very simple use of metadata probably does satisfy a very large percentage of immediate user needs; it provides an option, effectively, to tag the object in a semi-formal manner and using a fairly standard syntax. However, this represents a limitation in scenarios where simple object-level metadata are not enough to express what the information architect wants to say. For an example of this, consider the enormous and long-standing popularity of Bram Stoker’s best-known novel, which has given us generations of derivatives and re-interpretations: Nosferatu, the Hammer Horror Dracula films, Dracula-the Musical, Dracula-the Ballet, Francis Ford Coppola’s Bram Stoker’s Dracula (the film), and, closing the circle, the novelisation of Francis Ford Coppola’s Bram Stoker’s Dracula.

How should these be sensibly catalogued? One natural approach to the question is to ask whether they are all the same type of thing: after all, Mr Stoker’s original novel was a unique and fairly imaginative work, although from today’s perspective it may owe a great deal to the penny dreadful traditions, J. Sheridan Le Fanu’s Gothic horror Carmilla, Rymer’s Varney the Vampire, or, The Feast of Blood, and so on. Still, Stoker is popularly seen as ‘patient zero’ for the modern epidemic of vampire fiction, some of it wildly different in emphasis and tone from his original work. By comparison, adaptations of Stoker’s novel such as films, musicals, ballets, novelisations of the film and so on generally appear to be derivative, more or less faithful versions of the source novel. This observation alone provides us with two separate levels of ‘thing’: an original creative work (Varney, Carmilla, Dracula, Interview with the Vampire…), and an adaptation of such a work to a particular form, audience and time (Bram Stoker’s Dracula: The Graphic Novel, Hammer Horror Dracula, etc.).

Whether or not the specific typology sketched above is useful, the general principle of a model that portrays items and relations using multiple different types of thing, or entity, is at the basis of a large proportion of the application profile development efforts currently under way. Documentation on the subject often speaks grimly of the development of an entity-relationship model: in other words, a list (often a hierarchy) describing the types of thing involved in a domain, and the ways in which those types of thing are related. Rather than creating novel entity-relationship models, it is frequently suggested that the well-known FRBR model be applied (a discussion of this appears in Ariadne issue 58 [11]); either way, supporting users in creation of this sort of application profile is clearly a quite different sort of problem to that of supporting development of a simple ‘flat’ AP.
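The two levels of ‘thing’ described above can be written down as a very small entity-relationship model. The Python sketch below is a deliberately simplified illustration and not FRBR or any published application profile; the class names `Work` and `Adaptation`, their fields and the `adaptations_of` helper are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Work:
    """An original creative work."""
    title: str
    creator: str


@dataclass(frozen=True)
class Adaptation:
    """A version of a work in a particular form; 'adapts' is the relationship."""
    title: str
    form: str
    adapts: Work


dracula = Work("Dracula", "Bram Stoker")
carmilla = Work("Carmilla", "J. Sheridan Le Fanu")

catalogue = [
    Adaptation("Nosferatu", "film", dracula),
    Adaptation("Bram Stoker's Dracula", "film", dracula),
    Adaptation("Bram Stoker's Dracula: The Graphic Novel", "graphic novel", dracula),
]


def adaptations_of(work, items):
    """List the titles of all adaptations that point back to the given work."""
    return [item.title for item in items if item.adapts == work]


print(adaptations_of(dracula, catalogue))
print(adaptations_of(carmilla, catalogue))
```

Each entity type carries its own set of properties, so a profile of this kind has to describe several record structures and the links between them, which is why a ‘flat’ editing tool is not sufficient.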

To support this development effort, a prototype [12] was created that is intended to permit the creation of structurally complex application profiles, a ‘structural’ application profile creation kit.

Internationalisation Concerns

The IEMSR contains information about certain application profiles in several languages; for example, the DC Core Elements are currently available in no fewer than 15 languages [13], including English, French, Italian, Persian and Maori.

This in itself provides a useful resource for interface development; to illustrate this we have created a prototype [14] that demonstrates a very simple process by which the IEMSR can be used to provide internationalisation for a form.
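The general idea of labelling a form from language-tagged term labels can be sketched as follows. This is not the prototype referred to above: the `LABELS` table stands in for data that would be retrieved from a registry, its contents are illustrative values typed in by hand, and the `form_labels` function and its fallback rule are assumptions.

```python
# Illustrative language-tagged labels; in practice these would come from a registry.
LABELS = {
    "dc:title": {"en": "Title", "fr": "Titre", "it": "Titolo"},
    "dc:creator": {"en": "Creator", "fr": "Créateur"},
}


def form_labels(elements, lang, labels=LABELS, fallback="en"):
    """Choose a label for each form field in the requested language.

    Falls back to the fallback language, then to the raw element name,
    so that a missing translation never leaves a field unlabelled.
    """
    result = {}
    for element in elements:
        translations = labels.get(element, {})
        result[element] = (
            translations.get(lang) or translations.get(fallback) or element
        )
    return result


fields = ["dc:title", "dc:creator"]
print(form_labels(fields, "fr"))
print(form_labels(fields, "it"))
```

The fallback chain matters in practice, because translations of a vocabulary are rarely complete for every language at the same time.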

Conclusion and Further Work

We have reviewed the stability of the backend service itself and decided that the data collection itself is a valid process, that the data is portable and can potentially be expressed and searched using any of a number of mechanisms, and that at present the SPARQL interface fulfils the immediate need identified. Given the limited familiarity with SPARQL we have produced a series of lightweight demonstration scripts for developers to adapt to their purposes.

We intend to continue the development effort, moving from the relatively stable, well-understood and documented setup that we now have towards a lighter, simpler service based around REST and other Web standards currently being reviewed by the JISC Information Environment. As a result we expect to improve, simplify and encourage practical interoperability with other JISC services and developments. As a result of experience during this phase, the IEMSR can operate as a centrepoint for collecting, evaluating and annotating information, both in terms of software and development needs and in terms of user engagement, for the purposes of supporting practical engineering methods for developing schemas, elements and APs. We look towards using the IEMSR as a basis for developing other tools that are more immediately useful to the user, i.e. demonstrators such as fast prototyping tools, analysis of existing datasets (description rather than prescription) and user interface-level innovations based on IEMSR data. To see the IEMSR as a standalone service and product is constrictive. It is appropriate to consider a variety of use cases including but not limited to those already identified, and workflows that contain application profile or metadata descriptions as a component. Many of these are opportunistic in that the feasibility of processes often results from novel developments, as in the case for example of the SWORD deposit mechanism. Hence, we will emphasise close engagement with the developer community.

At the end of a three-year roller-coaster ride of alternate cheerfulness and nail-biting nervousness, one feels that something should be said about the IEMSR's status as one of the earliest of a relatively small number of JISC projects based around Semantic Web technologies. It is not lost on us that early adopters are at risk of, metaphorically speaking, buying Betamax. We suggest that the choice to base a project on what at the time were early draft standards and prototype software is not one that should be taken lightly. As it happens, SPARQL has grown a modest but dedicated user community, and a number of other production SPARQL databases are now available, giving us a good and improving expectation of sustainability. For those faced with a similar choice, there is no perfect answer beyond those typically found in any project’s risk analysis. Will the technology gain traction or disappear? Will the specificity of the technology and knowledge base exacerbate the difficulties inherent in recruitment? Do the opportunities outweigh the risks?

In the latter stages of any large development process, the realisation inevitably dawns that there are no simple answers; very often a project proves to be more complex in practice than it initially appeared. In compensation, however, a better understanding of the parameters of the problem is gained, which can be usefully applied in building on the existing code base.

Acknowledgements

Thanks go to our UKOLN colleague Talat Chaudhri for his useful comments and suggestions during the writing of this article.

References

- Weibel, S., Kunze, J., Lagoze, C. and Wolf, M. (1998). Dublin Core Metadata for Resource Discovery. Retrieved on 20 April 2009 from http://www.ietf.org/rfc/rfc2413.txt

- Information and documentation - The Dublin Core metadata element set. ISO Standard 15836

- ANSI/NISO Standard Z39.85-2007 of May 2007 http://www.niso.org/standards/z39-85-2007/

- Weibel, S. (1999). The State of the Dublin Core Metadata Initiative: April 1999. D-Lib Magazine 5 (4) ISSN 1082-9873. Retrieved on 20 April 2009 from http://www.dlib.org/dlib/april99/04weibel.html

- DCMI Usage Board (2008). DCMI Metadata Terms. Retrieved on 20 April 2009 from http://www.dublincore.org/documents/dcmi-terms/

- Baker, T., Dekkers, M., Fischer, T. and Heery, R. Dublin Core application profile guidelines. Retrieved on 20 April 2009 from http://dublincore.org/usage/documents/profile-guidelines/

- DCMI: Interoperability Levels for Dublin Core Metadata http://dublincore.org/documents/interoperability-levels/

- HP Tools http://www.hpl.hp.com/semweb/tools.htm

- PMD. Retrieved on 20 April 2009 from http://pmd.sourceforge.net/

- Nielsen’s heuristics for user interface design http://www.useit.com/papers/heuristic/heuristic_list.html

- Talat Chaudhri, “Assessing FRBR in Dublin Core Application Profiles”, January 2009, Ariadne Issue 58 http://www.ariadne.ac.uk/issue58/chaudhri/

- UKOLN - Projects - IEMSR - OSD Demo http://baiji.ukoln.ac.uk:8080/iemsr-beta/demos/OSD/

- Translations of DCMI Documents. Retrieved on 20 April 2009 from http://dublincore.org/resources/translations

- Multilingual input form http://baiji.ukoln.ac.uk:8080/iemsr-beta/demos/lang/