IMPACT Final Conference 2011

Marieke Guy reports on the two-day conference looking at the results of the IMPACT Project in making digitisation and OCR better, faster and cheaper.

The IMPACT Project (Improving Access to Text) [1] was funded by the European Commission back in 2007 to look at significantly advancing access to historical text using Optical Character Recognition (OCR) methods. As the project reaches its conclusion, one of its key objectives is sharing project outputs. The final conference was a two-day event held on 24-25 October 2011 at the British Library in London, where the project demonstrated findings, showcased tools and presented related research in the field of OCR and language technology.

IMPACT Project Director Hildelies Balk-Pennington de Jongh from the Koninklijke Bibliotheek (KB) National Library of the Netherlands opened the conference. The busy two days were then divided into a series of blocks covering different aspects of the project. Day One kicked off with a look at the operational context.

Block 1: Operational Context

Strategic Digital Overview

Richard Boulderstone, Director, e-Strategy and Information Systems, British Library (BL), provided us with an overview of BL's digitisation work to date. The Library had embarked on a huge digitisation process and now had 5 billion physical pages and 57 million objects online, although they only represented 1% of its collection. This however, figuratively speaking, was but a drop in the European ocean. The Conference of European National Libraries 2006 Survey estimated that the national libraries of Europe were holding over 13 billion pages for digitisation and the number was rapidly growing. One starts to get a sense of the magnitude of the demand.

The British Library was involved in a project with brightsolid to digitise BL’s newspaper collection [2], while it had also recently signed a deal with Google to digitise 250,000 more pages.

Richard Boulderstone, British Library, provides an overview of BL’s digitisation work to date.

Richard continued his theme of numbers and moved on to the economics. Early digitisation projects often involved rare materials and were very costly; mass digitisation was much cheaper, but still remained costly. Richard noted the need to generate revenue and gave some examples of how BL was already doing this through chargeable content. This approach had not always been popular, he admitted, but was necessary. When it came to measuring value, one easy approach was to compare print and digital. There were lots of benefits to digital material: it was more durable (potentially), often had a better look and feel, could offer effective simulations, and made searching easier and distribution faster. It also made data mining, social networking and revenue all possible. It was quite clear that digital content was a winner.

During his talk Richard praised the IMPACT Project, describing it as unique in the way it had set the benchmark in addressing a common set of problems across Europe, and seeking to resolve them through wide collaboration and the piloting of systems which would benefit libraries and the citizens of Europe for many years to come. He noted that OCR worked very well for modern collections and that users expected and received high rates of accuracy, although he accepted there was still some way to go for older material. The IMPACT Project had helped make up some ground.

IMPACT's Achievements So Far

Hildelies Balk-Pennington de Jongh, KB, began her presentation by mentioning many of the OCR problems the project had faced: ink shine-through, ink smearing, no annotations, fonts, language, etc. When IMPACT started in 2008, it originally had 26 partners, was co-ordinated by the KB and co-funded by the EU. Over the last four years it had taken many steps to improve significantly the mass digitisation of text across Europe.

IMPACT Project Director Hildelies Balk-Pennington de Jongh, Koninklijke Bibliotheek (KB).

Hildelies explained that the approach from the start had been for everyone (content holders, researchers and industry) to work together to find solutions based on real-life problems. The main IMPACT achievements so far had been the production of a series of open-source tools and plug-ins for OCR correction, the development of a digitisation framework, a comprehensive knowledge bank, and computer lexica for nine different languages. Not only this, but the project has established a unique network of institutions, researchers and practitioners in the digitisation arena.

Technical Talks

A series of technical talks followed. Apostolos Antonacopoulos from the University of Salford looked at the effect of scanning parameters (such as colour vs bi-tonal vs dithered vs 8-bit greyscale settings, and different resolutions) on OCR results. Apostolos concluded that using bi-tonal often gave poor results unless particular methods were used. He recommended the study Going Grey? [3] by Tracy Powell and Gordon Paynter, which poses a number of questions that may need to be considered when scanning.

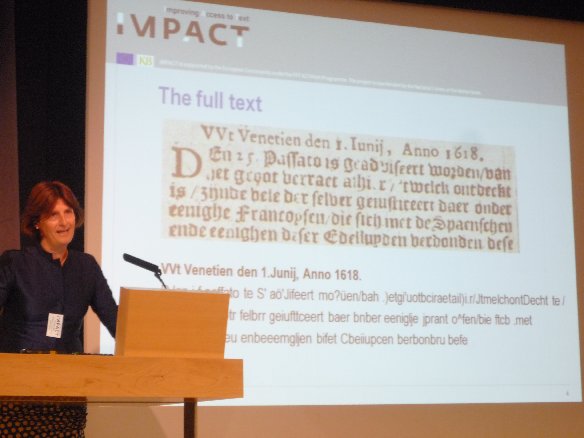

Claus Gravenhorst of Content Conversion Specialists GmbH (CCS) presented a case study by the KB and CCS which considered whether the new ABBYY FineReader Engine 10 [4] and Dutch Lexicon increase OCR accuracy and production efficiency. Claus explained that various pre- and post-processing steps could have an effect on accuracy, as could image quality. He described the test material they had chosen: 17th-century Dutch newspapers where a typical page would have two colours and gothic fonts. The test system used was docWorks, which was developed during the EU FP5 project METAe [5] (in which ABBYY was involved). The workflow covered item tracking from the shelf, through scanning and back to the shelf, including QA, etc. This system was used to integrate IMPACT tools. There was very little pre-processing as the focus was the OCR. Zones were classified and then passed to the OCR engine. Finally, an analysis was carried out to understand the structure of the page. The goal was to generate statistical data for character and word accuracy over four test runs. There was a 20.6% improvement in word accuracy when using IMPACT tools.
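Character and word accuracy of the kind reported in this case study are commonly computed by comparing OCR output with a reference transcription. The Python sketch below is only an illustration of that general idea, not the method used by the KB and CCS: it assumes accuracy is defined as one minus the edit distance divided by the length of the reference, and the function names and the sample strings are invented for the example.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (strings or word lists)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def accuracy(ref, hyp):
    """Assumed definition: 1 - (edit distance / reference length), floored at 0."""
    if len(ref) == 0:
        raise ValueError("reference must not be empty")
    return max(0.0, 1.0 - edit_distance(ref, hyp) / len(ref))


def char_accuracy(reference_text, ocr_text):
    return accuracy(reference_text, ocr_text)


def word_accuracy(reference_text, ocr_text):
    return accuracy(reference_text.split(), ocr_text.split())


# Hypothetical sample: the OCR engine misreads 'w' as 'vv'.
reference = "de weerld is groot"
ocr_output = "de vveerld is groot"
char_acc = char_accuracy(reference, ocr_output)
word_acc = word_accuracy(reference, ocr_output)
```

A single misread character costs far more at word level than at character level, which is one reason the two figures are usually reported separately.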

Block 2: Framework and Evaluation

The second block looked at the IMPACT framework that has been developed.

OCR Experiences

Paul Fogel, California Digital Library, University of California, presented digitisation experiences and challenges faced by CDL when dealing with OCR document text extraction. Paul emphasised the difficulties and obstacles posed by poor OCR during the mass indexing and digitisation processes of cultural records: they included marginalia, image-text misinterpretations and fonts, as well as limited resources, the wide range of languages and low number of dictionaries. Paul explained that US libraries had been very opportunistic in their digitisation, especially in their use of Google. He introduced us to Solr [6], an open-source search engine technology used for distributed search. It was worth noting that Google had recently moved from ABBYY 9 to Tesseract [7], Google’s own in-house open-source OCR engine.

Technical Talks

Clemens Neudecker from the KB presented the IMPACT Interoperability Framework which brings together project tools and data. He introduced the workflows in the project and the various building blocks used, including Taverna [8], an open-source workflow management system. Although progress had been good, Clemens pointed out, there was still a long way to go.

Stefan Pletschacher, University of Salford, began by presenting an overview of the digitisation workflow and the issues at each stage. These stages are usually given as scanning (whatever goes wrong at this stage is hard to recover later), image enhancement, layout analysis, OCR and post-processing. He explained that it was sensible to evaluate at each step but also to consider the workflow as a whole. To carry out performance evaluation, it is necessary to begin with some images that are representative of the images to be processed. One then runs OCR on them to obtain results to evaluate.

There then followed an explanation of the concept of ‘ground truth’. Interestingly enough a conversation on the nature of ground truth had already done the rounds on Twitter. Some of the definitions discussed are given below:

- Ground truth is manually created text by double re-keying and segmentation correction

- Ground truth is the ‘transcription’ of a document or a text and more

- Ground truth is the data against which you evaluate your results, for OCR it is transcribed text with 99.9+% accuracy.

Stefan explained that it was not just the final text but would also include other aspects, such as images to map to, de-skewing, de-warping, border removal and binarisation. The IMPACT ground truths have been produced using Aletheia, now a fairly mature tool, which allows creation of information on page borders, print space, layout regions, text lines, words, glyphs, Unicode text, reading order, layers etc. Stefan concluded his talk with an overview of the datasets available: 667,120 images approximately, consisting of institutional datasets from 10 libraries (602,313 images) and demonstrator sets (56,141 images).

Block 3: Tools for Improved Text Recognition

ABBYY FineReader

Michael Fuchs, Senior Product Marketing Manager at ABBYY Europe, began his presentation with an overview of ABBYY and ABBYY products. ABBYY is an international company with its headquarters in Moscow and 14 subsidiaries around the world. Michael claimed ABBYY had made a 40% improvement in OCR output since 2007 thanks to software improvements and the improved quality of images used. Michael walked the audience through the range of improvements, including image binarisation, document layout analysis and text/character recognition. He highlighted the need for binary images as they tend to produce the best results. Recently ABBYY has also extended its character and text recognition system to include Eastern European languages, such as Old Slavonic. Michael concluded that ABBYY’s reduced costs for historic fonts and its flexible rates have contributed to IMPACT’s efforts to make digitisation cheaper and OCR technology more accessible to both individuals and partnerships across Europe and the world.

Technical Talks

Asaf Tzadok of IBM Haifa Research Lab introduced the IBM Adaptive OCR Engine and CONCERT Cooperative Correction. During his talk, Asaf introduced the concept of collaborative correction: getting users to correct OCR errors. He showed a number of crowdsourcing tools including Trove and DigitalKoot [9], and looked at where improvements could still be made. The CONCERT tool was completely adaptive and had a lot of productivity tools, separating the data entry process into several complementary tasks. Asaf concluded that collaborative correction could be consistent and reliable and required no prior knowledge of digitisation or OCR. Lotte Wilms from the KB joined Asaf on stage and gave the library perspective: three libraries were involved and user tests were carried out by all the libraries with full support from IBM.

Majlis Bremer-Laamanen from the Centre for Preservation and Digitisation at the National Library of Finland then talked about crowdsourcing in the DigitalKoot Project. The Library now had 1.3 million pages digitised and its aim was to share resources and make them available for research. At this point there was a definite need for OCR correction. The DigitalKoot project name came from the combination of the Finnish terms ‘digi’ = to digitise, ‘talkoot’ = people gathering together. It was a joint project run by the National Library of Finland and Microtask. Users could play mole hunt games to carry out OCR correction. The project had been hugely successful and had received a lot of media coverage. Between 8 February and 31 March 2011 there were 31,816 visitors, of whom 4,768 were players, who engaged in 2,740 hours of game time. Results had been positive: for example, in one check, out of 1,467 words there were 14 mistakes and 228 correct corrections.

Ulrich Reffle, University of Munich, finished off the day by talking about post-correction (the batch correction of document-specific language and OCR error profiles) in the IMPACT Project. Ulrich explained that many languages contain elements of foreign languages, for example Latin or French usages in the English language, which occasions greater processing complexity. He added that OCR problems were as diverse as historical language variations and, unfortunately, there was no ‘ring to rule them all.’

OCR and the Transformation of the Humanities

The opening keynote for Day Two was provided by Gregory Crane, Professor and Chair, Department of Classics, Tufts University. Gregory began by giving three basic changes in the humanities:

- transformation of the scale of questions (breadth and depth);

- student researchers and citizen scholars; and

- globalisation of cultural heritage (there are more than 20 official languages).

Crane then moved on to describing dynamic variorum editions as one of OCR’s greatest challenges. Crane stressed that even with all possible crowd-sourcing, we still need to process data as automatically as possible. He gave the new Variorum Shakespeare series (140 years old) as a good example of this - the winning system is the most useful, not the smartest.

A classicist by origin, Crane focused on the Greco-Roman world and illustrated the problems ancient languages such as Latin and Ancient Greek pose to OCR technology. OCR helped Tufts detect how many of the 25,000 Latin books selected were actually in Latin. Unsurprisingly, OCR analysis revealed that many of these works were actually Greek. It seemed OCR often tells us more than metadata can. Crane saw the key to dealing with a cultural heritage language as residing in multiple open-source OCR engines in order to produce better results.

Block 4: Language Tools and Resources

The morning focused on language tools and resources. Katrien Depuydt from the Institute for Dutch Lexicology began with an overview of the language work being carried out. She explained that she would stand by her use of ‘weerld’ – ‘world’ as an example since it continued to be useful - there were so many instances of the word ‘world’. Katrien touched on the IMPACT Project’s work in building lexica (checked lists of words in a language) within a corpus (collection). She explained that it is important to have lexica set against a historical period since spelling variation problems were often time period-, language-, and content-specific. Each of the nine IMPACT Project languages had different departure points and special cases unique to them.

Jesse de Does, INL, went on to look at the evaluation of lexicon-supported OCR and Information Retrieval. He considered the project’s use of ABBYY FineReader, which provides a set of recognition options that the user prunes to those thought possible. During the project, the team had evaluated FineReader SDK 10 with the default dictionary. They thought that using the Oxford English Dictionary would be easy. It turned out that there were not very large spelling variations and that there were already measures in place for sorting this out. By limiting themselves to 17th-century texts, they had made significant progress.

Steven Krauwer, CLARIN co-ordinator, University of Utrecht, then introduced the CLARIN [10] Project and explained how its work tied in with IMPACT. The CLARIN Project is a large-scale pan-European collaborative effort to create, co-ordinate and make language resources and technology available and readily usable. CLARIN offers scholars the tools to allow computer-aided language processing, addressing one or more of the multiple roles language plays (i.e. carrier of cultural content and knowledge, instrument of communication, component of identity and object of study) in the Humanities and Social Sciences. CLARIN allows for virtual collections, serves expert and non-expert users, and covers both linguistic data and its content. It is still in its preparatory phase; the main construction phase will run over 2011-2013.

Block 5: IMPACT Centre of Competence

The last block of the day before the parallel sessions was the official launch of the IMPACT Centre of Competence. Khalil Rouhana, Director for Digital Content and Cognitive Systems in DG Information Society and Media at the European Commission, gave an overview of the EC Digital Agenda.

Khalil explained that the IMPACT Project has been one of the biggest projects in terms of size. Initially there were doubts that large-scale projects were the right way of supporting innovation and the FP7 team was involved in a lot of discussion on the approach to take. However, IMPACT has been a good example of why large-scale integrating projects are still valuable for the ICT agenda of the EU. Khalil talked about the digital agenda for Europe, which includes creating a sustainable financing model for Europeana and creating a legal framework for orphan and out-of-commerce works. Three important aspects were highlighted:

- that ‘access’ is the central concept

- digitisation should be a moral obligation to maintain our cultural resources

- common platforms are central to the strategy.

The bottlenecks were funding problems and copyright.

Hildelies Balk-Pennington de Jongh, KB, and Aly Conteh, BL, then introduced the IMPACT Centre of Competence [11] which builds on IMPACT’s strength in the development of a very strong collaboration between communities. It also provides access to the knowledge bank of guidelines and materials for institutions working on OCR and digitisation projects all over the world. Other valuable services offered by the Centre include a ground truth dataset, as well as a helpdesk, specifically designed and created for institutions to ask questions to experts who can, in turn, give some insight into how to address issues raised. The question of sustainability was also discussed. The sustainability model currently works around four key points: the Web site, membership packages, partner contributions and a Centre office established to provide assistance. The Centre also has further support from the Bibliothèque nationale de France (BnF) and the Fundación Biblioteca Virtual Miguel De Cervantes, which host the Centre, while the BnF and the Biblioteca Virtual will also provide a wide range of partner support, sponsorship and networks, technology transfer and dissemination, as well as the preparation and dissemination of all open-source software.

Through the round window: British Library courtyard

Parallel Sessions

Language

The language parallel session consisted of a series of presentations and demonstrations of the IMPACT language tools and was hosted by Katrien Depuydt (INL).

The first session was on Named Entity Work and was presented by Frank Landsbergen. Frank began by defining named entities (NE). An NE is a word or string referring to a proper location, person or organisation (or date, time, etc). Within IMPACT, the term is limited to location, person or organisation. The extra focus on these words is primarily because they may be of particular interest to end-users and because they are not usually in dictionaries, so adding them brings a greater improvement in the lexicon and ultimately the OCR. Note that a lexicon is a list of related entities in the database that are linked, e.g. ‘Amsterdams’, ‘Amsteldam’, ‘Amsteldamme’ = ‘Amsterdam’. He then spent the majority of his talk walking us through the 4-step process of building an NE lexicon:

- data collection;

- NE tagging: possibilities include NE extraction software, use of the Stanford University module, statistical NE recognition (the software ‘trains’ itself) or manual tagging; many of these tools currently work best with contemporary data;

- enrichment (POS tagging, lemmatising, adding the person name structure, variants);

- database creation.

So far the majority of IMPACT’s NE work has been on creating a toolkit for lexicon building (NERT, Attestation tool) and creating NE-lexica for Dutch, English and German.
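The idea of a lexicon that links historical variants to a single modern form can be illustrated with a few lines of Python. This is only a sketch of the general principle, not the IMPACT toolkit: the data structure, the function names and the sample sentence are assumptions made for the example, while the Amsterdam variants are the ones quoted in the talk.

```python
def build_variant_index(lexicon):
    """Map every known spelling (lower-cased) to its modern form.

    `lexicon` is a hypothetical structure: modern form -> list of variants.
    """
    index = {}
    for modern_form, variants in lexicon.items():
        for form in [modern_form, *variants]:
            index[form.lower()] = modern_form
    return index


def normalise(tokens, index):
    """Replace known historical variants; leave unknown tokens untouched."""
    return [index.get(token.lower(), token) for token in tokens]


# Variants taken from the example given in the talk.
ne_lexicon = {"Amsterdam": ["Amsterdams", "Amsteldam", "Amsteldamme"]}
index = build_variant_index(ne_lexicon)

# Made-up input tokens, for illustration only.
normalised = normalise(["Schepen", "uit", "Amsteldam"], index)
```

A lookup of this kind is what allows a search for the modern name to retrieve pages where only a historical spelling appears.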

Annette Gotscharek talked about the 16th-century German printed book collection, a special case since the resources were so old, and therefore very challenging. There were a number of special features of the period language at a word level. The historical variants were all added to the modern lemma. The diachronic ground truth corpus consisted of text files of what appeared on the scans. It was collected from different resources on the Web and non-public electronic corpora. They worked on areas including the creation of a hypothetical lexicon and manually verifying IR-lexica.

Janusz S. Bień talked about Polish Language Resources in IMPACT. Janusz and his team faced a number of problems when working with Polish text. The earlier dictionaries were rejected because although they held the relevant information, it was too difficult to extract. They also struggled to use other later dictionaries because of copyright issues. In the end they did manage to use a selection of dictionaries and texts including the Benedykt Chmielowski encyclopedia, which is famous for its memorable ‘definitions’: ‘Horse is as everyone can see.’

Tomaž Erjavec finished the session with a look at the Slovene Language Resources in IMPACT. Tomaž previously worked on the AHLib Project looking at transcription correction and markup. At the same time as their work on the IMPACT Project they also won a Google award, so they have been able to develop language models for historical Slovene. Their methodology has been to develop three resources: transcribed texts, a hand-annotated corpus and a lexicon of historical words. They have also developed the annotation tool, ToTrTaLe, which aids in tagging and lemmatising historical Slovene. The main issues have been tokenisation (words were split differently in earlier forms of Slovene), variability and extinct words. Over the course of the project they have transcribed over 10 million words, consisting of the AHLib corpus/DL, NUK GTD, Google Books and Wikisource, all of which are freely available.

Digitisation

Aly Conteh from the British Library hosted the parallel Q&A session on digitisation tips. Panel members included Astrid Verheusen, Manager of the Digital Library Programme and Head of the Innovative Projects Department, KB National Library of the Netherlands; Geneviève Cron, OCR expert, BnF; Christa Müller, Director Digital Services Department, Austrian National Library; Majlis Bremer-Laamanen, Director of the Centre for Preservation and Digitisation, National Library of Finland; and Alena Kavčič-Colic, Head of the Research and Development Unit, National and University Library of Slovenia.

The questions included: How do digital libraries exist without METS/ALTO, and how do you support retrospective production of OCR? Many libraries digitising newspaper collections clean the head titles of their documents; will this still happen in the future? Why insist on cleaning head titles rather than invest in digitising more pages? How do you measure capture quality when you lack ground truth? What are library priorities for the next 10 years?

Research

The research parallel revolved around state-of-the-art research tools for document analysis developed via the IMPACT Project.

Basilis Gatos looked at IMPACT tools developed by the National Centre of Scientific Research (DEMOKRITOS) in Athens. Involved with IMPACT since 2008, the Centre's team has collaborated in the production of nine software tools to support binarisation, border removal, page split, page curl correction, OCR result, character segment and word spotting.

Stefan Pletschacher, University of Salford, considered OCR for typewritten documents, which pose a unique challenge to OCR. Typical typewritten documents in archives are actually carbon copies with blurred type and a textured background. Moreover, as they are often administrative documents, they are rife with names, abbreviations and numbers, all of which render typical lexicon-based recognition approaches less useful. A system has been developed in IMPACT to tackle these particular difficulties by incorporating background knowledge of typewritten documents, and through improved segmentation and enhancement of glyph images, while ‘performing language-independent character recognition using specifically trained classifiers.’

Apostolos Antonacopoulos, University of Salford, looked at image enhancement, segmentation and experimental OCR, presenting the work of PRImA (Pattern Recognition & Image Analysis) research at the University of Salford. Apostolos demonstrated their approach to the digitisation workflow and the tools developed for image enhancement (border removal, page curl removal, correction of arbitrary warping) as well as segmentation (recognition-based and stand-alone).

Richard Boulderstone recommended a look at the newly refurbished St Pancras Railway Station.

Conclusions

The IMPACT final conference offered an excellent bird’s eye view of the achievements that have been made through the lifecycle of the project. These achievements range from the highly technical (through development of the project tools) to the more overarching variety (through awareness-raising and capacity-building activity in the area of OCR). Some of the presentations were slightly esoteric and very much designed for the specialist. However, there was still enough to keep the non-specialist engaged. It is clear that there is still much work to be done in the area of OCR scanning of historical texts, but the IMPACT Project has packed an impressive travel bag for the road ahead.

The writing of this trip report has been aided by session summaries from the IMPACT Wordpress blog [12], and my thanks go out to the team of reporters. Presentations from the day are available from the IMPACT blog and from the IMPACT Slideshare account [13]. You can follow the IMPACT Project and the Centre of Competence on Twitter [14].

References

- The IMPACT Project Web site http://www.impact-project.eu

- "British Library and brightsolid partnership to digitise up to 40 million pages of historic newspapers", brightsolid news release, 18 May 2010 http://www.brightsolid.com/home/latest-news/recent-news/british-library-and-brightsolid-partnership-to-digitise.html

- Powell, T. and Paynter, G., "Going Grey? Comparing the OCR Accuracy Levels of Bitonal and Greyscale Images", D-Lib Magazine, March/April 2009 http://www.dlib.org/dlib/march09/powell/03powell.html

- ABBYY FineReader http://www.abbyy.com/ocr_sdk/

- MetaE http://meta-e.aib.uni-linz.ac.at/

- Solr http://lucene.apache.org/solr/

- Tesseract http://code.google.com/p/tesseract-ocr/

- Taverna http://www.taverna.org.uk/

- DigitalKoot http://www.digitalkoot.fi/en/splash

- CLARIN http://www.clarin.eu/external/

- IMPACT Centre of Competence http://www.digitisation.eu/

- IMPACT blog http://impactocr.wordpress.com/

- IMPACT Slideshare account http://www.slideshare.net/impactproject

- IMPACT Twitter account http://twitter.com/#!/impactocr

Author Details

Marieke Guy

Research Officer

UKOLN

University of Bath

Email: m.guy@ukoln.ac.uk

Web site: http://www.ukoln.ac.uk/

Marieke Guy is a research officer at UKOLN. She has worked in an outreach role in the IMPACT Project creating Open Educational Resources (OER) on digitisation which are likely to be released later in 2012. She has recently taken on a new role as an Institutional Support Officer for the Digital Curation Centre, working towards raising awareness and building capacity for institutional research data management.