DataFinder: A Research Data Catalogue for Oxford

Sally Rumsey and Neil Jefferies explain the context and the decisions guiding the development of DataFinder, a data catalogue for the University of Oxford.

In 2012 the University of Oxford Research Committee endorsed a university ‘Policy on the management of research data and records’ [1]. Much of the infrastructure to support this policy is being developed under the Jisc-funded Damaro Project [2]. The nascent services that underpin the University’s RDM (research data management) infrastructure have been divided into four themes:

- RDM planning;

- managing live data;

- discovery and location; and

- access, reuse and curation.

The data outputs catalogue falls into the third theme and will provide metadata and interfaces that support discovery, location, citation and business reporting for Oxford research datasets. Because a single tool will list the University's research data outputs comprehensively and allow them to be found, cited and reported on, the University anticipates that this catalogue, named DataFinder, will become the hub of its RDM infrastructure tools and services. The general view is that it will provide the glue between services, both technical and non-technical, and policies across the four themes listed above.

To this end, DataFinder will list Oxford research data outputs using metadata that supports discovery and standard citation, and will give administrators tools to report both internally and externally on the University's research data outputs. The catalogue has been designed to meet these user requirements while complying with common standards. Inevitably, some of the decisions taken during design and implementation involved compromises intended to make the service useful to, and usable by, researchers, administrators and other users.

How comprehensive DataFinder becomes depends on two main factors: the willingness of researchers to interact with the service, and the ability to gather and reuse existing metadata automatically. Its uptake will depend on visible and demonstrable efficiency savings and impact, and on the intelligence it provides to individuals, research groups and administrative units.

DataFinder’s Role in the Oxford Data Chain

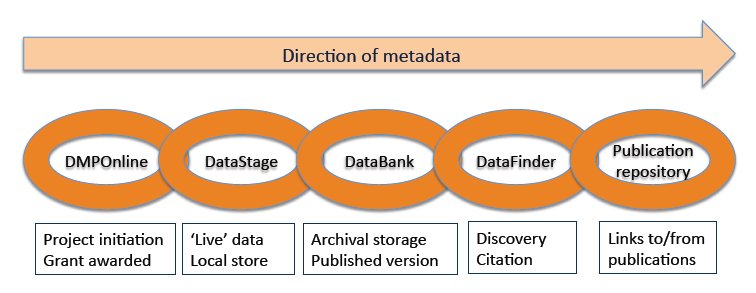

The applications that will be available to Oxford researchers in the short to medium term to support RDM can be thought of as a data chain: a series of services, each linked to its neighbours, that are relevant at different stages of the research data lifecycle (see Figure 1).

Figure 1: Data chain

At the outset of the research process there will be Oxford DMPOnline [3], in which the content of a data management plan is captured, both to provide the information required by funding agencies and to supply vital intelligence for internal use in planning and research administration. The next stage is data creation and manipulation, usually carried out at local (departmental or individual) level. Data at this stage are often described as ‘live’, i.e. being actively worked on, or still in a raw state prior to manipulation. In addition to local systems, two new applications are being created at Oxford that will be available centrally for researchers to use: DataStage [4] and ORDS (Online Research Database Service) [5].

Once a version of the dataset is stable and ready for archiving and possibly publication, it will be deposited for longer-term archiving and storage. Researchers might choose (or be required) to use the archival services of a national service such as the UKDA [6]; they might deposit in a trusted local departmental system; or they might use the University's institutional data archive, DataBank [7], which is being developed by the Bodleian Libraries. Researchers are not obliged to choose DataBank. It is at this stage of the data creation process that the University would ideally like to ensure that a record of the data is created, wherever the dataset itself is stored or published. As well as giving the University a record of the data created by Oxford researchers, DataFinder can help Oxford and external researchers find potential research collaborators, and could even help inspire new research.

Therefore DataFinder:

- enables researchers to comply with their funders' policies by publishing the required metadata, displaying details of the funding sponsor, providing either a direct link to the data or its location (which may be a URL, a physical location or details of how to request access), and offering a means of reporting outputs to funders.

- supports data citation and links to related publications. Links to related publications could resolve to the publication record held in ORA (Oxford University Research Archive) which is being developed to be the University’s comprehensive online research publications service. Conversely, citations and links to data catalogue records in DataBank can be included in ORA publication records.

- enables the University to better manage its research data and, more generally, its other research outputs.

Metadata

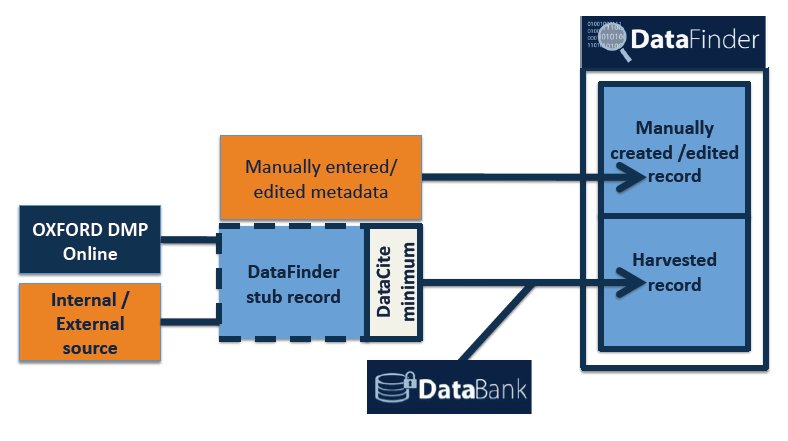

The aim, wherever possible, is to create a metadata chain reaction in which metadata describing a dataset is passed along the data management services, whether internal or external, eventually providing the information required to describe, discover and cite the dataset in DataFinder. The descriptive metadata may start out minimal (DMPOnline holds little dataset-specific metadata because, at the project proposal stage, the data have not yet been created), but it can be expanded and improved as the research proceeds. Metadata captured in Oxford DMPOnline can form a stub record in DataFinder, which might later be enhanced with additional metadata from DataStage and/or DataBank, or with metadata harvested from an external source. There are, however, several routes by which metadata can enter DataFinder, and some datasets will have records harvested only from external sources. See Figure 2.

Figure 2: Metadata input and enhancement

Metadata relevant to DataFinder for each service in the data chain are as follows:

- DMPOnline. Eight fields have been identified that can be directly mapped to DataBank and DataFinder: Principal investigator (and contributors); affiliation(s); data manager; funding agency and grant number; project start date; project keywords.

- DataStage. Requires the DataCite minimum of five elements before a dataset can be deposited in DataBank: title; creator; publisher; unique identifier; date.

- DataBank. Requires the same DataCite minimum, so that DOIs can be assigned to datasets.
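The metadata chain described above can be pictured as a merge in which each later service fills in or refines fields supplied by earlier ones, with a check against the five DataCite elements needed before a DOI can be assigned. The sketch below is illustrative only: the field names, the stage order, the rule that empty values never overwrite content, and all the sample values (including the placeholder identifier) are assumptions rather than the actual DataFinder schema.

```python
# Sketch of the "metadata chain": a stub record is enhanced by later services.
# Field names, stage order and values are illustrative assumptions only.

DATACITE_MINIMUM = ("title", "creator", "publisher", "identifier", "date")


def enhance(record, *stages):
    """Return a copy of record in which each later stage fills or refines fields.

    Empty values (None or "") supplied by a later stage never overwrite content.
    """
    merged = dict(record)
    for stage in stages:
        for field, value in stage.items():
            if value not in (None, ""):
                merged[field] = value
    return merged


def missing_datacite_fields(record):
    """List the DataCite minimum fields that are still absent or empty."""
    return [f for f in DATACITE_MINIMUM if not record.get(f)]


# Stub derived from a data management plan: people, funder and project only.
dmp_stub = {"creator": "Researcher, A.", "funder": "Example Research Council",
            "grant_number": "EX/0001", "keywords": "glaciology"}
# Hypothetical enhancements contributed later in the chain.
datastage = {"title": "Ice core measurements", "date": "2013", "creator": ""}
databank = {"publisher": "University of Oxford", "identifier": "10.5072/example"}

stub_gaps = missing_datacite_fields(dmp_stub)  # the stub cannot yet get a DOI
record = enhance(dmp_stub, datastage, databank)
record_gaps = missing_datacite_fields(record)
```

Here the stub lacks title, publisher, identifier and date, while the enhanced record satisfies the minimum; the empty `creator` from the later stage leaves the original value intact.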

A core minimum metadata set has been defined for DataFinder to enable basic citation, description and location (see Table 1). Contextual metadata can be added to comply with funder policies and for other datasets that require such information. Many Oxford research projects are not externally funded, so funder details are not relevant to all researchers; the context of the dataset therefore drives the metadata required. Depositors are encouraged to add further metadata, both so that others can assess the usefulness of a dataset and so that the data are described well enough to be understood and reused.

| No. | Field |
|---|---|
| 1 | Data creator name |
| 2 | Creator affiliation |
| 3 | Data owner |
| 4 | Output a product of funded research (Y/N) |
| 5 | Title |
| 6 | Description |
| 7 | Subject headings |
| 8 | Publication year |
| 9 | Publisher |
| 10 | Earliest access date (open access/embargo) |
| 11 | Digital format (Y/N) |
| 12 | Terms and conditions |
| 13 | ID and other automatically assigned metadata |

Table 1: Core minimum metadata for manual deposit

A distinction should be drawn between records that are created (and/or enhanced) manually in DataFinder and metadata that is harvested from other sources. It has been agreed that, to be displayed, a record must comply with the DataCite core minimum of five metadata fields. Harvested records that do not comply will be retained as stub records for later improvement by the data creators or other individuals. Users who create records manually using the online form, on the other hand, must complete a number of mandatory fields, which results in slightly richer metadata. For example, if a manual depositor indicates that the research is externally funded, they will be required to supply the funder and grant reference. The mandatory fields have been kept to a minimum so that creating a record in DataFinder remains easy, rather than becoming onerous and off-putting for researchers. It is therefore critical that suitable training, information and communications are provided so that researchers are aware of the benefits to themselves of providing rich metadata and comprehensive documentation for their datasets.
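The two validation paths just described can be sketched as two small checks: harvested records are either displayable or kept as stubs, while manual deposits are checked against mandatory fields, with funder details required only for externally funded research. Which Table 1 fields are enforced on the form is not stated in the text, so the `MANUAL_MANDATORY` tuple, the field names and the status labels below are all assumptions.

```python
DATACITE_MINIMUM = ("title", "creator", "publisher", "identifier", "date")
# Assumed subset of Table 1 enforced as mandatory on the manual form.
MANUAL_MANDATORY = ("creator", "affiliation", "title", "description",
                    "publication_year", "publisher", "terms")


def harvested_status(record):
    """Display a harvested record only if it meets the DataCite minimum;
    otherwise keep it as a stub for later improvement."""
    if all(record.get(f) for f in DATACITE_MINIMUM):
        return "displayable"
    return "stub"


def manual_form_errors(form):
    """Return the mandatory fields a manual depositor has left empty."""
    required = list(MANUAL_MANDATORY)
    if form.get("externally_funded"):
        # Funder details become mandatory only for externally funded research.
        required += ["funder", "grant_reference"]
    return [f for f in required if not form.get(f)]


# Hypothetical form submission.
form = {"creator": "Researcher, A.", "affiliation": "Department of Earth Sciences",
        "title": "Ice core measurements", "description": "Isotope ratios.",
        "publication_year": "2013", "publisher": "University of Oxford",
        "terms": "CC-BY", "externally_funded": True, "funder": "Wellcome Trust"}
errors = manual_form_errors(form)  # the grant reference is still missing
```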

Controlled vocabularies and auto-completion will be used in the manual data entry process wherever possible, both to ease data entry and to ensure consistency. Consistency with library and other systems will aid future data compatibility across the wide variety of digital systems used by different units of the University, and when interacting with external systems. Manual depositors will log on to DataFinder using their Oxford single sign-on credentials; this is linked to the Oxford core user directory (CUD), which will provide additional data such as internal affiliation. The Bodleian Libraries are working closely with the University's Research Services Office, which has provided a list of funders who sponsor Oxford research, including alternative funder names and abbreviations to allow accurate disambiguation. Selecting a subject classification scheme has been difficult, as there are too many conflicting interests for any one scheme to stand out as the obvious choice. FAST (Faceted Application of Subject Terminology) [8] has been selected initially because it is a recognised standard, because of its breadth and relative simplicity for non-expert users, and because it has a linked data version. The Libraries will keep a watching brief on other schemes, such as the RCUK (Research Councils UK) research classifications [9] and JACS (Joint Academic Coding System) [10], on the RIOXX initiative [11], and on mappings across schemes that facilitate reporting and policy compliance. DataFinder will also permit researchers to deposit XML files of the subject-specific terms and vocabularies that are common in disciplines such as maths, economics and the biosciences.
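A funder list that records alternative names and abbreviations can serve both auto-completion and disambiguation from a single index of normalised variants. The sketch below assumes a tiny illustrative list (it is not the Research Services Office list) and a simple normalisation rule of case-folding and whitespace collapsing.

```python
# Hypothetical funder authority list: canonical name -> known variants.
FUNDERS = {
    "Engineering and Physical Sciences Research Council": ["EPSRC"],
    "Wellcome Trust": ["The Wellcome Trust", "Wellcome"],
    "Arts and Humanities Research Council": ["AHRC"],
}


def _norm(text):
    """Case-fold and collapse whitespace so variants compare reliably."""
    return " ".join(text.casefold().split())


# Index every normalised canonical name and variant to its canonical form.
VARIANT_INDEX = {}
for canonical, variants in FUNDERS.items():
    for name in [canonical, *variants]:
        VARIANT_INDEX[_norm(name)] = canonical


def resolve_funder(entry):
    """Map a typed or harvested funder string to its canonical name, if known."""
    return VARIANT_INDEX.get(_norm(entry))


def autocomplete(prefix, limit=5):
    """Suggest canonical funder names whose name or any variant starts with prefix."""
    p = _norm(prefix)
    hits = {canon for name, canon in VARIANT_INDEX.items() if name.startswith(p)}
    return sorted(hits)[:limit]
```

Because suggestions are always canonical names, whatever a depositor types ("EPSRC", "the wellcome trust") ends up stored in one consistent form.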

Content

A series of policies is in preparation to govern the content and management of DataFinder and the use of the service. These policies are still in draft form but will be submitted to the Research Information Management Sub-Committee (of the University Research Committee) for approval in due course. DataFinder has to serve all researchers across the wide spectrum of disciplines at Oxford, so the types of dataset recorded in it are expected to vary. The policies will clarify which datasets are eligible for inclusion in DataFinder, as well as other areas such as retention and deletion.

The University does not require researchers to provide a record of their datasets in DataFinder. As with publications repositories, it will therefore be important for researchers to understand the benefits of, and any requirements for, ensuring that their datasets are listed. To support this, it will be crucial to provide clear and informative guidance explaining why and how researchers should record their datasets, in line with future University policies. Such guidance may also be relevant to research students. If obvious benefits to individuals can be demonstrated (such as saving time when reporting to funders, encouraging collaboration, or providing citations and a landing page), researchers may be more inclined to use DataFinder, both to record their own outputs and to discover the research outputs of others.

DataFinder Software

The DataFinder dataset catalogue was intended to be a simple component, with an emphasis on persistence and auditability, from which other services could be derived. To achieve this with the minimum of effort, we decided to use our DataBank data repository as the basis for the new service, essentially stripping out the ability to store datasets and augmenting the metadata capture and processing capabilities. Some of these improvements would in turn be rolled back into DataBank, since DataBank metadata would naturally feed into DataFinder. At the outset we evaluated a number of other options, such as CKAN, which provides a rich set of tools for interacting with published data. For our purposes, however, the solutions we evaluated all appeared to be the kind of service we would envisage implementing on top of DataBank or DataFinder, rather than ones providing the core storage and preservation functionality; in due course we expect to build such services.

In particular, the DataBank/DataFinder architecture has the following key characteristics which will become more important as the content volume grows:

- File system orientation: an object and its metadata are localised in a single directory on the filesystem and can thus be recovered from storage in the absence of any of the overlying software or services. The ‘burn-line’ for disaster recovery is thus set at the storage layer. The REST API that DataBank/DataFinder provides for accessing objects therefore needs to be only a slight modification of direct web file serving.

- Semantic focus: the internal structure of an object (whether files contain metadata or data, and their respective format information) and its relationships to other objects are expressed as RDF. Object directory paths are based on the object URIs used in the RDF. This both gives content a native ability to participate in the Semantic Web and improves recoverability, as these critical structures are relatively human-readable.

- Versioning: objects are versioned on disk, with unchanged components represented by symbolic links and updates logged by time and author. Both aspects are thus explicitly visible in the on-storage structure, while the linking approach keeps storage requirements under control.

- Collection management: objects are segregated into ‘silos’ which are assigned to a curator who is responsible for the content of the silo and can make ultimate decisions about the destiny of those objects. This reflects the reality of physical collection management where expertise in the nature of the content is distinct from the expertise in the stewardship of the content. In the case of DataFinder, silos will segregate records harvested from different sources as well as managing manually created records which will come under the aegis of the relevant organisational unit when an individual creator moves on.

- Aggregation: DataFinder is designed to provide the ‘landing page’ for searches and interactions with Oxford's data collections, wherever they are held. In practice, products such as CKAN [12] and services such as ColWiz [13] may provide layered functionality on top of the catalogue, and retrieval requests will redirect to a number of archives, of which DataBank will be just one. Wherever possible, however, DataFinder is intended to participate in these interactions, even if only fleetingly, so that they can be recorded.
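To make the file-system orientation and symlink-based versioning concrete, here is a minimal sketch of an object directory whose versions live in sibling subdirectories, with unchanged files linked to earlier versions and every update logged by time and author. The URI-to-path rule, the `v1`, `v2` directory names, the `log.txt` format and the file names are all assumptions for illustration; the real DataBank layout may differ. It needs a filesystem that supports symbolic links (POSIX, or Windows with the appropriate privilege).

```python
import os
import tempfile
from datetime import datetime, timezone
from urllib.parse import urlparse


def object_dir(root, uri):
    """Derive an object's directory from its URI (assumed rule: host, then path)."""
    parsed = urlparse(uri)
    return os.path.join(root, parsed.netloc, *parsed.path.strip("/").split("/"))


def new_version(obj_dir, changed_files, author):
    """Create version N+1 of an object.

    Changed files are written in full; unchanged files become relative symbolic
    links into an earlier version; the update is appended to a log with time and author.
    """
    os.makedirs(obj_dir, exist_ok=True)
    versions = sorted(int(d[1:]) for d in os.listdir(obj_dir)
                      if d.startswith("v") and d[1:].isdigit())
    prev = versions[-1] if versions else None
    cur = (prev or 0) + 1
    cur_dir = os.path.join(obj_dir, f"v{cur}")
    os.makedirs(cur_dir)
    if prev is not None:
        prev_dir = os.path.join(obj_dir, f"v{prev}")
        for name in os.listdir(prev_dir):
            if name in changed_files:
                continue
            entry = os.path.join(prev_dir, name)
            # Version directories are siblings, so "../vK/name" is valid from any
            # of them; reusing an existing link target keeps chains one hop long.
            if os.path.islink(entry):
                target = os.readlink(entry)
            else:
                target = os.path.join("..", f"v{prev}", name)
            os.symlink(target, os.path.join(cur_dir, name))
    for name, content in changed_files.items():
        with open(os.path.join(cur_dir, name), "w", encoding="utf-8") as fh:
            fh.write(content)
    stamp = datetime.now(timezone.utc).isoformat()
    with open(os.path.join(obj_dir, "log.txt"), "a", encoding="utf-8") as log:
        log.write(f"{stamp}\tv{cur}\t{author}\n")
    return cur_dir


# Example run in a throwaway directory (URI and file names are hypothetical).
root = tempfile.mkdtemp()
obj = object_dir(root, "http://example.org/datafinder/abc123")
v1 = new_version(obj, {"manifest.rdf": "m1", "datacite.xml": "d1"}, "alice")
v2 = new_version(obj, {"datacite.xml": "d2"}, "bob")
v3 = new_version(obj, {"datacite.xml": "d3"}, "carol")
```

Relative links keep the whole tree valid if it is copied elsewhere. That matches the aim of recovering objects from storage alone, without the overlying software.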

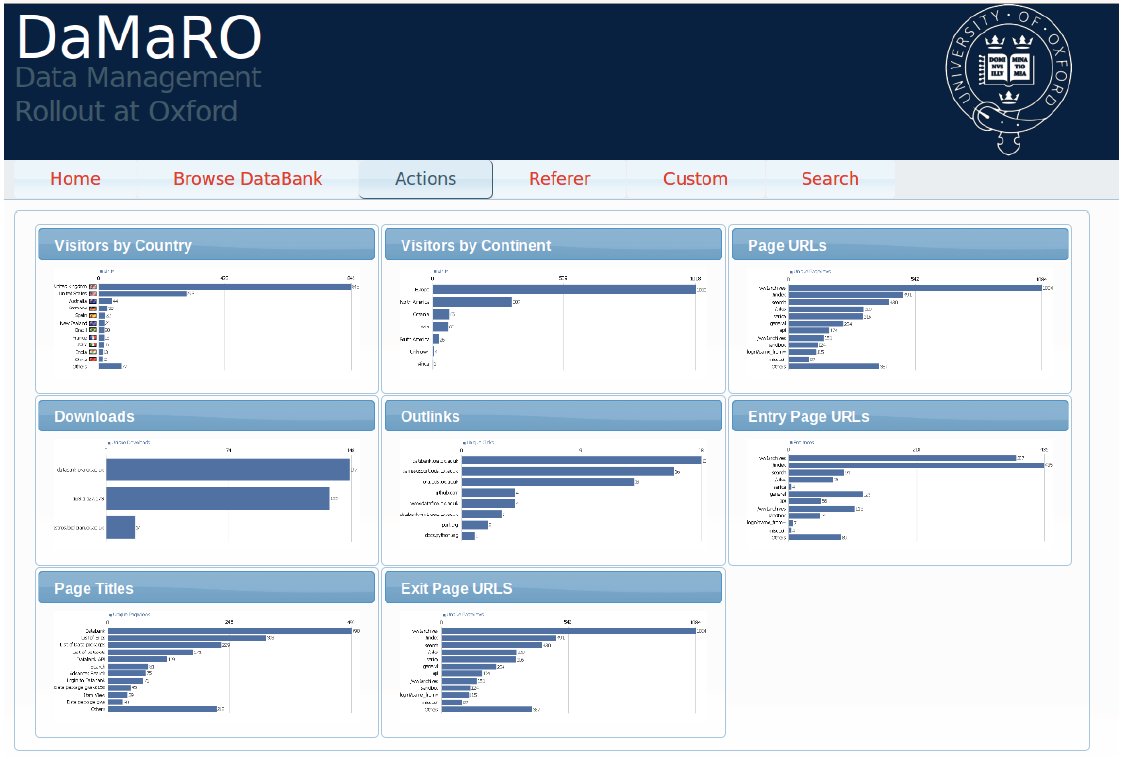

DataReporter

DataReporter is designed as an adjunct to DataFinder/DataBank and is used to generate reports and statistics in various formats (see Figure 3). It was built as a separate component because it targets a different audience and may well be operated and maintained by the parts of the organisation concerned with research information rather than research data. DataReporter can use both access/usage statistics and content statistics to give a complete view of activity in the area. At this stage, we have identified demand for several key types of report:

- Volume and rate of research data generation – for capacity planning and budgeting

- Usage statistics to comply with funder mandates for retention/preservation

- Validation of timely deposition to comply with funder mandates for deposit

- Data publication records for assessment activities such as REF
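As an illustration of the first report type, volume and rate of data generation can be derived by grouping catalogue records by deposit month. This is a sketch under assumptions: the record structure (a `deposited` date and an optional `size_bytes`) and the sample records are hypothetical, and the average is taken over the months that appear in the report rather than over a full calendar span.

```python
from collections import defaultdict
from datetime import date


def generation_report(records):
    """Summarise deposits per calendar month as (month, record count, total bytes).

    Each record is assumed to carry a 'deposited' date and an optional
    'size_bytes'; records describing physical artefacts may have no size.
    """
    counts = defaultdict(int)
    volume = defaultdict(int)
    for rec in records:
        month = rec["deposited"].strftime("%Y-%m")
        counts[month] += 1
        volume[month] += rec.get("size_bytes") or 0
    return [(month, counts[month], volume[month]) for month in sorted(counts)]


def mean_bytes_per_month(report):
    """Average bytes added per month, over the months present in the report."""
    if not report:
        return 0.0
    return sum(total for _, _, total in report) / len(report)


# Hypothetical catalogue extract.
records = [
    {"deposited": date(2013, 5, 2), "size_bytes": 1_000},
    {"deposited": date(2013, 5, 20), "size_bytes": 3_000},
    {"deposited": date(2013, 6, 1)},  # e.g. a record for a physical artefact
]
report = generation_report(records)
rate = mean_bytes_per_month(report)
```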

Figure 3: DataReporter prototype

Look and Feel

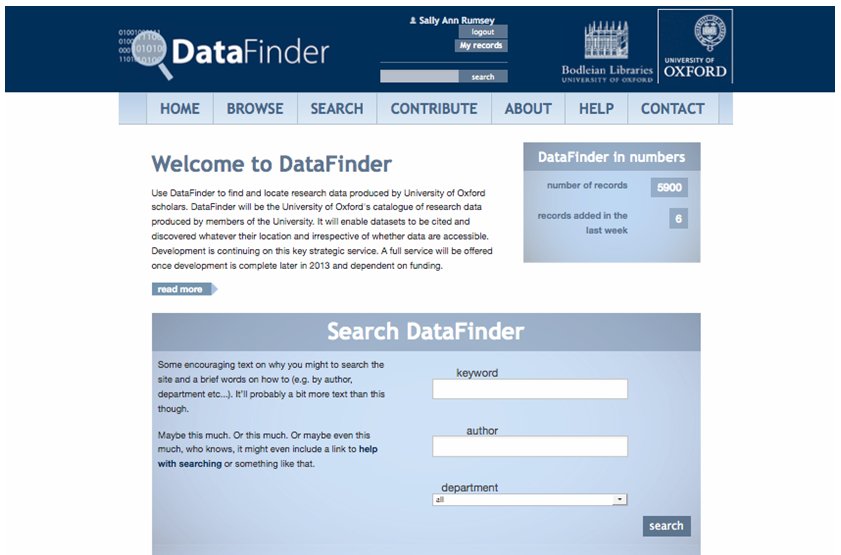

The design of the DataFinder user interface began with the construction of user journey diagrams that show how users navigate to the pages they require, following a logical sequence. The individual pages featured in the user journeys were then translated into wireframes incorporating draft functions and information deemed crucial for each page. Once these pages had been created, the first iteration was developed (see Figure 4).

Figure 4: DataFinder demonstrator interface

Solr [14] provides indexing and faceted searching, and Piwik [15] provides a useful means of displaying usage information. Both are already widely used within BDLSS (Bodleian Digital Library Systems and Services) systems, so it made sense to employ them. Most DataFinder pages are freely available; however, some, such as the contribute form and reporting information, are password protected (using Oxford single sign-on).
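Faceted searching in Solr is driven by request parameters on the `/select` handler. The sketch below only builds the query URL and does not send it. It uses the standard `q`, `rows`, `wt`, `facet`, `facet.field` and `fq` parameters, while the core URL (`http://localhost:8983/solr/datafinder`) and the field names `subject` and `funder` are hypothetical.

```python
from urllib.parse import parse_qs, urlencode, urlparse


def facet_query_url(core_url, text, facet_fields, filters=(), rows=10):
    """Build a Solr /select URL for a faceted search.

    q, rows, wt, facet, facet.field and fq are standard Solr request parameters;
    the core URL and field names used below are hypothetical.
    """
    params = [("q", text or "*:*"), ("rows", rows), ("wt", "json"), ("facet", "true")]
    params += [("facet.field", name) for name in facet_fields]
    # Each facet value the user has selected becomes a filter query on its field.
    params += [("fq", f'{field}:"{value}"') for field, value in filters]
    return f"{core_url}/select?{urlencode(params)}"


url = facet_query_url("http://localhost:8983/solr/datafinder", "ice core",
                      ["subject", "funder"], [("funder", "Wellcome Trust")])
params = parse_qs(urlparse(url).query)
```

Filter queries (`fq`) narrow the result set without affecting relevance scoring, which suits facet drill-down.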

The aesthetic design was influenced by the design of ORA. BDLSS is gradually developing a core look and feel that will be implemented across many services as they are built and rolled out.

Data Model

The data model for DataFinder and DataBank is necessarily generic and flexible, as the definition of ‘data’ within the remit of the systems is very broad; in the case of DataFinder, for example, references to physical artefacts are valid records. At the same time, however, the systems should be capable of capturing and using all the metadata that a researcher may want to make available, in order to increase utility and hence the potential for reuse and citation.

At the highest level, the object model is based on the Fedora Commons [16] idea that an object is simply a set of ‘datastreams’ which, in practice, are frequently represented as files. These can contain metadata or data, as detailed in a manifest that describes the structure of the object and is itself part of the object; in the DataBank model, the manifest is expressed in RDF.
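A manifest of this kind can be illustrated as a handful of RDF triples stating which datastreams make up an object and what each contains. The sketch below writes N-Triples using plain string formatting. It uses the Dublin Core terms `hasPart` and `format` and `rdf:type`, but the `http://example.org/ns#` role classes, the object URI and the file names are hypothetical, and this is not the actual DataBank manifest vocabulary.

```python
DCT = "http://purl.org/dc/terms/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
EX = "http://example.org/ns#"  # hypothetical vocabulary for datastream roles


def manifest_ntriples(object_uri, datastreams):
    """Serialise a manifest as N-Triples.

    datastreams is a list of (filename, role, media_type), role being "metadata"
    or "data". Filenames are assumed to be IRI-safe (no spaces or quotes).
    """
    lines = []
    for filename, role, media_type in datastreams:
        ds = f"{object_uri}/{filename}"
        cls = "MetadataStream" if role == "metadata" else "DataStream"
        lines.append(f"<{object_uri}> <{DCT}hasPart> <{ds}> .")
        lines.append(f"<{ds}> <{RDF_TYPE}> <{EX}{cls}> .")
        lines.append(f'<{ds}> <{DCT}format> "{media_type}" .')
    return "\n".join(lines) + "\n"


obj = "http://example.org/datafinder/abc123"
manifest = manifest_ntriples(obj, [
    ("manifest.nt", "metadata", "application/n-triples"),  # the manifest lists itself
    ("datacite.xml", "metadata", "application/xml"),
    ("funder.xml", "metadata", "application/xml"),
])
```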

Crucially, this mechanism allows an object to hold multiple metadata datastreams: the core metadata common to all objects (based on the DataCite kernel), discretionary metadata based on additional funder requirements, and domain-specific metadata that depend on the source. These can be documented in the manifest and, where XML formats are used, tools such as Elasticsearch and later versions of Solr allow us to provide schema-less indexing and querying of this arbitrary metadata without having to support a multiplicity of specialised XML schemas explicitly.
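One way to see why schema-less indexing avoids per-schema support is to flatten any XML datastream into generic path/value pairs before sending it to a search engine. The sketch below does only the flattening, using the standard library. The dotted-path naming convention, the `@` marker for attributes and the sample fragment (with a placeholder namespace) are assumptions, and the indexing call itself is not shown.

```python
import xml.etree.ElementTree as ET


def _local(name):
    """Strip a namespace: '{http://...}title' -> 'title'."""
    return name.rsplit("}", 1)[-1]


def flatten_xml(xml_text):
    """Flatten arbitrary XML into {dotted.path: [values]} for a schema-less index.

    Element text and attribute values are both recorded; paths use local names,
    so no schema or namespace needs to be registered in advance.
    """
    fields = {}

    def walk(elem, prefix):
        path = f"{prefix}.{_local(elem.tag)}" if prefix else _local(elem.tag)
        text = (elem.text or "").strip()
        if text:
            fields.setdefault(path, []).append(text)
        for attr, value in elem.attrib.items():
            fields.setdefault(f"{path}@{_local(attr)}", []).append(value)
        for child in elem:
            walk(child, path)

    walk(ET.fromstring(xml_text), "")
    return fields


# A DataCite-like fragment; the namespace URI here is a placeholder.
sample = """<resource xmlns="http://example.org/kernel">
  <creators><creator><creatorName>Researcher, A.</creatorName></creator></creators>
  <titles><title>Ice core measurements</title></titles>
  <subjects><subject subjectScheme="FAST">Glaciology</subject></subjects>
</resource>"""
fields = flatten_xml(sample)
```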

Additionally, we implement a relational-database-like disaggregation of key entities such as funders and researchers, creating distinct objects within the system to represent them. RDF is again used to map these external relationships, as well as links to other datasets and to publications within ORA. These additional ‘context’ objects serve as authority sources for information on the entities in question, storing name variations, foreign keys in external systems (for harvesting and integration) and disambiguation information. For example, an author may have several ‘unique’ identifiers within the systems that provide content to DataFinder: ORCID, ISNI, Names, ResearcherID and HESA ID, to name but a few.
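A context object for a researcher can act as the hub that ties these identifiers together: any one known identifier finds the person, and new name forms and identifiers are then recorded against them. The sketch below is hypothetical throughout. It assumes in-memory dictionaries, identifier scheme names passed as keyword arguments, placeholder identifier values, and a policy of never overwriting an existing mapping so that conflicts are left for manual disambiguation.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Person:
    """A 'context' object: one researcher, their name variants and external IDs."""
    person_id: str
    names: set = field(default_factory=set)
    identifiers: dict = field(default_factory=dict)  # scheme -> value


class PersonAuthority:
    """Hypothetical authority index from (scheme, value) pairs to context objects."""

    def __init__(self):
        self._people = {}
        self._by_id = {}

    def add(self, name, **identifiers):
        person = Person(str(uuid.uuid4()), {name}, dict(identifiers))
        self._people[person.person_id] = person
        for scheme, value in identifiers.items():
            self._by_id[(scheme, value)] = person.person_id
        return person

    def match(self, name=None, **identifiers):
        """Return the person matching any supplied identifier, recording new
        name variants and identifiers; existing mappings are never overwritten,
        so conflicting identifiers are left for manual disambiguation."""
        for scheme, value in identifiers.items():
            pid = self._by_id.get((scheme, value))
            if pid is None:
                continue
            person = self._people[pid]
            if name:
                person.names.add(name)
            for s, v in identifiers.items():
                person.identifiers.setdefault(s, v)
                self._by_id.setdefault((s, v), pid)
            return person
        return None


authority = PersonAuthority()
person = authority.add("Researcher, A.", orcid="0000-0000-0000-0001")
# A harvested record using a different name form and an extra identifier.
found = authority.match("A. Researcher", orcid="0000-0000-0000-0001", isni="0000000000000001")
```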

Objects within the system are given a universally unique identifier (UUID) on creation, which provides the basis for a persistent URL registered with the Oxford PURL resolver (purl.ox.ac.uk). The UUID is also used to reference the object internally, because UUIDs have robust uniqueness characteristics: they can be generated in multiple locations (which aids horizontal scaling of the data repository), and an object can readily be moved to another repository without fear of identifier collisions.
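Minting identifiers this way needs no central coordination: each node can generate a random UUID locally and derive the persistent URL from it. In the sketch below, the resolver host comes from the text, but the `http` scheme and the `datafinder/` path prefix are assumptions, and registering the PURL with the resolver is not shown.

```python
import uuid

# Resolver host from the text; the http scheme and "datafinder/" path are assumptions.
PURL_BASE = "http://purl.ox.ac.uk/datafinder/"


def new_object_identity():
    """Mint an identifier locally, with no central coordination, and derive its PURL.

    Registering the PURL with the resolver is a separate step not shown here.
    """
    object_id = str(uuid.uuid4())  # random (version 4) UUID
    return object_id, PURL_BASE + object_id


object_id, purl = new_object_identity()
```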

Roles and Permissions

The first release of DataFinder incorporates a very simple set of roles for users that are similar to those in use in DataBank and ORA:

- Researchers can create records within DataFinder. Records are, however, not published immediately. Researchers can create records that are attributed to others.

- Reviewers review deposited records prior to publication and, if necessary, refer them back to the researcher if they are incomplete or there are other queries. Updates by reviewers and researchers are logged in the record's audit trail.

- Administrators can delete records, return them to the review state, and also define new metadata sources for harvesting.
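The three roles above imply a small review workflow, which can be sketched as a table of permitted transitions keyed by role and current state, with every action appended to an audit trail. The state and action names, and the exact set of transitions (for example, which states an administrator may delete from), are assumptions made for illustration. Proxy deposits and harvesting configuration are not modelled.

```python
from datetime import datetime, timezone

# Permitted transitions: (role, current state) -> {action: new state}.
# State and action names are illustrative assumptions.
TRANSITIONS = {
    ("researcher", None): {"create": "in_review"},
    ("researcher", "referred_back"): {"resubmit": "in_review"},
    ("reviewer", "in_review"): {"publish": "published", "refer_back": "referred_back"},
    ("administrator", "in_review"): {"delete": "deleted"},
    ("administrator", "referred_back"): {"delete": "deleted"},
    ("administrator", "published"): {"return_to_review": "in_review", "delete": "deleted"},
}


class Record:
    def __init__(self):
        self.state = None   # not yet created
        self.audit = []     # (timestamp, user, role, action, resulting state)

    def act(self, user, role, action):
        """Apply an action if the role may perform it in the current state."""
        allowed = TRANSITIONS.get((role, self.state), {})
        if action not in allowed:
            raise PermissionError(f"{role} cannot {action} a record in state {self.state}")
        self.state = allowed[action]
        self.audit.append((datetime.now(timezone.utc), user, role, action, self.state))
        return self.state


rec = Record()
rec.act("alice", "researcher", "create")    # records are not published immediately
rec.act("rob", "reviewer", "refer_back")    # incomplete, so sent back
rec.act("alice", "researcher", "resubmit")
rec.act("rob", "reviewer", "publish")
```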

At present, reviewers need to validate records whenever a depositor has acted as a proxy for another person. Whether an automatic group-level role should be implemented to partly automate this process remains to be seen. This has not been an issue for ORA, but research data may show a markedly more rapid growth in activity, which may necessitate such functionality.

Metadata Import and Export

DataFinder will have the ability to harvest data records from other repositories, to build a complete picture of Oxford’s data holdings. In the first instance, harvesting will be carried out using OAI-PMH (Open Archives Initiative Protocol for Metadata Harvesting) as it is not only well established in the repository world in general, but it is also implemented in DataBank for precisely this purpose. While DataBank records, by design, will meet the metadata requirements of DataFinder and DataCite (to permit the issue of data DOIs), it is recognised that this may not be the case for records sourced from elsewhere.
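An OAI-PMH harvest is a loop of `ListRecords` requests that follows resumption tokens until the repository returns an empty one. The sketch below uses the real OAI-PMH and Dublin Core namespaces and extracts only each record's identifier and titles. The repository URL, the canned response pages and the injectable `fetch` function (so the loop can be tried offline) are illustrative assumptions, and error responses are not handled beyond ending the loop.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode
from urllib.request import urlopen

NS = {"oai": "http://www.openarchives.org/OAI/2.0/",
      "dc": "http://purl.org/dc/elements/1.1/"}


def http_fetch(url):
    with urlopen(url) as resp:
        return resp.read()


def list_records(base_url, fetch=http_fetch, prefix="oai_dc"):
    """Yield (identifier, titles) for every record, following resumption tokens."""
    params = {"verb": "ListRecords", "metadataPrefix": prefix}
    while True:
        root = ET.fromstring(fetch(f"{base_url}?{urlencode(params)}"))
        for rec in root.iterfind(".//oai:ListRecords/oai:record", NS):
            ident = rec.findtext("oai:header/oai:identifier", namespaces=NS)
            titles = [t.text for t in rec.iterfind(".//dc:title", NS)]
            yield ident, titles
        token = root.findtext(".//oai:resumptionToken", namespaces=NS)
        if not token:  # absent or empty token: the list is complete
            break
        # resumptionToken is an exclusive argument: send it with the verb only.
        params = {"verb": "ListRecords", "resumptionToken": token}


# Offline demonstration with two canned pages (hypothetical content).
_PAGE = ('<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/"><ListRecords>'
         '<record><header><identifier>{id}</identifier></header><metadata>'
         '<oai_dc:dc xmlns:oai_dc="http://www.openarchives.org/OAI/2.0/oai_dc/" '
         'xmlns:dc="http://purl.org/dc/elements/1.1/"><dc:title>{title}</dc:title>'
         '</oai_dc:dc></metadata></record>{token}</ListRecords></OAI-PMH>')
requested = []


def fake_fetch(url):
    requested.append(url)
    if "resumptionToken" in url:
        return _PAGE.format(id="oai:example:2", title="Second", token="<resumptionToken/>")
    return _PAGE.format(id="oai:example:1", title="First",
                        token="<resumptionToken>page2</resumptionToken>")


harvested = list(list_records("http://repository.example.org/oai", fetch=fake_fetch))
```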

DataFinder must therefore handle the following cases individually and in combination:

- Datasets may be located in more than one repository so we will acquire duplicated records, possibly with different metadata.

- Different versions of the same dataset may be in existence

- Harvested metadata may fall short of DataFinder and/or DataCite requirements

- Harvested sources may not permit updates to their records

- Harvested sources may update their records independently of the state of DataFinder

Reconciling these different conditions programmatically in a deterministic manner would be an extremely complex, if not impossible, undertaking, so DataFinder does not attempt this task. Harvested data records are maintained within the system as is, and updated only when the source system presents a new record. Duplicates and version mismatches are handled by manually creating an additional record that acts as a parent for all the variant records. The metadata in the parent may be based on the child records but can also be corrected manually. The parent record is used as the basis for indexing and discovery, with the child records displayed for information and linking to the various versions/copies. The same mechanism, with a single child record, can of course be used to augment inadequate metadata with improved material.
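The parent-record approach can be sketched as a function that never alters the harvested children. It derives a parent by taking, for each field, the first non-empty value from the children in a chosen source order, and then applies manual corrections on top. The record structure, the source-priority rule and the sample records are assumptions. The text says only that parent metadata "may be based on" the children and can be corrected manually.

```python
def make_parent(children, source_priority=(), corrections=None):
    """Build a parent record over duplicate or variant harvested child records.

    Children are left untouched. For each field the parent takes the first
    non-empty value, consulting children in source_priority order; manual
    corrections then override anything derived from the children.
    """
    rank = {source: i for i, source in enumerate(source_priority)}
    ordered = sorted(children, key=lambda c: rank.get(c["source"], len(rank)))
    metadata = {}
    for child in ordered:
        for key, value in child["metadata"].items():
            if value and key not in metadata:
                metadata[key] = value
    metadata.update(corrections or {})
    # The parent is what gets indexed; child IDs are kept for display and linking.
    return {"metadata": metadata, "children": [c["id"] for c in children]}


# Two hypothetical harvested copies of the same dataset.
databank_rec = {"id": "databank:1", "source": "DataBank",
                "metadata": {"title": "Ice cores", "publisher": "University of Oxford", "date": "2013"}}
ukda_rec = {"id": "ukda:99", "source": "UKDA",
            "metadata": {"title": "Ice core measurements v2", "creator": "Researcher, A.", "date": ""}}
parent = make_parent([ukda_rec, databank_rec], source_priority=["DataBank", "UKDA"],
                     corrections={"title": "Ice core measurements"})
```

Calling `make_parent` with a single child and some corrections covers the other case mentioned above, augmenting one inadequate harvested record.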

While it is not possible to fully automate the handling of these awkward cases, the creation of these parent records can be automated to some degree based on the fact that we have authority lists and some metadata available in the system already. The extent to which these will be implemented depends largely on the volume of such cases that we encounter.

DataFinder also supports another, indirect, source of metadata in the form of DMPOnline data management plans, which are archived in DataBank as digital objects in their own right. While these plans do not usually describe individual datasets explicitly, they do provide some information about the people, projects and funders associated with data-generating activities. This information can be used to improve the manual entry experience by pre-filling some fields or providing limited selection lists for others. It can also help improve the accuracy of automated processing of harvested material where this occurs.

Conclusion

The choices made and decisions taken in designing and developing DataFinder have resulted in a service that we believe is fit for the institution's purpose. How successful those decisions have been will not become evident until DataFinder has been running as a full mainstream service for some time. Some decisions were governed by external drivers such as funder requirements; others were taken to simplify the deposit process for hard-pressed, busy researchers. Underpinning the development has been the principle that metadata should be reused, entry simplified, and terms made consistent wherever possible, in order to encourage deposit and the creation of as many records as possible. We expect to provide a first release in mid-2013, working towards a mature service (once a suitable business model has been agreed) by the end of 2014.

References

1. Policy on the management of research data and records http://www.admin.ox.ac.uk/rdm/

2. Damaro Project http://damaro.oucs.ox.ac.uk/

3. Oxford DMPOnline http://www.jisc.ac.uk/whatwedo/programmes/di_researchmanagement/managingresearchdata/dmponline/oxforddmponline.aspx

4. DataStage http://www.dataflow.ox.ac.uk/index.php/about/about-datastage

5. ORDS (Online Research Database Service) http://ords.ox.ac.uk/

6. UK Data Archive (UKDA) http://data-archive.ac.uk/

7. Oxford DataBank [in development] https://databank.ora.ox.ac.uk/

8. FAST (Faceted Application of Subject Terminology) http://fast.oclc.org/searchfast/

9. RCUK Research Classifications http://www.rcuk.ac.uk/research/Efficiency/Pages/harmonisation.aspx

10. JACS (Joint Academic Coding System) http://www.hesa.ac.uk/index.php?option=com_content&task=view&id=158&Itemid=233

11. RIOXX http://rioxx.net/

12. CKAN http://ckan.org/

13. ColWiz http://www.colwiz.com/

14. Solr index and search http://lucene.apache.org/solr/

15. Piwik Web analytics http://piwik.org/

16. Fedora Commons http://fedora-commons.org/

Author Details

Email: sally.rumsey@bodleian.ox.ac.uk

Web site: http://www.bodleian.ox.ac.uk/

Sally Rumsey is Digital Research Librarian at the Bodleian Libraries where she manages the institutional publications repository, ORA. She is working with colleagues across the University of Oxford to develop and implement a range of services to support research data management. Sally is Senior Programme Manager for the University’s Open Access Oxford programme.

Email: neil.jefferies@bodleian.ox.ac.uk

Web site: http://www.bodleian.ox.ac.uk/

Neil Jefferies, MA, MBA is Research and Development Project Manager for the Bodleian Libraries, responsible for the development and delivery of new projects and services. He was involved with the initial setup of the Eprints and Fedora Repositories at Oxford and is now working on the implementation of a long-term digital archive platform. Alongside his commitments to Cultures of Knowledge, Neil is Technical Director of the IMPAcT Project, Co-PI on the DataFlow Project, and an invited contributor to the SharedCanvas Project. He also represents the Bodleian on the Jisc PALS (Publisher and Library Solutions) panel. Previously, he has worked in a broad range of computer-related fields ranging from chip design and parallel algorithm development for Nortel, writing anti-virus software for Dr Solomon’s, and developing corporate IT solutions and systems for several major blue-chips.