Purposeful Gaming: Work as Play

Patrick Randall looks at how games can be used to crowdsource improvements to OCRed text in digitization initiatives.

In 2011, Microtask and the National Library of Finland launched DigitalKoot [1], a project that used computer games to correct faulty Optical Character Recognition (OCR) output for historic newspapers. The project was an overwhelming success: in less than a year, thousands of volunteers completed over 2.5 million tasks [2]. Its larger significance, however, lay in introducing gamification to non-profit digital preservation. Since late 2013, four U.S. institutions have developed the concept further in a project called Purposeful Gaming and BHL [3].

I. A brief explanation of gamification

While there is disagreement over the exact origins of the term gamification, most scholars attribute it to British programmer and game designer Nick Pelling, who is said to have coined it in 2002 or 2003 [4]. Pelling confirmed this via email, adding that as he originally conceived it, “gamification was about the inevitability of games concepts taking over the cultural and business mainstream, all the way from digital content platforms (such as iTunes, AppStore, etc) to immersive user interfaces (touch screen phones)” [5].

If the coinage of gamification is disputed, its definition is even more contested. Merriam-Webster defines gamification as “the process of adding games or gamelike elements to something (as a task) so as to encourage participation” [6]. These elements are usually points, levels, leaderboards, and achievement badges. Reflecting the term's predominant use by corporations (where the practice is often called “enterprise gamification”), Oxford Dictionaries adds that gamification is used “typically as an online marketing technique to encourage engagement with a product or service” [7]. This commercial emphasis has led some critics to call corporate gamification “exploitationware” [8]. Others have objected to applying the term to activities that strip out meaningful narrative and reduce gameplay to the accumulation of points and badges [9]; Pelling makes a point of distancing himself from such uses. Game designer Jane McGonigal argues that gamification cannot simply convince players to complete tasks they would otherwise find uninteresting [10], and Kris Duggan, co-founder of the enterprise gamification company Badgeville, has called for abandoning the term altogether [11].

These arguments make clear that much of the debate over the definition of gamification is less about describing what it is than prescribing what it should be. The concept, and perhaps its name, will change as it matures, but in the contexts of cultural heritage and citizen science, gamification (the games are often called “serious games” when they are not oriented primarily around commerce or entertainment) can be defined simply as the use of game elements to enable and reward users in the completion of a given task. Dr. Mary Flanagan, founder of Tiltfactor (which designed the games for Purposeful Gaming and BHL), argues that this form of gamification represents an exchange between the public and the institution soliciting its help [12]:

> Cultural heritage institutions are increasingly benefiting from human computation approaches that have been used in revolutionary ways by scientific researchers. Engaging citizens to work together as decoders of our heritage is a natural progression, as preserving these records directly benefits the public. Integrating the task of transcription with the engagement of computer games gives an extra layer of incentive to motivate the public to contribute.

II. Purposeful Gaming & BHL

The Biodiversity Heritage Library (BHL) [13] is both a consortium of natural history and botanical libraries and a digital library that contains the open-access legacy literature of its members from all over the world. In September of 2013, four of its U.S. members—the Missouri Botanical Garden (MOBOT), the New York Botanical Garden, Cornell University, and the Ernst Mayr Library of the Museum of Comparative Zoology at Harvard University—were awarded a grant by the Institute of Museum and Library Services (IMLS) to “demonstrate whether or not digital games are a successful tool for analyzing and improving digital outputs from OCR and transcription activities” on the premise that “large numbers of users can be harnessed quickly and efficiently to focus on the review and correction of particularly problematic words by being presented the task as a game” [3]. The project officially ends November 30th, 2015.

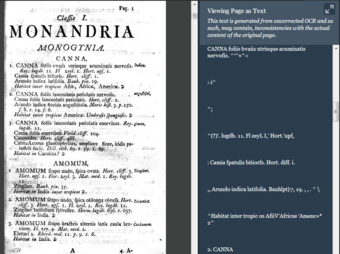

While OCR has been a major boon to digitization initiatives, the technology still cannot accurately render handwritten texts or non-standard formatting. The European Commission’s IMPACT project, created in 2010 to improve access to European historical texts, found that “[OCR] Recognition rates are poor or even useless. No commercial or other OCR engine is able to cope satisfactorily with the wide range of printed materials published between the start of the Gutenberg age in the 15th century and the start of the industrial production of books in the middle of the 19th century” [14]. The situation has improved little since.

Figure 1: Sample text and OCR output [3]

To convert scanned images into useful documents that support full-text search, many institutions have relied on manual transcription or a hybrid OCR/transcription approach. Crowdsourcing has eased some of the cost and time constraints of this strategy, but transcription quality varies widely among volunteers, and the resulting inconsistency has required close vetting by trained staff. One solution, practiced by the Smithsonian Institution’s Transcription Center [15], is to have volunteers review each other’s work before it is submitted to staff. Another, the approach taken by DigitalKoot and the Purposeful Gaming project, is to build consensus through a game and then feed the corrections directly back into the transcription.

III. Texts and Transcription

Two groups of BHL texts, both poor candidates for available OCR software, were chosen for Purposeful Gaming. The first comprises the 19th-century handwritten journals and diaries of ornithologist William Brewster [16]. These writings are representative of many similar materials for which OCR produces an unsatisfactory transcription, mostly because of the cursive script but also because of scientific names and marginalia. The second group consists of historical seed and nursery catalogs [17], which are challenging because of their irregular formatting, varied typefaces and font sizes, and interspersed images.

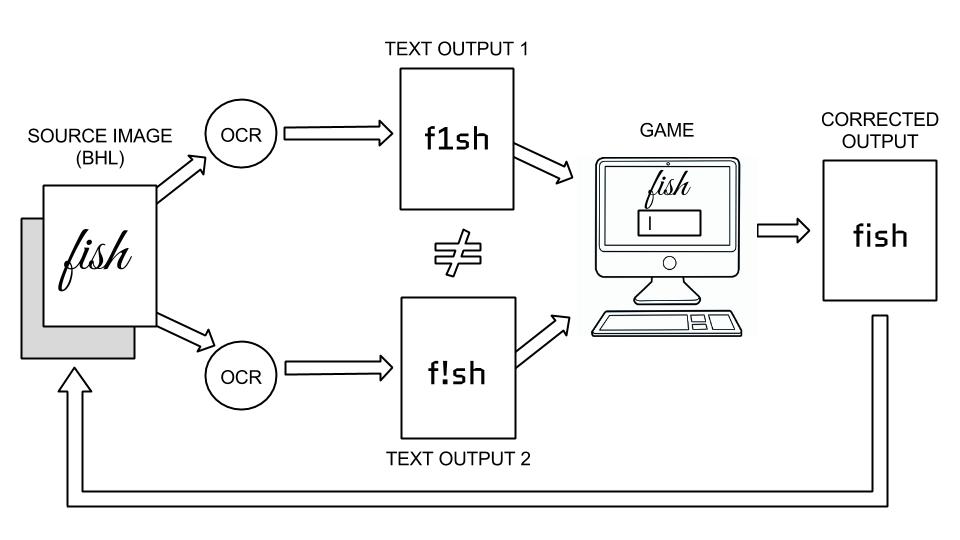

Originally, the games were intended to reconcile two manual transcriptions of the same text, which would presumably yield a third, superior version. To that end, duplicate sets of scanned texts were hosted on two crowdsourcing sites, Ben Brumfield’s FromThePage [18] and the Atlas of Living Australia’s DigiVol [19], and volunteers on the two sites produced similar, but not identical, sets of transcriptions. To compare the two sets, each word in the image file must be associated with its corresponding word in each transcription; only when both transcriptions are mapped to the source in this way can they be compared word for word. Unfortunately, the page coordinate tools that would have enabled this comparison were not available within the timeframe of the grant, so the decision was made to compare two different OCR outputs instead. The manual transcriptions produced on FromThePage and DigiVol will nonetheless be paired with their source images in BHL to facilitate full-text searching and data mining.
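The word-to-image mapping described above can be sketched in a few lines. This is a minimal illustration, not the project's tooling: it assumes each transcription could be exported as words with pixel bounding boxes `(x0, y0, x1, y1)` on the page image, and the function names, the box format, and the `min_iou` cut-off are all hypothetical. Words from the two transcriptions are paired by how much their boxes overlap (intersection over union), and only pairs whose text differs are flagged.

```python
from typing import List, Tuple

# Hypothetical format: a box is (x0, y0, x1, y1) in page-image pixels,
# and a word is (text, box).
Box = Tuple[float, float, float, float]
Word = Tuple[str, Box]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes (0.0 if disjoint)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def pair_words(trans_a: List[Word], trans_b: List[Word],
               min_iou: float = 0.5) -> List[Tuple[str, str]]:
    """Pair each word in A with the B word whose box overlaps it most.

    Greedy and not one-to-one; min_iou is a hypothetical cut-off.
    """
    pairs = []
    for text_a, box_a in trans_a:
        best = max(trans_b, key=lambda w: iou(box_a, w[1]), default=None)
        if best is not None and iou(box_a, best[1]) >= min_iou:
            pairs.append((text_a, best[0]))
    return pairs


def disagreements(trans_a: List[Word], trans_b: List[Word]) -> List[Tuple[str, str]]:
    """Word pairs that occupy the same spot on the page but differ in text."""
    return [(a, b) for a, b in pair_words(trans_a, trans_b) if a != b]


a = [("Saw", (0, 0, 30, 10)), ("warbler", (35, 0, 90, 10))]
b = [("Saw", (1, 0, 31, 10)), ("warhler", (36, 0, 91, 10))]
print(disagreements(a, b))  # [('warbler', 'warhler')]
```

The greedy pairing is deliberately simple; a production aligner would also enforce one-to-one matches and handle words that one transcriber split or merged.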

IV. The Games

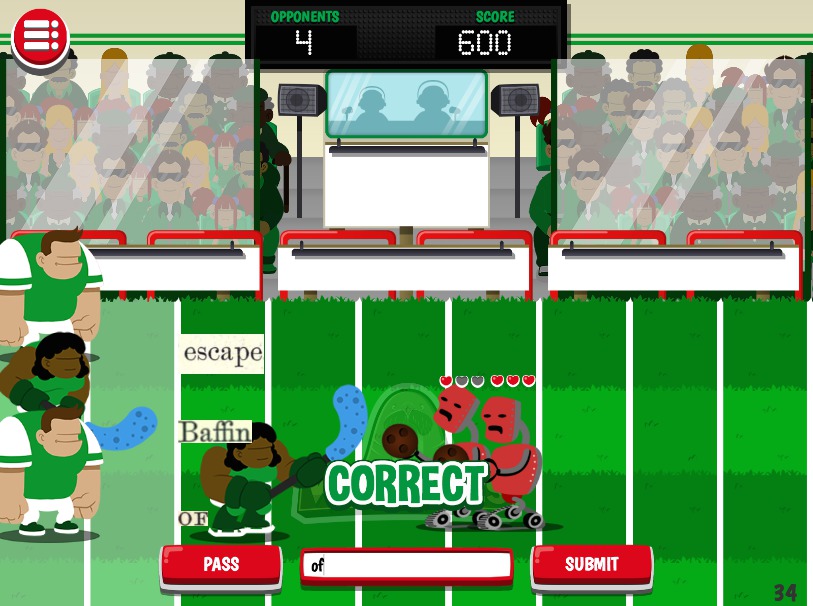

Purposeful Gaming partnered with Tiltfactor [20], a “serious games” developer based at Dartmouth College, to create two browser games for the project. The first, Smorball [21], is a fast-paced game in which players type words correctly to defeat the robotic rivals of fictional sports teams. The game incorporates traditional gameplay elements such as points, increasingly difficult levels, and leaderboards. Smorball was designed to appeal to an audience that is already comfortable with gaming and would be attracted to its tournament-style play.

Figure 2: Screenshot of Smorball gameplay

The second game, Beanstalk [22], was designed for players who are either unfamiliar with gaming or would prefer a self-paced gaming experience. In Beanstalk, players “grow” a beanstalk from a seedling each time they type a word correctly.

Figure 3: Screenshot of Beanstalk gameplay

V. Game Mechanics

Correcting a word in either game involves several steps. The first is selecting the word to be corrected: a word becomes a candidate for the game when the two OCR programs render it differently. When this happens, the game shows players an image of the disputed word and asks them to type what it says. The game then uses a modified longest common substring algorithm, together with the OCR software's best guesses for the word, to determine whether the player’s entry should be marked ‘correct’. Here, ‘correct’ means only that the game will reward the player, not that the entry is the best possible transcription of the word.
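The selection and reward steps might look like the following minimal sketch. The article does not specify how the longest common substring algorithm was modified, so the lower-casing, the length ratio, and the `min_ratio=0.5` default here are assumptions, as are all function names; the sketch also assumes the two OCR outputs are already aligned word for word.

```python
from typing import List


def find_candidates(ocr_a: List[str], ocr_b: List[str]) -> List[int]:
    """Indices of word instances on which the two OCR outputs disagree.

    Assumes both outputs are already aligned word for word.
    """
    return [i for i, (a, b) in enumerate(zip(ocr_a, ocr_b)) if a != b]


def longest_common_substring(a: str, b: str) -> int:
    """Length of the longest run of characters shared by a and b (DP table)."""
    best = 0
    prev = [0] * (len(b) + 1)
    for i in range(1, len(a) + 1):
        cur = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                cur[j] = prev[j - 1] + 1  # extend the run ending at a[i-1], b[j-1]
                best = max(best, cur[j])
        prev = cur
    return best


def is_rewarded(entry: str, ocr_guesses: List[str], min_ratio: float = 0.5) -> bool:
    """Reward the player if the entry shares a long enough substring with
    any OCR guess. min_ratio is a hypothetical tuning value."""
    typed = entry.strip().lower()
    for guess in ocr_guesses:
        g = guess.lower()
        longest = max(len(typed), len(g))
        if longest and longest_common_substring(typed, g) / longest >= min_ratio:
            return True
    return False


ocr_a = ["the", "Warbler", "was", "seen"]
ocr_b = ["the", "Warhler", "was", "scen"]
print(find_candidates(ocr_a, ocr_b))                  # [1, 3]
print(is_rewarded("Warbier", ["Warbler", "Warhler"]))  # True: rewarded, yet misspelled
```

The second call shows the distinction drawn above: *Warbier* earns a reward because it shares the run *warb* with one OCR guess, even though it is not the right transcription.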

Next, the game seeks to reconcile multiple player entries for a single instance of a word (because OCR may render a word differently each time it is encountered in a document, each instance must be treated as a discrete word). Once players have supplied a minimum number of ‘correct’ entries for a given instance, the game examines the two most common interpretations for clear agreement according to the following algorithm:

An instance is agreed upon if

$$\frac{W_1}{W_1 + W_2} > 0.75$$

where $W_1$ is the number of times the most frequently typed word has been typed and $W_2$ is the number of times the second most frequently typed word has been typed.
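Worked through with numbers: if seven players type *Warbler* and two type *Warhler*, the ratio is 7/(7+2) ≈ 0.78, which exceeds 0.75, so the instance is tagged complete; six against two gives exactly 0.75, which does not, because the inequality is strict. A minimal Python sketch of the rule follows. The function name and the default `min_entries=3` are illustrative assumptions (the article does not give the project's minimum), and entries are compared as exact strings, since the article does not say whether the games normalize case or whitespace before counting.

```python
from collections import Counter
from typing import List, Optional


def consensus(entries: List[str], min_entries: int = 3,
              threshold: float = 0.75) -> Optional[str]:
    """Return the agreed-upon word for one instance, or None if undecided.

    min_entries is a placeholder value, not the project's actual setting.
    """
    if not entries or len(entries) < min_entries:
        return None  # not enough 'correct' entries yet
    top_two = Counter(entries).most_common(2)
    w1 = top_two[0][1]                                # most frequent word's count
    w2 = top_two[1][1] if len(top_two) > 1 else 0     # runner-up's count, if any
    return top_two[0][0] if w1 / (w1 + w2) > threshold else None


print(consensus(["Warbler"] * 7 + ["Warhler"] * 2))  # Warbler  (7/9 ≈ 0.78)
print(consensus(["Warbler"] * 6 + ["Warhler"] * 2))  # None     (6/8 = 0.75)
```

Note that the rule looks only at the two most common entries; a scattering of third and fourth variants does not count against agreement.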

If there is agreement, that instance is tagged as complete. Only when all of the words from an original page of text are tagged will they cease appearing in the game.

Once an entire page has been tagged, it can replace the original OCR outputs. Project staff are currently working on a tool that would export these revised outputs to BHL, where they will accompany the scanned images of the original documents.
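The page-level step can be sketched under an assumed representation (hypothetical, since the article does not describe the project's data model): a page's words as one OCR engine's list, plus a mapping from the position of each disputed instance to its consensus word, or `None` while that instance is still in play. Words the two OCR outputs agreed on never enter the game, so they pass through unchanged.

```python
from typing import Dict, List, Optional


def corrected_page(ocr_words: List[str],
                   resolved: Dict[int, Optional[str]]) -> Optional[List[str]]:
    """Merge consensus words into one OCR output for a page.

    ocr_words: one engine's word list for the page.
    resolved:  index of each disputed instance -> consensus word,
               or None if that instance is not yet tagged complete.
    Returns None until every disputed instance on the page is complete.
    """
    if any(word is None for word in resolved.values()):
        return None  # page stays in the game
    return [resolved.get(i, word) for i, word in enumerate(ocr_words)]


ocr = ["the", "Warhler", "was", "scen"]
print(corrected_page(ocr, {1: "Warbler", 3: None}))    # None
print(corrected_page(ocr, {1: "Warbler", 3: "seen"}))  # ['the', 'Warbler', 'was', 'seen']
```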

Figure 4: Process by which transcriptions are created and reconciled

VI. Measuring Results

Several metrics can be used both to quantify the output of the project and to gauge player response:

1. Pages corrected. This is the most significant figure, and one that will, by design, increase more rapidly as the project progresses, even at a constant rate of play. Because each word instance (there are 40,000 in the Smorball database) must be typed a minimum number of times before the algorithm looks for clear agreement, most pages will not be marked complete until a critical mass of players has reviewed them. After one day-long session in which volunteer members of the public produced 10,000 entries but only a minimal increase in completed pages, Tiltfactor developers lowered the minimum number of entries required before agreement is evaluated (i.e., a word instance now needs to be encountered fewer times before it is eligible to be tagged complete). Words that have already surpassed the new threshold but have not been tagged will be reevaluated the next time they are encountered.

2. Number of players. This sheds light on the effectiveness of outreach and promotion as well as the relative popularity of each game. Initial feedback suggests that reception of the games varies by audience. Beanstalk was marketed toward citizen scientists on the assumption that they were unlikely to be avid gamers and would therefore appreciate a more relaxed game requiring little instruction. What attracts these volunteers to digital initiatives like BHL in the first place, however, is the substantive nature of the work; they have contributed thousands of hours to the tedious task of manual transcription. One BHL user, admitting that he does not consider himself a “gamer,” commented that Beanstalk’s lack of context made him lose interest [23]; another suggested using a “canvas of pages” of content as a backdrop for gameplay [24].

By isolating words from the original texts, both games divorce the task from its broader context. Smorball is challenging enough to attract gamers who may or may not be invested in preserving historic texts, but Beanstalk is less likely to hold the attention of either gamers or citizen scientists, the latter of whom want meaningful interaction with the underlying material.

3. Average session time. This indicates whether the games hold players’ interest as well as whether the progressive difficulty of Smorball, in particular, is a deterrent or an incentive to further play. Early on, this can also be an indication of technical problems that could cause game sessions to close prematurely.

4. Website referrals. This indicates where traffic is being generated and where further resources should be devoted to outreach. Early analytics suggest that most players are accessing the games through the BHL and Tiltfactor websites rather than through Facebook or Twitter, where they have been heavily promoted. This speaks again to the priorities of the volunteer audiences and their attraction to context, whether biodiversity or gaming.
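Item 1 above explains why the agreement threshold matters so much to the pages-corrected figure. With 40,000 word instances in the Smorball database and, purely for illustration, a minimum of five entries per instance, completing every page would take at least 40,000 × 5 = 200,000 entries, twenty times the 10,000 produced in that day-long session. Because a page is complete only when every instance on it is tagged, entries spread across many pages complete very few of them. The toy model below is not the project's code: it scatters entries uniformly at random over word instances, assumes every entry for an instance agrees, and counts completed pages at several thresholds; the page counts, words per page, and entry totals are hypothetical.

```python
import random


def pages_complete(num_pages: int, words_per_page: int, num_entries: int,
                   min_entries: int, seed: int = 0) -> int:
    """Toy model: count pages whose every word instance has >= min_entries.

    Assumes entries land uniformly at random on instances and that all
    entries for an instance agree, so reaching min_entries completes it.
    """
    rng = random.Random(seed)
    counts = [0] * (num_pages * words_per_page)
    for _ in range(num_entries):
        counts[rng.randrange(len(counts))] += 1
    return sum(
        all(c >= min_entries
            for c in counts[p * words_per_page:(p + 1) * words_per_page])
        for p in range(num_pages)
    )


# Same seed means the same entries; only the threshold changes.
for k in (6, 4, 2):
    print(k, pages_complete(num_pages=50, words_per_page=20,
                            num_entries=5_000, min_entries=k))
```

Because the random seed fixes which instances receive entries, lowering `min_entries` can only keep or raise the number of completed pages, which mirrors the effect of the developers' adjustment.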

VII. Conclusions

Game-generated data will be aggregated at the conclusion of the project, but preliminary results and user feedback have already been invaluable in demonstrating a proof of concept. Trish Rose-Sandler, Principal Investigator for Purposeful Gaming, summarizes the direct benefit to BHL [12]:

> The games provide a fun and engaging way for volunteers to help us with a task that we don’t have the staff to do ourselves. BHL benefits by having improved discoverability of its books and journals on plant and animal life. More importantly, benefits from the results of the project would extend to the broader digital library community. Any institution managing large text collections can learn from novel and more cost-effective approaches to generating searchable texts.

How widely these approaches are adopted across digital preservation initiatives depends on the ability of developers and institutions to address several challenges.

First, page coordinate software must be improved. Current OCR technology cannot produce even a baseline transcription of handwritten texts, so for the foreseeable future volunteers will continue to transcribe these materials manually. Only when page coordinate systems can be implemented alongside transcription tools will it be possible to compare two manual transcriptions, or a manual transcription with an OCR output. Moreover, relying on OCR outputs alone means that games must also resolve simple or commonly occurring words that human transcribers recognize easily. A high proportion of such words may make a game too easy or, worse, monotonous.

Second, games must balance the interests of volunteers. The very process by which gamification streamlines and simplifies tasks may be off-putting to volunteers who relish direct interaction with texts. At the same time, the inclusion of too much context can make games unwieldy or alienating to players who are drawn primarily to gameplay. Games need not satisfy everyone, but they must have broad enough appeal to generate a level of interest that justifies their development.

Finally, gamification must scale if it is to improve access to a significant number of texts. Even the largest institutions have limited resources, and many smaller ones lack the funds or expertise to create their own games. To reap the benefits of gamification, such institutions must pool their content in consortia like BHL. The proliferation of open-source game code, like that produced by Tiltfactor, could one day put gamification within reach of everyone. If that happens, we can expect, in keeping with Pelling’s early vision, to see games and their texts increasingly integrated through plugins and widgets across multiple platforms and devices. The more the concept is tested, the sooner the technology will be available to all.

References

1. DigitalKoot http://www.digitalkoot.fi/

2. Chrons, O., & Sundell, S. (2011). Digitalkoot: Making old archives accessible using crowdsourcing. In Human Computation: Papers from the 2011 AAAI Workshop (WS-11-11). Retrieved from http://www.aaai.org/ocs/index.php/WS/AAAIW11/paper/view/3813/4246

3. Purposeful Gaming public wiki https://biodivlib.wikispaces.com/Purposeful+Gaming

4. Fitz-Walter, Z. (2013, January 24). A brief history of gamification [Web log comment]. Retrieved from http://zefcan.com/2013/01/a-brief-history-of-gamification/

5. N. Pelling, personal communication, September 8, 2015.

6. Gamification. (n.d.). In Merriam-Webster, retrieved September 17, 2015, from http://www.merriam-webster.com/dictionary/gamification

7. Gamification. (n.d.). In Oxford Dictionaries, retrieved September 17, 2015, from http://www.oxforddictionaries.com/us/definition/american_english/gamification

8. Bogost, I. (2011, May 3). Persuasive games: Exploitationware. Retrieved from http://www.gamasutra.com/view/feature/134735/persuasive_games_exploitationware.php?page=2

9. Robertson, M. (2010, October 6). Can’t play, won’t play. Retrieved from http://www.hideandseek.net/2010/10/06/cant-play-wont-play//

10. McGonigal, J. (2011). We don't need no stinkin' badges: How to re-invent reality without gamification [Video]. Retrieved from GDC Vault: http://www.gdcvault.com/play/1014576/We-Don-t-Need-No

11. del Castillo, M. (2013, April 30). Badgeville founder takes ‘gamification’ off the vocabulary list. Retrieved from http://upstart.bizjournals.com/news/technology/2013/04/30/kris-duggan-of-badgeville-on-gamifying.html

12. Biodiversity Heritage Library. (2015, June 9). Smorball and Beanstalk are live! [Web log comment]. Retrieved from http://blog.biodiversitylibrary.org/2015/06/smorball-and-beanstalk-are-live.html

13. Biodiversity Heritage Library http://www.biodiversitylibrary.org/

14. IMPACT. (2012). Facts and figures. Retrieved from http://www.impact-project.eu/about-the-project/facts-and-figures/

15. Smithsonian Digital Volunteers: Transcription Center https://transcription.si.edu/

16. Journals of William Brewster on BHL http://www.biodiversitylibrary.org/bibliography/77525#/summary

17. BHL Seed & Nursery Catalog Collection http://www.biodiversitylibrary.org/collection/seedcatalogs

18. FromThePage http://beta.fromthepage.com/

19. DigiVol http://volunteer.ala.org.au/

20. Tiltfactor http://www.tiltfactor.org/

21. Smorball http://smorballgame.org/

22. Beanstalk http://beanstalkgame.org/

23. Page, R. (2015, July 23). Purposeful games and the Biodiversity Heritage Library [Web log comment]. Retrieved from http://iphylo.blogspot.co.uk/2015/07/purposeful-games-and-biodiversity.html

24. Shorthouse, D. [dpsSpiders]. (2015, July 23). @rdmpage @biodivlibrary Ever-growing beanstalk and cutesy sounds would be more effective against a canvas of pages where words originate [Tweet]. Retrieved from https://twitter.com/dpsSpiders/status/624208895360282624